Fighting toxicity in multiplayer games remains an ongoing puzzle for developers: Ubisoft has been trying for years to reduce player toxicity in Rainbow Six Siege, Valve has tried muting and banning toxic Dota 2 players, and EA stripped thousands of assets out of its games in the hopes of promoting a more positive environment. Now, a Boston-based software developer has launched a tool that might help make meaningful progress.

The tool is called ToxMod, and it’s a software package that uses machine learning AI to identify toxic communication during online game sessions. ToxMod recognises patterns in speech, looking at waveforms to not only tell what players are saying, but how they’re saying it – and it can ‘bleep’ out abusive language live.

The concept for ToxMod came out of work done by Mike Pappas and Carter Huffman and their company Modulate, which they founded after meeting each other while studying physics at MIT. Pappas says they met at a whiteboard, working out a physics problem, and quickly decided to start a company together after finding they approached problems in complementary ways.

“As big gamers ourselves, gaming was top of mind from the beginning,” Pappas tells us. “When we started thinking about the applications there, we were thinking of the success of Fortnite and customisable skins and all of those tools for more immersion and more expressiveness in games.”

Their first big idea was to create skins for players’ voices: ‘cosmetic’ enhancements that players could use to change how they sound over voice chat. But Pappas says that as the pair consulted with game developers about using voice skins, they heard time and again how studios were struggling to find ways to make players feel safer using voice chat – toxicity was keeping them from wanting to pick up a headset at all.

That led Modulate to look at how the machine learning tech it had built could make more players feel safer online. Using voice skins could let someone conceal their age, gender, ethnicity, or anything else that could make them a target for harassment online. Pappas says Modulate wanted to think bigger, though – they wanted to build a “comprehensive solution to this voice chat problem.”

“A big thing that had come up in our studio conversations were questions like, could you live-bleep swear words?” he says. “Or could you detect if someone is harassing someone, and alert our team about that?”

Using the tech it had already built, Modulate developed ToxMod, which scans vocal waveforms to work out both what players are saying and how they’re saying it. The algorithm is sophisticated enough to pick out nuances of human speech, detecting spikes in aggressiveness and responding on the fly.

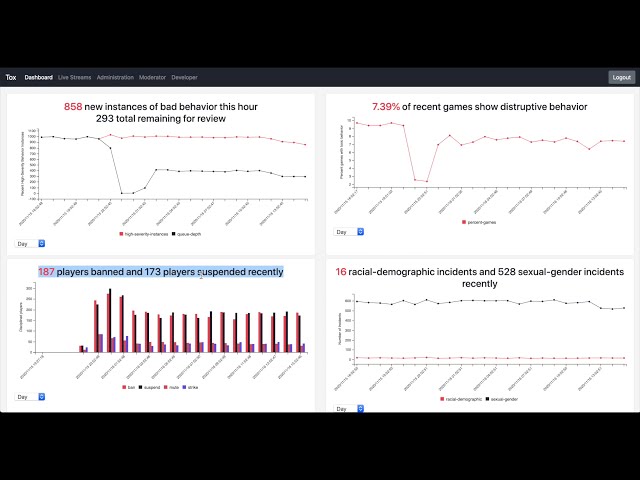

The ToxMod SDK allows developers to build the tech right into their games, with much of the filtering happening on the client side, Pappas says. Another layer of screening happens on the server side, which is where ‘grey area’ speech can be flagged and handled by a human moderator if necessary, but over time, ToxMod’s AI learns to handle more and more cases without needing human intervention. Developers can custom-tune ToxMod to suit their games’ and their communities’ specific needs, choosing thresholds appropriate for that setting and audience.

“How highly do you want to rate violent speech as a potential threat? If you’re playing Call of Duty, maybe that’s less of a big problem, unless it’s extremely severe,” Pappas says. “Whereas if you’re playing Roblox, then maybe it’s a problem no matter what.”
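The per-game tuning Pappas describes can be pictured as a table of thresholds that routes each piece of speech to one of three outcomes. The sketch below is purely illustrative and is not the ToxMod SDK: the game labels, the `violent_speech` category, the `Thresholds` and `triage` names, and every number are assumptions made up for this example. It assumes some upstream model has already produced a severity score between 0 and 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    flag: float   # at or above this score, send the clip to a human moderator
    block: float  # at or above this score, act automatically (e.g. bleep)


# Hypothetical per-game settings; the values are invented for illustration.
GAME_THRESHOLDS = {
    "mature_shooter": {"violent_speech": Thresholds(flag=0.80, block=0.95)},
    "kids_sandbox": {"violent_speech": Thresholds(flag=0.20, block=0.50)},
}


def triage(game: str, category: str, score: float) -> str:
    """Return 'allow', 'review', or 'block' for a severity score in [0, 1]."""
    limits = GAME_THRESHOLDS[game][category]
    if score >= limits.block:
        return "block"
    if score >= limits.flag:
        return "review"  # the 'grey area' handled by a human moderator
    return "allow"


# The same utterance gets a different outcome depending on the game.
print(triage("mature_shooter", "violent_speech", 0.6))
print(triage("kids_sandbox", "violent_speech", 0.6))
```

With these made-up settings, a score of 0.6 passes unremarked in the shooter but is blocked outright in the children's game, which mirrors the Call of Duty and Roblox contrast in the quote.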

ToxMod also works as a predictive tool and can manage player reputations. It looks at what’s happening in-game along with the speech it’s analysing, so it can quickly work out whether a player becomes more prone to toxic behaviour under specific circumstances, such as a string of losses or a teamkill. That means it can make recommendations to a game’s matchmaking servers that can help prevent those circumstances from arising at all.

“What we really want to be able to understand is if someone has lost the last five games, and they’re super, super frustrated, and they get teamed up with a couple of women who are new to the game, and in their frustration that they’re about to lose the game, they start to say some sexist things,” Pappas explains. “Is that bad? Absolutely. We still want to flag that in the moment because those women are now having a bad time, and we absolutely need to stop that in the moment. But in terms of what the repercussions are for that actor, it might not be that you get banned for 30 days.

“Instead, it might be that we have learned that you get aggravated really quickly, and so when you’ve lost the last few rounds, maybe we should advise the matchmaking system, ‘hey, throw this guy a bone, give him some more experienced teammates so that he gets a win in, so that he stays in a kind of emotional state where he’s not likely to explode at people.’”
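The scenario Pappas outlines boils down to a simple rule: if a player is on a losing streak and has a record of lashing out during such streaks, nudge the matchmaker. A minimal sketch of that idea follows. It is not how Modulate implements it; `PlayerHistory`, `matchmaking_hint`, the hint strings, and the streak and incident limits are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PlayerHistory:
    recent_results: List[str]           # oldest first, each "W" or "L"
    incidents_on_loss_streaks: int = 0  # past flags raised during losing runs


def loss_streak(results: List[str]) -> int:
    """Count consecutive losses at the end of the results list."""
    streak = 0
    for result in reversed(results):
        if result != "L":
            break
        streak += 1
    return streak


def matchmaking_hint(history: PlayerHistory,
                     streak_limit: int = 3,
                     incident_limit: int = 2) -> str:
    """Suggest easing the next match for players known to tilt after losses."""
    on_streak = loss_streak(history.recent_results) >= streak_limit
    known_to_tilt = history.incidents_on_loss_streaks >= incident_limit
    if on_streak and known_to_tilt:
        return "prefer_experienced_teammates"
    return "no_change"


frustrated = PlayerHistory(["W", "L", "L", "L"], incidents_on_loss_streaks=2)
print(matchmaking_hint(frustrated))
```

The hint is only advice to the matchmaker. As the quote makes clear, the abusive speech itself would still be flagged in the moment.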

Each game and its community of players is a unique case with specific needs, and Pappas says Modulate can work with developers to fine-tune the software to fit those requirements. Modulate isn’t ready to announce any official partnerships yet, but it has been in talks with members of the Fair Play Alliance, a coalition of game publishers and developers committed to fostering healthy communities. That group includes the likes of Epic Games, EA, Riot, Roblox, Blizzard, and Ubisoft, among many others.

“We want to fix voice chat,” Pappas says. “We can come in and not just be providing them one tool, but actually partnering with them and saying we have this suite of tools that allows us to really shape your voice chat experience, however you need it to be, and allow you to really make sure that overall it’s going to be the rich experience that it should be.”

You can find more information about ToxMod at Modulate’s official site, and Pappas says it’s ready to roll out today. Modulate officially launched the tool on December 14.

“ToxMod can be plugged in by any game developer tomorrow, and they can start taking a look at all the audio that it’s processing through,” he says. “The technology is ready. We’re hard at work on getting some of these initial integrations into place – so it’s gonna be an exciting year, for sure.”