Some new benchmarks have surfaced over the last week which show an unreleased AMD Vega-based GPU being put through its paces. The compute-intensive SiSoft Sandra test has it comfortably ahead of the GTX 1080.


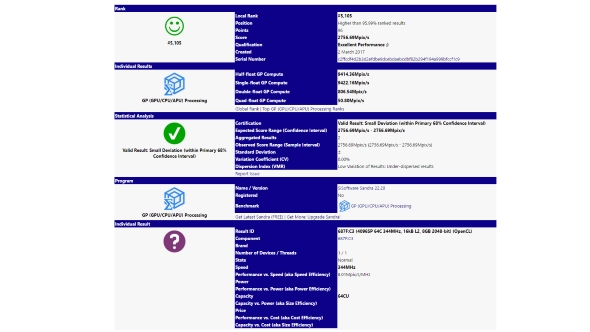

The most recent benchmark to appear is the SiSoft Sandra general-purpose compute test, which seems to be using a later revision of the Vega card than the one we’ve previously seen in the Ashes of the Singularity and CompuBench results. Those earlier results came from a card with the device ID 687F:C1. The new entry, 687F:C3, shares the same base device ID but carries what looks like a later revision code, potentially making these latest results more representative of the final release.

The overall general-purpose compute score of what we expect to be the flagship AMD Radeon RX Vega card comes in at 2,756.69 Mpix/sec, while the GTX 1080 scores 2,050.72 Mpix/sec. That’s a lead just shy of 35% for the AMD card on the computational side of the equation. Running the same test on our GTX 1080 Ti, however, puts the latest Nvidia card at 3,451.05 Mpix/sec, some 25% quicker again than the RX Vega.
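For anyone wanting to check the percentages, here’s a quick sketch that works them out from the three Sandra scores quoted above. The variable and function names are our own, purely for illustration; the lead is simply the ratio of the two scores minus one.

```python
# Scores quoted above, in Mpix/sec, from the SiSoft Sandra GPGPU test.
rx_vega = 2756.69      # Vega engineering sample, device ID 687F:C3
gtx_1080 = 2050.72
gtx_1080_ti = 3451.05

def percent_lead(faster: float, slower: float) -> float:
    """Return how much higher `faster` scores than `slower`, as a percentage."""
    return (faster / slower - 1.0) * 100.0

vega_vs_1080 = percent_lead(rx_vega, gtx_1080)
ti_vs_vega = percent_lead(gtx_1080_ti, rx_vega)

print(f"RX Vega over GTX 1080: {vega_vs_1080:.1f}%")     # 34.4%
print(f"GTX 1080 Ti over RX Vega: {ti_vs_vega:.1f}%")    # 25.2%
```

That gives a 34.4% lead for the Vega card over the GTX 1080, and a 25.2% lead for the GTX 1080 Ti over the Vega card.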

What that compute test doesn’t show, however, is how well the RX Vega is going to perform in-game, though we have seen live demos of the RX Vega running both Doom and Star Wars Battlefront at 4K and providing performance not far shy of the GTX 1080 Ti.

The SiSoft Sandra entry also shows some core configuration details for the Vega-based GPU: 4,096 GCN cores spread across 64 compute units. It lists the memory as 8GB running across a 2,048-bit bus, which would mean we’re looking at a pair of 4GB HBM2 stacks, each providing a 1,024-bit memory interface.
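The memory layout follows from simple division. The sketch below reproduces it from the figures Sandra reports. The only outside input is that each HBM2 stack presents a 1,024-bit interface, and the names are hypothetical, chosen just for this illustration.

```python
# Configuration reported by SiSoft Sandra for the Vega sample.
total_cores = 4096
compute_units = 64
total_memory_gb = 8
total_bus_width_bits = 2048

# Assumption: each HBM2 stack exposes a 1,024-bit interface.
HBM2_STACK_BUS_BITS = 1024

cores_per_cu = total_cores // compute_units            # 64 cores per CU
stacks = total_bus_width_bits // HBM2_STACK_BUS_BITS   # 2 stacks
gb_per_stack = total_memory_gb / stacks                # 4GB each

print(f"{cores_per_cu} cores per compute unit")
print(f"{stacks} HBM2 stacks x {gb_per_stack:g}GB, {HBM2_STACK_BUS_BITS}-bit each")
```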

As the potential RX Vega card is an unreleased device, Sandra is understandably having a little trouble making sense of the GPU’s sensors. She’s been reading the clock speed as a paltry 344MHz and the L2 cache as just 16kB. If she is reading them right, though, AMD’s engineers have performed some serious miracles to get that sort of performance out of it…
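To see why that 344MHz reading looks implausible, here’s a rough back-of-the-envelope sketch of the card’s theoretical peak FP32 throughput at that clock. It assumes each GCN core retires one fused multiply-add (counted as two floating-point operations) per clock, which is the usual convention for quoting GCN peak figures. The function name is our own, and this is a paper estimate rather than anything Sandra reports.

```python
# Hypothetical sanity check: theoretical peak FP32 throughput if the
# 344MHz clock reading were accurate.
cores = 4096
reported_clock_mhz = 344

# Assumption: one FMA per core per clock, counted as two FLOPs.
FLOPS_PER_CORE_PER_CLOCK = 2

def peak_fp32_tflops(num_cores: int, clock_mhz: float) -> float:
    """Theoretical peak single-precision throughput in TFLOPS."""
    return num_cores * FLOPS_PER_CORE_PER_CLOCK * clock_mhz * 1e6 / 1e12

print(f"Peak FP32 at {reported_clock_mhz}MHz: "
      f"{peak_fp32_tflops(cores, reported_clock_mhz):.2f} TFLOPS")  # 2.82 TFLOPS
```

At around 2.8 TFLOPS on paper, a card running at that clock would struggle to beat a GTX 1080 in a compute test. That points to a misread sensor rather than a genuine figure.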

But if Sandra is struggling with the sensors, it’s also possible the benchmark result isn’t hugely reliable.

Via ComputerBase