When we say that empathy is the ability to put ourselves in another’s shoes, we usually mean it metaphorically. But hey, this is videogames: we regularly inhabit the size 12s of action heroes, physically and literally.

In Empathy, that chance to see through somebody else’s eyes is turned into a central mechanic. We step into an empty land of tent-filled parks and teetering, Jenga-like tower blocks, and it’s only by inhabiting the first-person memories of others that we can work out where everyone went.

<iframe width="590" height="332" src="https://www.youtube.com/embed/jN3vTY8yWd4" frameborder="0" allowfullscreen></iframe>

There have been no complex NPC behaviours or explosion effects for Stockholm developers Pixel Night to grapple with – but story-driven, exploratory adventure games like this one come with their own unique technical challenges.

Pulling the trigger on story

Scientists haven’t yet conducted any studies into what the most distressing possible bug in a game is, but if they did, they’d probably conclude that it was ‘two lines of dialogue playing at the same time’.

“That’s been one of my headaches for a very long time,” admits lead developer Anton Pustovoyt.

In the interest of avoiding that upset, some first-person narrative games simply space out dialogue trigger points in the environment. If the player moves, for the sake of argument, at three metres per second, and a recording takes 30 seconds to play, the player can cover at most 90 metres before it ends, so the developer might place the next trigger point 100 metres down the road. That way, they know the player couldn’t possibly reach it before they’ve finished listening to the first recording.
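For the curious, that spacing rule is just speed multiplied by time. Here is a minimal Python sketch of the arithmetic; the function name `min_trigger_spacing` and the `margin_m` safety buffer are our own illustrative inventions, not anything from Pixel Night or Unreal.

```python
def min_trigger_spacing(player_speed_mps: float,
                        clip_seconds: float,
                        margin_m: float = 0.0) -> float:
    """Shortest gap between two triggers that guarantees the first
    recording has finished before the player can reach the second.

    Hypothetical helper: the names and the margin are assumptions.
    """
    if player_speed_mps < 0 or clip_seconds < 0 or margin_m < 0:
        raise ValueError("speed, duration and margin must be non-negative")
    # Farthest the player can travel while the clip plays, plus a buffer.
    return player_speed_mps * clip_seconds + margin_m


# The article's numbers: 3 m/s for 30 s.
spacing = min_trigger_spacing(3.0, 30.0)
print(spacing)  # 90.0
```

With those figures the minimum gap is 90 metres, so a trigger placed 100 metres away leaves a 10-metre cushion. The catch is that this only holds if the designer knows the player's top speed and the route they will take.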

“Which obviously works with games with very careful linear design,” says Pustovoyt. “That’s not really an approach we wanted to take.”

Empathy takes a more meandering path. In its first level, the park, the player is free to explore and pick away at different starting points in the story – engaging with puzzles and action sequences in an undetermined order. So another solution was needed.

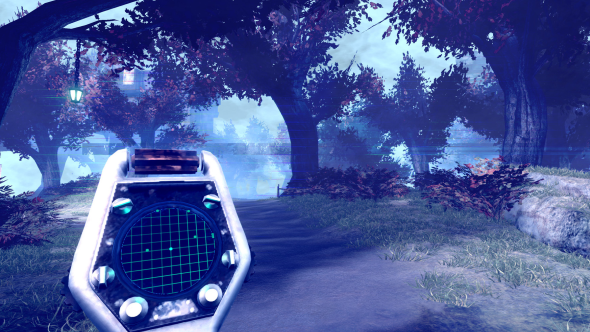

“What we did was actually add another mechanic to the game,” Pustovoyt explains. “It’s a gadget that allows you to find those narration hotspots.”

The gadget – which aesthetically and functionally sits somewhere between a compass and Alien’s motion tracker – guides the player toward key memories tied to objects. When they find those objects, the gadget begins ‘syncing’ – “in order to bring [the memory] back into our world”.

It’s a simple solution, but that time delay helps circumvent any potential dialogue mix-ups.

“Because you are busy syncing with the hotspot and the tool is busy with the item, it can’t really show the next one,” Pustovoyt notes. “So the player is occupied with the current item.”
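In code terms, the gadget behaves like a lock: while it is occupied with one memory, it refuses to start another. The Python sketch below is one way to picture that, not Pixel Night's implementation. The `Hotspot` and `Gadget` classes, their fields, and the choice to keep the gadget busy for the length of the recording as well as the sync are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Hotspot:
    """A hypothetical narration hotspot tied to an object."""
    name: str
    sync_seconds: float   # assumed: how long the gadget takes to sync
    clip_seconds: float   # assumed: how long the memory's dialogue runs


class Gadget:
    """Hypothetical tracker that handles one memory at a time."""

    def __init__(self) -> None:
        self.active: Optional[Hotspot] = None
        self.busy_until = 0.0  # game time at which the gadget frees up

    def try_sync(self, hotspot: Hotspot, now: float) -> bool:
        """Start syncing unless the gadget is still occupied."""
        if now < self.busy_until:
            return False  # still busy, so no second dialogue can start
        self.active = hotspot
        self.busy_until = now + hotspot.sync_seconds + hotspot.clip_seconds
        return True


gadget = Gadget()
hammer = Hotspot("hammer", sync_seconds=2.0, clip_seconds=10.0)
radio = Hotspot("radio", sync_seconds=2.0, clip_seconds=8.0)

print(gadget.try_sync(hammer, now=0.0))   # True
print(gadget.try_sync(radio, now=5.0))    # False
print(gadget.try_sync(radio, now=12.0))   # True
```

Because the check depends on time rather than distance, it works no matter what order the player visits the hotspots in, which is the property a non-linear level needs.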

The fog of memory

It’s one thing to transport the player into other people’s memories. It’s another to communicate to them exactly what’s happening.

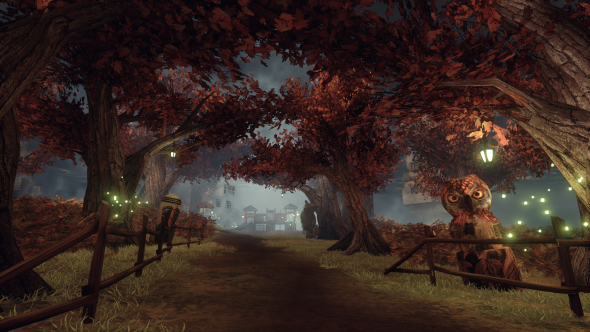

Pixel Night rely on a “memory fog” effect to indicate that a memory sequence is taking place. That sequence might find the player sleuthing or inspiring a band of rebels, depending on who the memory belongs to, and the fog fences off the area where the action is due to go down. Although an effective device, the fog brings with it attendant technical issues.

“In 3D it’s often difficult for an engine to figure out the stacking of the items with opacity,” says Pustovoyt. “Should this item be shown first, or should the other item be shown first?”
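The textbook answer to that question is to draw see-through objects from the farthest to the nearest, so that closer ones blend over whatever sits behind them. The Python sketch below shows that generic back-to-front ordering. It is not Unreal's renderer, and the `Translucent` type, object names and positions are made up for the example.

```python
from typing import List, NamedTuple, Tuple

Vec3 = Tuple[float, float, float]


class Translucent(NamedTuple):
    """A hypothetical see-through object, reduced to a single point."""
    name: str
    position: Vec3


def back_to_front(items: List[Translucent], camera: Vec3) -> List[Translucent]:
    """Order items so the one farthest from the camera is drawn first."""
    def distance_squared(item: Translucent) -> float:
        # Squared distance sorts the same as true distance, without a sqrt.
        return sum((p - c) ** 2 for p, c in zip(item.position, camera))

    return sorted(items, key=distance_squared, reverse=True)


scene = [
    Translucent("memory fog", (0.0, 0.0, 10.0)),
    Translucent("plank marker", (0.0, 0.0, 5.0)),
    Translucent("distant window", (0.0, 0.0, 50.0)),
]
for item in back_to_front(scene, camera=(0.0, 0.0, 0.0)):
    print(item.name)
```

The weakness is visible in the data structure itself: each object is sorted by one point, but a bank of fog is a large volume that can sit both in front of and behind a smaller object at once, so no single ordering is right for every pixel.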

For instance: in one memory, triggered by a hammer lying on a bench, the player embodies a disgruntled mechanic working to repair a bridge. Opaque bars of light indicate where the planks of the bridge are supposed to go – and those bars initially interfered with the opacity of the fog.

“We managed to figure it out, thanks to Unreal,” Pustovoyt goes on. “But we haven’t quite implemented that fix for the windows, which can sometimes be seen through the fog in the distance. It’s still something we have to work on.”

Making the grass grow

“You can do crazy stuff with the materials in Unreal if you have time and you want that level of graphical fidelity in the game,” says Pustovoyt. “It’s really cool stuff, just endless possibilities, but at the same time for us it was more important to get this kind of artistic style rather than realistic details.”

What Pixel Night have taken advantage of are the mathematical functions that can save time when it comes to level design. The aforementioned park is situated on a floating island, with an underside made up of craggy rocks. Instead of texturing each of the rocks by hand, Pustovoyt relies on a material trick that automatically applies textures based on a slope’s angle.

“If it’s a flat surface it gets grass,” he explains. “If it’s a vertical surface it gets rock. I don’t go in there and paint it manually.”
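The maths behind a trick like this is compact: compare the direction a surface faces with straight up, and blend between the two textures based on the angle. The Python sketch below illustrates the idea outside of any engine. The `grass_weight` function and the 30 and 50 degree thresholds are assumptions for the example, not values from Empathy, and the Z axis is treated as up, as it is in Unreal.

```python
import math
from typing import Tuple


def grass_weight(normal: Tuple[float, float, float],
                 grass_below_deg: float = 30.0,
                 rock_above_deg: float = 50.0) -> float:
    """Return 1.0 for full grass, 0.0 for full rock, or a blend between.

    Hypothetical helper: the thresholds are illustrative guesses.
    """
    nx, ny, nz = normal
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        raise ValueError("normal must be non-zero")
    # Cosine of the angle between the surface normal and world up (Z).
    cos_up = max(-1.0, min(1.0, nz / length))
    slope_deg = math.degrees(math.acos(cos_up))  # 0 = flat, 90 = vertical
    # Linear ramp from grass to rock between the two thresholds.
    t = (slope_deg - grass_below_deg) / (rock_above_deg - grass_below_deg)
    t = max(0.0, min(1.0, t))
    return 1.0 - t


print(grass_weight((0.0, 0.0, 1.0)))   # flat ground
print(grass_weight((1.0, 0.0, 0.0)))   # vertical cliff face
print(grass_weight((0.0, 0.0, -1.0)))  # underside of the island
```

Flat ground comes out as all grass, while a cliff face or the downward-facing underside comes out as all rock. The band in between gives a soft transition rather than a hard seam.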

The end result is an environment which, despite being partially automatically generated, winds up looking natural. Or as natural as a floating island in a world inhabited only by memories has any right to look.

Empathy is set for release on PC in 2017. Unreal Engine 4 is now free.

In this sponsored series, we’re looking at how game developers are taking advantage of Unreal Engine 4 to create a new generation of PC games. With thanks to Epic Games and Pixel Night.