For all the sound and fury surrounding Nvidia’s new GeForce RTX 2080 Ti and RTX 2080 graphics cards, it’s easy to forget that it’s not the only graphics card game in town. Yes, there is another, and this time we’re not talking about AMD. There is an ephemeral third player in the GPU game of thrones, and while Intel may not have the sort of discrete graphics cards to make you go weak at the PCIe-s right now, Nvidia’s new generation could pave the way for an Intel GPU that just might.

Yes, Intel and graphics cards. You probably never expected to see such seemingly disparate words in such close proximity ever again. Sure, Intel has 10% of the gaming GPU market if you take the Steam Hardware Survey as gospel – all those laptops with integrated graphics playing weird dating sims count for something – but when Intel poached AMD’s Raja Koduri last year it announced, to our surprise, that it was looking for his Core and Visual Computing Group to create ‘high-end discrete graphics solutions.’

And it recently doubled down on that by producing a SIGGRAPH sizzle reel for its gaming card, set to launch in 2020, promising to set its graphics free… “and that’s just the beginning.” Ominous, eh? Well, there’s the potential that it could be for both Nvidia and AMD if the big blue chip manufacturing machine can get its GPU act together.

With the end of 2018 looming large, 2020 doesn’t actually seem too far away now. And with Intel’s historic interest in graphics coming more from the compute side, and it seemingly wanting back in on the AI/deep learning dollar, there’s a good chance Intel’s discrete GPU could make some waves. And the new graphics cards being released by Nvidia are laying the foundations for that to happen.

The reason Intel is creating a discrete GPU again is almost entirely because of Nvidia. For a long time Intel was the AI / deep learning kingpin.

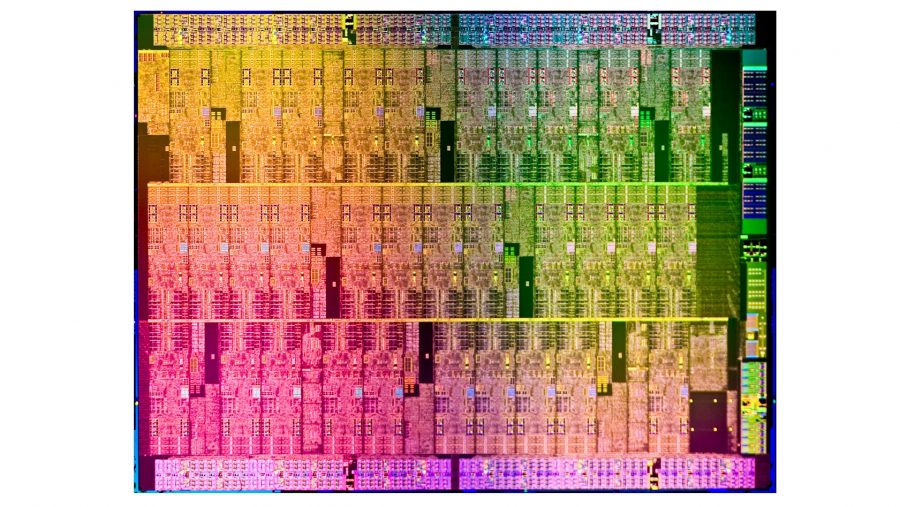

All the maths was done on big Intel CPUs and in its conferences its engineers demo’d ray tracing and machine learning all day long. Intel was showing off real-time ray tracing at its own developer events a decade ago or more… that is until Nvidia worked out its thousand-core GPUs could nail the parallelism necessary for such workloads far better than a measly 32-core server CPU.

So then Nvidia took over, and has pretty much been running the machine learning scene ever since. And it has therefore been raking in all those sweet, sweet AI dollars. Now Intel wants back in. It knows the market, knows what it needs to get there, and now it seemingly has the team to make that happen.

With Raja Koduri heading up the graphics engineering game, and Jim Keller overseeing the wider silicon design of Intel chips, there are a pair of engineers inside of Intel with a wealth of experience making advanced GPU and CPU cores.

But we’ve been here before…

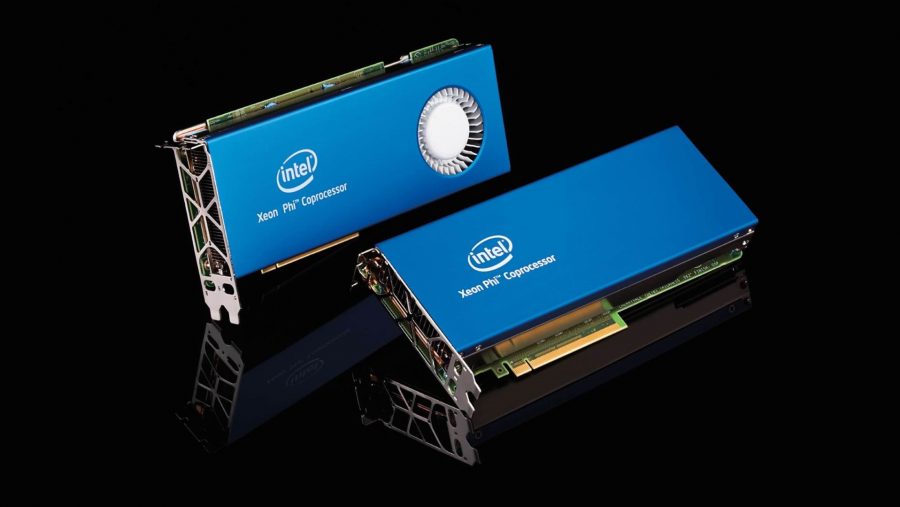

As soon as anyone mentions Intel making discrete graphics cards our first thought is, inevitably, ‘oh god… Larrabee.’ That was the codename for Intel’s last attempt at making a discrete GPU for the graphics market, and it was a remarkable failure. Such a failure it barely even made it out of the labs, let alone out to market.

Larrabee was announced around 2008, set for a launch in 2010. And if the 2018 announcement for launch in 2020 sounds too much like history repeating, that’s kinda our fear too.

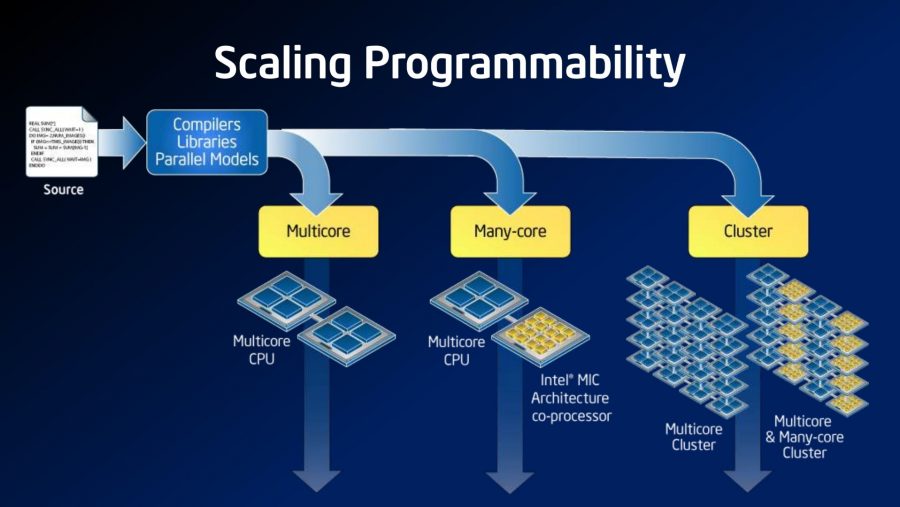

Essentially the Larrabee design was attempting to create a ‘many-core’ GPU using multiple x86 cores, roughly analogous to the standard processors at the heart of most of our PCs. The idea was that the many-core design would allow for the sort of parallelism that makes AMD and Nvidia’s GPUs so capable for compute tasks as well as graphics today.

Along with the pseudo Pentium cores built inside the Larrabee GPU there would be other fixed function logic blocks to do dedicated heavy lifting on the graphics side. That mix would potentially make for a powerful, flexible architecture that was capable of impressive feats of compute as well as fast-paced rasterized rendering.

And it all sounded great… yet Intel just couldn’t get it to work in any way that would make it competitive on the graphics side. So it just ended up, in the form of the Knights Ferry card, in the hands of researchers who tried to make use of its many-core compute chips but still couldn’t get it computing in a way that didn’t make them long for an Nvidia Tesla GPU to work on.

But the idea was sound and actually looks even more promising in today’s environment where GPU compute power is arguably becoming more important than raw rasterized rendering performance. Hell, Intel was even able to show off early real-time ray tracing demonstrations at the Intel Developer Forum in late 2009, using a version of Quake Wars.

Intel isn’t going to make an exact copy of Larrabee for its 2020 discrete card, however. It’s very unlikely to be using straight x86 cores in its design this time around, and is expected to be taking something akin to the existing Execution Units it uses for its integrated graphics and spinning them out into their own dedicated GPU.

But the overall approach might well be similar – make a many-core chip with a host of programmable cores that will give it the flexibility to cope with the demands of traditional rendering as well as the increased focus on GPU compute power. Chucking a bit of fixed function logic into the mix will help on the graphics front and, like Nvidia’s new RT and Tensor Cores, it could dedicate some specific silicon directly to workloads such as inference and ray tracing too.

As we said earlier, Intel has been showing off ray tracing in games for years, and while Wolfenstein in 2010 looks very different to Wolfenstein in 2018, it shows the understanding is there with the old Knights Ferry chips – those server parts derived from Larrabee – to undertake the task. The learnings are there within Intel; it just needs to take that experience and apply it to its new graphics architecture coming in 2020.

But Intel simply couldn’t be the company to drive compute-based graphics or ray tracing forward on its own. There was no way it could aim to create a new discrete GPU in 2020 that would have competitive traditional gaming performance and, at the same time, try to foster a compute-based/ray tracing ecosystem by itself.

With Microsoft and Nvidia taking that software/hardware step now, at a time when there is little GeForce competition – and therefore less pressure on the platform’s immediate success – that ecosystem will be far more mature if Intel does manage to get a new card out in 2020. I’ve got to keep saying ‘if’ because I still can’t shake the memory of all those IDF conferences and all those years waiting for the Larrabee cards to finally arrive in my office for testing. But, call me stupid (there’s a comments section for that precise reason), I can’t help but feel optimistic that it’s going to be different this time.

We can’t forget Larrabee, and you can bet your life that Intel hasn’t either. That failure will be in the back of the minds of Raja Koduri and every Intel engineer working on the project, and they won’t let the project collapse in the same manner as it did back in 2010.

With dedicated DirectX APIs, both for ray tracing and AI, and a target to aim for, Intel can tune its new GPU architecture for a future where there are more games taking advantage of a broader range of compute workloads built out of the graphics card. And the company was built on compute power. It may be stumbling at the moment, but you can bet Intel is going to come back fighting.

And what if Intel manages to get both its software and hardware stacks aligned – something it failed to do with Larrabee – and can create a discrete GPU with more compute oomph than Nvidia’s second-gen RTX cards? Even if it doesn’t have the traditional rendering power of the competition, the compute performance could balance it out and the GPU might still be faster overall at the combined, advanced hybrid rasterized/ray traced techniques.

And that could give it an edge in a world where more and more games are taking advantage of non-traditional graphics workloads.

Of course it’s all largely speculation at the moment, just a thought experiment born of years watching the graphics industry dance its merry dance. Apart from the SIGGRAPH sizzle, Intel hasn’t really said word one about what it has planned for its first discrete graphics card. We just know that it’s targeting gaming as well as deep learning, and these two fields are drawing ever closer together with Nvidia’s new generation of Turing GPUs.

But if Intel can nail the compute side, something which is definitely in the company’s wheelhouse, it might not matter if it isn’t a rasterized rendering monster. Only time will tell, but having a third player in the graphics game can only be a good thing, especially if it can become even remotely competitive.