Nvidia has created a neural network that generates images of human beings (and cats) realistic enough to pass the uncanny valley test. Computer-generated faces tend to fall short of photorealism, and hence make most people feel dreadfully uncomfortable, but Nvidia’s latest research paper offers up a new neural network architecture that might be able to trick our mortal minds.

Now, this research paper doesn’t make for easy reading unless you’re into generative adversarial networks (GANs), a type of machine learning neural network, but the results of the study speak for themselves. No, actually that would be terrifying. Thankfully they don’t speak… yet.

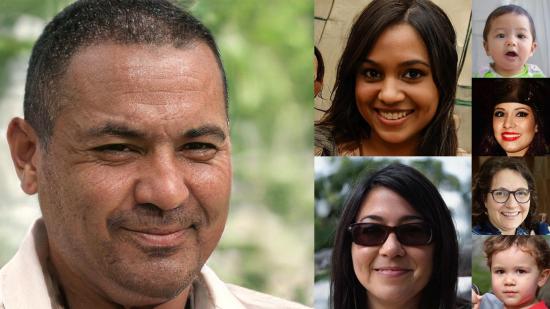

With a helping hand from a high-quality database of human faces, the researchers over at Nvidia were able to produce highly realistic images of people. Or at least they look like people. None of the beings in the pictures actually exist; they are simply what this neural network believes people should look like. Each one has been created by a learning system that utilises sets of “styles” to generate lifelike images with terrifying accuracy.

But while it is a dab hand at humans (us bipedal monkeys are an easy nut to crack), our furry little friends are a little tougher for AIs to get their chips around. Whether from the lack of a high-quality dataset or simply because cats are higher beings with greater intricacies, the researchers at Nvidia just couldn’t get cats quite right.

Some are pretty close, but others have become strange blobs of fur: lacking features, skeletons, or even faces.

So while the human side of the equation is terrifying, and rightfully so, at least we’ve found a new form of AI-proof cryptography. If you’re not sure whether a video or image is fake, simply look for the kitty stamp of approval. If the cat looks real, you’re good. If the cat looks like it’s come out the wrong side of the Enterprise’s transporter, you might just be looking at an AI-generated fake.

Here’s a link to the Nvidia research paper if you fancy a read. Be warned, it is a hefty PDF and existentially scary.