Update July 27, 2016: More details have come out about the Radeon Pro SSG, using a Fiji GPU (not Polaris) and a pair of Samsung 950 Pro SSDs inside it.


The Samsung SSDs are among the fastest PCIe drives you can buy right now, and the card uses a pair of the 512GB versions set up in RAID-0. They're connected to the AMD Fiji GPU via a PCIe bridge chip, and applications have to be specially coded against an AMD API to take full advantage of the link between the chip and its extended framebuffer.

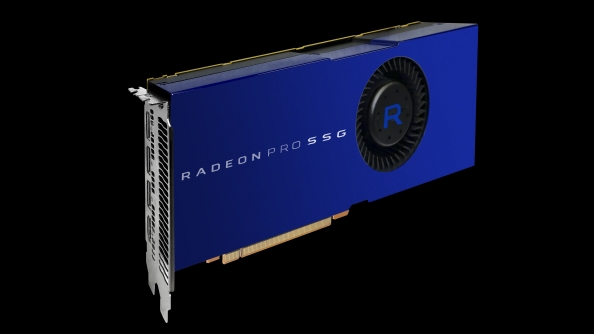

Original story July 26, 2016: Calling it a “disruptive advancement” for graphics, AMD is paving the way for an exponential increase in framebuffer capacities.

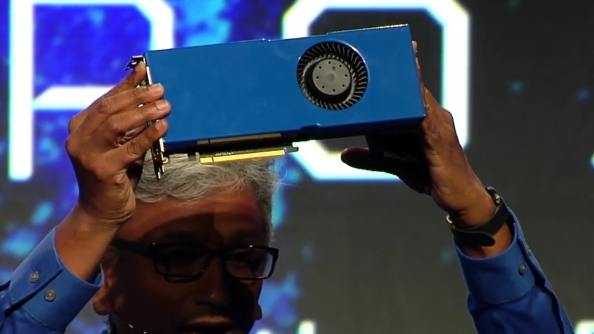

Computer graphics render monkeys are all over Anaheim right now, at the SIGGRAPH technology conference, where AMD have announced a revolutionary new pro-level graphics card: one with solid state storage directly interfaced with the GPU.

File this under ‘cool shit that will never hit our desktops’, but AMD’s new Solid State Graphics (SSG) technology has been designed to deliver an exponential increase in the memory capacity available to a professional graphics card. The new Radeon Pro SSG has a pair of PCIe 3.0 M.2 slots tied into its Fiji GPU, allowing the graphics chip to use them as an extra tier of storage. The maximum is reportedly a full 1TB of solid state storage.

And you thought that 12GB on the Nvidia Titan X was impressive…

Right now the largest pool available on an AMD GPU is 32GB – which is still pretty good – but when a pro card is having to deal with huge datasets, or 8K video, it will quickly exhaust that capacity. Then the GPU has to go and have a long conversation with the CPU, begging resources from the system, whether that be straight DRAM or even slower local storage, and all of that takes a whole lot of extra time.

With the new technology, once the GPU runs out of standard VRAM it goes straight to the SSG pool, bypassing the CPU entirely, which massively reduces the time it takes to reach that attached storage.

AMD used 8K video as its example on stage at SIGGRAPH: where a traditional card slows to a sedate 17fps playing back 8K footage, the Radeon Pro SSG could play the same footage back at 90fps and even skip through it as you would a locally stored 1080p video.

The Radeon Pro SSG still uses traditional graphics memory, though, because it offers far greater bandwidth than even the PCIe-attached storage on the SSG card. The most you’ll get out of a standard M.2 interface right now is around 2GB/s, whereas AMD’s RX 480 comes with 256GB/s of memory bandwidth and the new Nvidia Titan X offers 480GB/s.
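To put those figures side by side, this quick calculation divides each memory bandwidth by the roughly 2GB/s M.2 figure. The numbers are the round figures quoted above, not measured throughput, and the `times_faster` helper is just an illustrative name.

```python
# Compare the bandwidth figures quoted in the article (all in GB/s).
# These are round headline numbers, not benchmarks.
BANDWIDTH_GB_S = {
    "M.2 SSD (approx.)": 2,
    "Radeon RX 480 VRAM": 256,
    "Nvidia Titan X VRAM": 480,
}


def times_faster(fast: float, slow: float) -> float:
    """Ratio of two bandwidth figures."""
    return fast / slow


if __name__ == "__main__":
    ssd = BANDWIDTH_GB_S["M.2 SSD (approx.)"]
    for name, bw in BANDWIDTH_GB_S.items():
        print(f"{name}: {bw} GB/s, {times_faster(bw, ssd):.0f}x the M.2 figure")
```

That works out to the RX 480's memory being around 128 times faster than the M.2 link and the Titan X's around 240 times, which is why the SSD pool sits behind VRAM rather than replacing it.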

The new card is only at the development kit stage so far, with full availability coming next year. But if you absolutely have to get your mitts on one, you can sign your soul over to AMD and cut them a cheque for $10,000.

But the obvious question is: will it play Crysis?