Our Verdict

A year down the road and AMD’s most powerful consumer card is still well behind the competition it was originally aimed at. And we’re not just talking in straight game performance terms either: it’s also less efficient and far more expensive. Not a great combo...

The AMD RX Vega 64 is the top of the current Radeon gaming tech tree, but almost a year after its launch how does it stack up against the competition? Short answer… not great.

The RX Vega 64 is finally back in stock. The wholesale mining boom took practically all Radeon GPUs off the market, but with demand from the crypto farms dying they’re back, even if they’re still generally more expensive than a litigious Twitter spat with El Pres 45. But, after a ton of delays and a huge amount of speculation, leaked information and performance numbers (some accurate, some rather more apocryphal), was it worth the wait? To be honest, probably not.

Though I have to say this top-end Vega variant still represents everything I like about AMD. There’s something intrinsically lovable about everything it tries to do within the realms of PC hardware. It probably stems from my hardwired championing of the underdog – something almost endemic in British culture – from a desire to see it, as the Dave of Bible fame, going up against the Goliaths of the tech world.

AMD is always pushing fair, open practices, going above and beyond to find new techniques and technologies to push computing and computer graphics forward. It’s always looking to the horizon. Unfortunately, such a laudable approach doesn’t necessarily translate into hardware with the absolute best real-world gaming performance. At least, rarely right away at launch. Will things be different with the AMD Radeon RX Vega 64 graphics card?

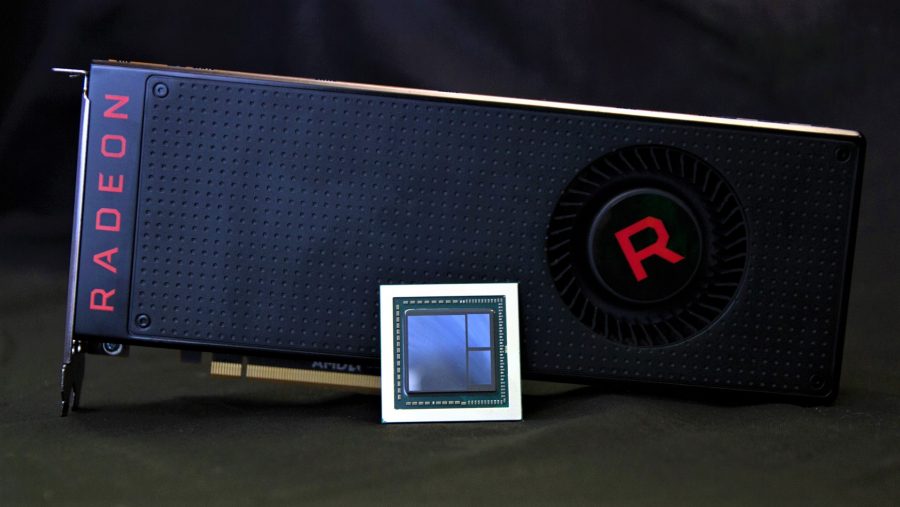

This is the first consumer-facing Vega, a card with a likely never-to-be-seen-again $499 (£450) MSRP, designed to go head-to-head with the Nvidia GTX 1080, and deliver team Radeon their first high-end graphics core since 2015’s R9 Fury X.

Its pricing has settled a little now the crypto market has died off a touch, but you’re still looking at $580 (£529) for the cheapest RX Vega 64 we’ve found. AMD’s slightly cut-down version, the $480 (£452) RX Vega 56, performs a little below this higher-end card. Performance-wise, that one is aimed at the GTX 1070, while the RX Vega 64 has the GTX 1080 in its sights.

But price-wise both cards are out of touch with the Nvidia cards they’re trying to go up against.

AMD RX Vega 64 architecture

The AMD Vega architecture represents what AMD called the most sweeping change its engineers had made to its GPU design in five years. That was when the first Graphics Core Next (GCN) chips hit the market, and this fifth generation of the GCN architecture marks the start of a new GPU era for the Radeon team.

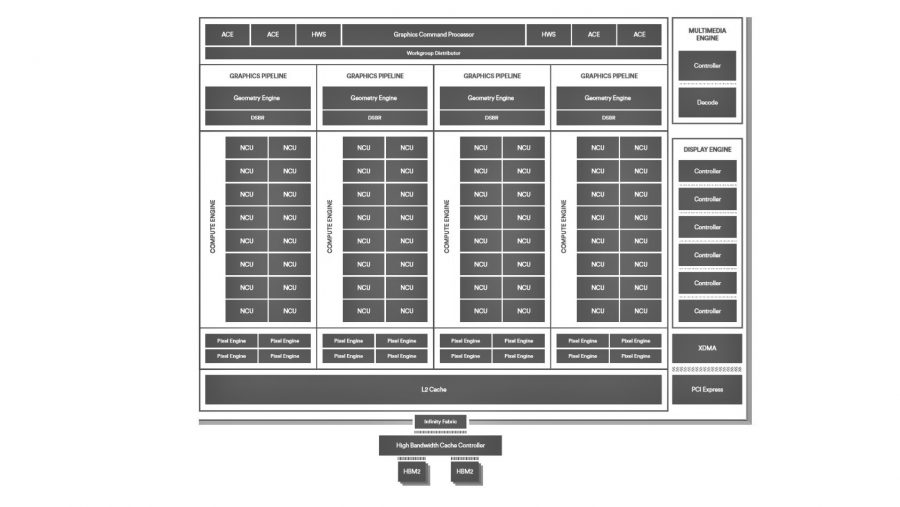

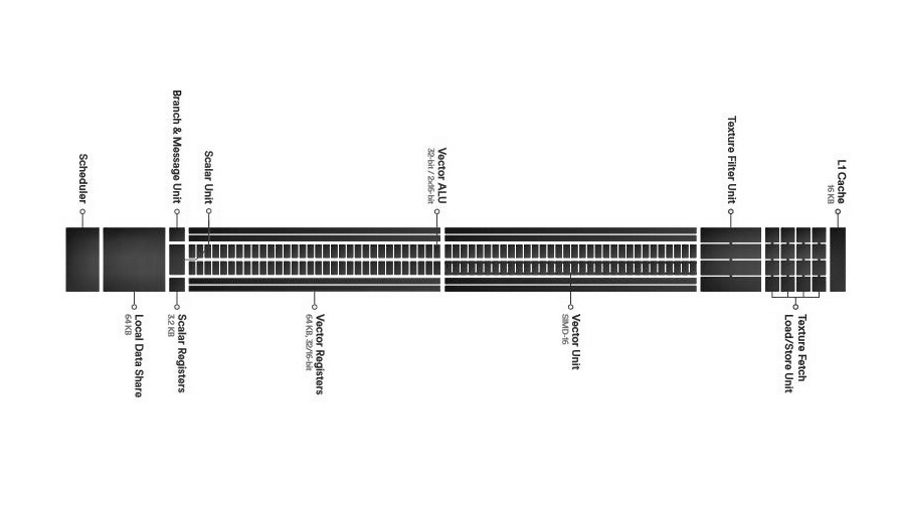

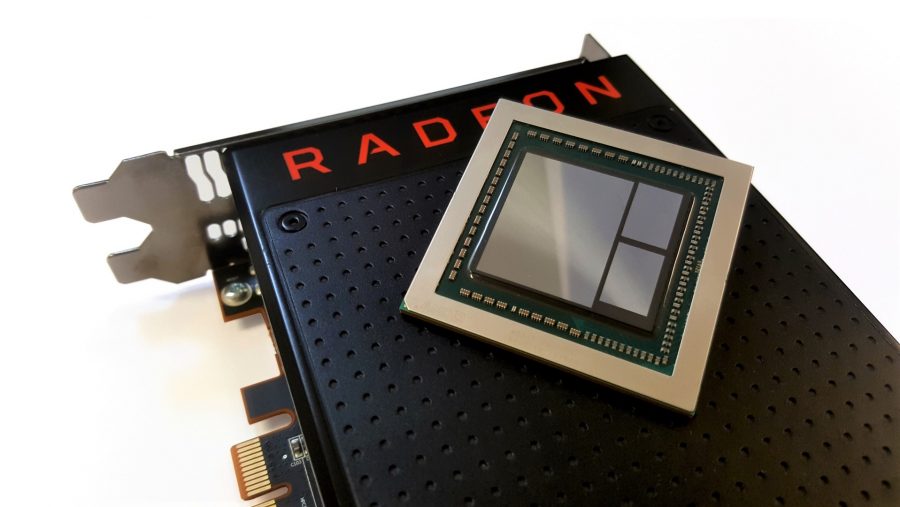

Fundamental to the Vega architecture, represented here by the inaugural Vega 10 GPU, is the hunt for higher graphics card clockspeeds. The very building blocks of the Vega 10, the compute units, have been redesigned from the ground up, almost literally. These next-generation compute units (NCU) have had their floorplans completely reworked to optimise and shorten the physical wiring of the connections inside them.

They also include high-speed, mini memory SRAMs, stolen from the Zen CPUs and optimised for use on a GPU. But that’s not the only way the graphics engineers have benefitted from a resurgent CPU design team; they’ve also nabbed the high-performance Infinity Fabric interconnect, which enables the discrete quad-core modules, used in Ryzen and Ryzen Threadripper processors, to talk to each other.

Vega uses the Infinity Fabric to connect the GPU core itself to the rest of the graphics logic in the package. The video acceleration blocks, the PCIe controller and the advanced memory controller, amongst others, are all connected via this high-speed interface. It also runs at its own clock frequency, which means it’s not affected by the dynamic scaling and high frequency of the GPU clock itself.

This introduction of Infinity Fabric support for all the different logic blocks makes for a very modular approach to the Vega architecture and that in turn means it will, in theory, be easy for AMD to make a host of different Vega configurations. It also means future GPU and APU designs (think the Ryzen/Vega-powered Raven Ridge) can incorporate pretty much any element of Vega they want to with minimal effort.

Each NCU still contains the same 64 individual GCN cores as the compute units in the original Graphics Core Next design, with the Vega 10 GPU capable of housing up to 4,096 of these li’l stream processors. But, with higher core clockspeeds and Vega’s other architectural improvements, they’re able to offer far greater performance than any previous GCN-based chip.
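The arithmetic behind those shader counts, and the theoretical throughput they imply, is simple enough to sketch. This is a back-of-envelope calculation rather than anything from AMD: it assumes 64 stream processors per NCU and that each stream processor retires one fused multiply-add (counted as two floating-point operations) per clock, and it plugs in the reference boost clocks from the spec table further down this review.

```python
# Back-of-envelope sketch: shader counts and theoretical peak FP32 throughput for Vega 10.
# Assumptions: 64 stream processors per NCU and one fused multiply-add (2 FLOPs)
# per stream processor per clock. Boost clocks are the reference figures quoted in this review.

SP_PER_NCU = 64


def stream_processors(ncus: int) -> int:
    """Total stream processors for a given number of next-gen compute units (NCUs)."""
    return ncus * SP_PER_NCU


def peak_fp32_tflops(sps: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 TFLOPS: stream processors x 2 FLOPs per FMA x clock."""
    return sps * 2 * clock_mhz * 1e6 / 1e12


cards = {"RX Vega 64": (64, 1546), "RX Vega 56": (56, 1471)}

for name, (ncus, boost_mhz) in cards.items():
    sps = stream_processors(ncus)
    print(f"{name}: {sps:,} stream processors, {peak_fp32_tflops(sps, boost_mhz):.1f} TFLOPS FP32")
```

That works out at roughly 12.7 TFLOPS for the Vega 64 and 10.5 TFLOPS for the Vega 56. As the benchmarks show, paper throughput like this doesn’t translate directly into frame rates.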

These NCUs are also capable of utilising a feature AMD is calling Rapid Packed Math, and which I’m calling Rapid Packed Maths, or RPM, to avoid any trouble with our US cousins. RPM essentially packs two 16-bit operations into the space of a single 32-bit one, so you get two mathematical instructions for the price of one, at the cost of reduced precision. Given many of today’s calculations, especially in the gaming space, don’t actually need 32-bit floating point precision (FP32), you can get away with using 16-bit data types (FP16). Game features such as lighting and HDR can use FP16 calculations, and RPM lets Vega mix fast FP16 work with full FP32 calculations as and when each is necessary.
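To make the “two for the price of one” idea a bit more concrete, here’s a minimal sketch that packs two 16-bit floats into the same 32 bits a single FP32 value would occupy, adds them lane by lane, and compares the result against FP32. It leans on Python’s `struct` module, whose `e` format code is IEEE 754 half precision. It only models the data layout and rounding; it says nothing about how Vega’s hardware actually schedules packed instructions, and the sample values are arbitrary.

```python
import struct


def pack_two_fp16(a: float, b: float) -> int:
    """Round two values to IEEE 754 half precision and pack them into one 32-bit word."""
    # '<2e' = two little-endian half-precision floats, 4 bytes in total
    raw = struct.pack("<2e", a, b)
    return struct.unpack("<I", raw)[0]


def unpack_two_fp16(word: int) -> tuple:
    """Recover the two half-precision lanes from a 32-bit word."""
    return struct.unpack("<2e", struct.pack("<I", word))


def packed_add(x: int, y: int) -> int:
    """Add two packed words lane by lane, rounding each result back to FP16."""
    x0, x1 = unpack_two_fp16(x)
    y0, y1 = unpack_two_fp16(y)
    return pack_two_fp16(x0 + y0, x1 + y1)


def round_fp32(v: float) -> float:
    """Round a Python float (a double) to single precision for comparison."""
    return struct.unpack("<f", struct.pack("<f", v))[0]


# Two arbitrary sample values share one 32-bit slot, just as RPM pairs FP16 operands.
a = pack_two_fp16(0.1, 1000.1)
b = pack_two_fp16(0.2, 0.3)
print("packed FP16 lanes:", unpack_two_fp16(packed_add(a, b)))
print("FP32 results:", round_fp32(0.1 + 0.2), round_fp32(1000.1 + 0.3))
```

The larger lane is the telling one: between 512 and 1,024, FP16 can only represent steps of 0.5, so the result snaps to the nearest half. That’s likely fine for something like a light’s intensity, but it’s exactly why developers have to choose where FP16 is safe to use.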

We’ve seen the first game supporting RPM, and other Vega-supported features like asynchronous compute, in Wolfenstein II: The New Colossus, which seems to be rather taken with the RX Vega architecture. The Far Cry 5 developers have also come out in support of RPM, and have made FC5 Vega-friendly too. The game’s 3D technical lead, Steve McAuley, has gone on record stating: “there’s been many occasions recently where I’ve been optimising shaders thinking that I really wish I had rapid packed math available to me right now. [It] means the game will run at a faster, higher frame rate, and a more stable frame rate as well, which will be great for gamers.”

The Vega architecture also incorporates a new geometry engine, capable of supporting both standard DirectX-based rendering and newer, more efficient rendering pipelines through primitive shader support. The revised pixel engine has been updated to cope with today’s high-resolution, high refresh rate displays, and AMD has doubled the on-die L2 cache available to the GPU. AMD has also freed the entire cache to be accessible by all the different logic blocks of the Vega 10 chip, and that’s because of the brand new memory setup of Vega.

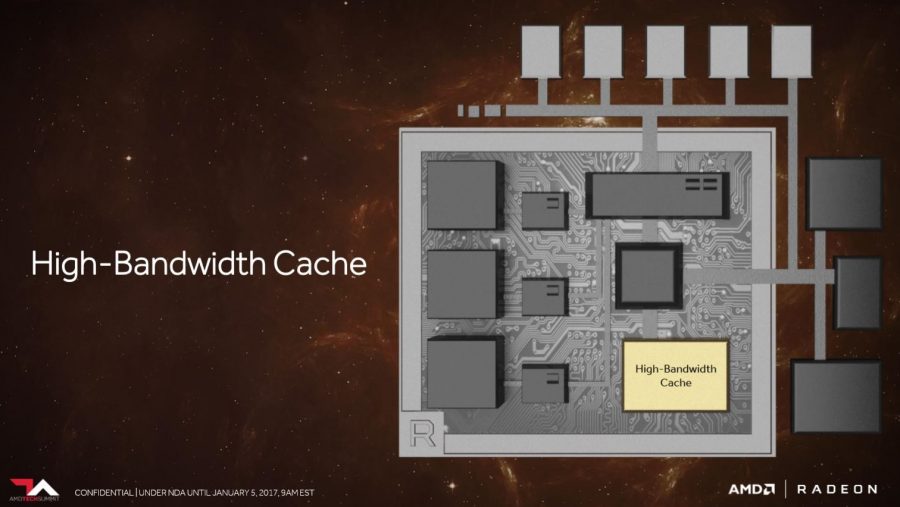

AMD’s Vega architecture uses the second generation of high-bandwidth memory (HBM2) from Hynix. HBM2 has higher data rates and larger capacities than the first-generation HBM used in AMD’s R9 Fury X cards. Stacks can now hold up to 8GB each, and the RX Vega 64 uses a pair of 4GB stacks sitting right beside the GPU die on the same package, making the memory both more efficient and smaller in footprint than standard graphics card designs. And that could make it a far more tantalising option for notebook GPUs.

Directly connected to the HBM2 is Vega’s new high-bandwidth cache and high-bandwidth cache controller (HBCC). At least in the short term, this is likely to be of greater use on the professional side of the graphics industry, but the HBCC’s ability to use a portion of the PC’s system memory as video memory should bear gaming fruit in the future. The idea is that games will see the extended pool as one large chunk of video memory, so if tomorrow’s open-world games start to require more than the Vega 64’s 8GB, you can chuck it some of your PC’s own memory to compensate for any shortfall.
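One way to picture the HBCC is as a page cache: the local HBM2 holds the most recently used pages, and everything else lives in system memory until it’s needed. The sketch below is a deliberately simplified toy model built on that assumption, using a least-recently-used (LRU) eviction policy. AMD hasn’t said its controller works this way; the `ToyHBCC` class is hypothetical and the capacities are made-up illustrative numbers.

```python
from collections import OrderedDict


class ToyHBCC:
    """Hypothetical toy model of a high-bandwidth cache: a small fast pool (standing in
    for HBM2) in front of a larger slow pool (standing in for system RAM), using LRU."""

    def __init__(self, local_pages: int):
        self.local_pages = local_pages      # capacity of the fast pool, in pages
        self.resident = OrderedDict()       # page id -> None, ordered oldest to newest
        self.hits = 0
        self.migrations = 0                 # pages copied in from system memory

    def access(self, page: int) -> bool:
        """Touch a page; returns True if it was already resident in local memory."""
        if page in self.resident:
            self.resident.move_to_end(page)  # mark as most recently used
            self.hits += 1
            return True
        self.migrations += 1
        if len(self.resident) >= self.local_pages:
            self.resident.popitem(last=False)  # evict the least recently used page
        self.resident[page] = None
        return False


# The "game" addresses pages 0-9, but only 8 fit in local memory at once.
cache = ToyHBCC(local_pages=8)
for page in [0, 1, 2, 3, 0, 1, 4, 5, 6, 7, 8, 9, 0, 1]:
    cache.access(page)
print(cache.hits, cache.migrations, list(cache.resident))  # -> 4 10 [4, 5, 6, 7, 8, 9, 0, 1]
```

The frequently reused pages 0 and 1 stay resident, while the cold pages 2 and 3 get pushed back out to system memory, which is the sort of behaviour that would let a game treat the combined pool as one big chunk of video memory.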

“You are no longer limited by the amount of graphics memory you have on the chip,” AMD’s Scott Wasson explains. “It’s only limited by the amount of memory or storage you attach to your system.”

The Vega architecture can scale right up to a maximum of 512TB of virtual address space for the graphics silicon. Nobody tell Chris Roberts or we won’t see Star Citizen this side of the 22nd Century.
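For a sense of scale, the quick check below converts that 512TB figure into address bits and compares it with the card’s own memory. It assumes “TB” means binary terabytes (2^40 bytes), as memory sizes usually are; that’s our assumption about how the figure is counted.

```python
# Quick sanity check on the 512TB virtual address space figure.
# Assumption: "TB" here means binary terabytes (2**40 bytes).
TIB = 2 ** 40
GIB = 2 ** 30

vega_va_bytes = 512 * TIB        # virtual address space quoted for the Vega architecture
card_vram_bytes = 8 * GIB        # the RX Vega 64's 8GB of on-board HBM2

# For an exact power of two, bit_length() - 1 gives the number of address bits needed.
address_bits = vega_va_bytes.bit_length() - 1

print(f"{address_bits}-bit virtual address space")
print(f"{vega_va_bytes // card_vram_bytes:,}x the card's on-board memory")
```

That’s a 49-bit address space, or 65,536 times the 8GB actually sitting on the card.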

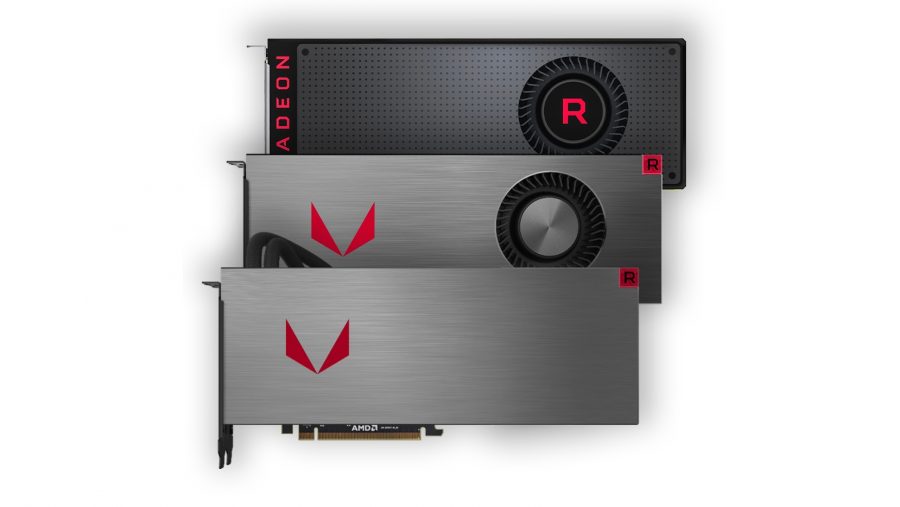

AMD RX Vega 64 specs

This version of the RX Vega 64 isn’t quite the top-end iteration of the consumer Vega GPU range; AMD has also created a liquid-chilled version with an all-in-one water-cooling unit attached to its metallic shroud. That card is almost identical in specification to this standard RX Vega 64, except that the wetware version gets a higher base clockspeed and a much higher boost clock too. And, y’know, it’s a bit less blowy as well, and pretty tough to find.

| | AMD RX Vega 64 | AMD RX Vega 56 | AMD RX 580 | Nvidia GTX 1080 Ti |
|---|---|---|---|---|
| GPU | AMD Vega 10 | AMD Vega 10 | AMD Polaris 20 | Nvidia GP102 |
| Architecture | GCN 5.0 | GCN 5.0 | GCN 4.0 | Pascal |
| Lithography | 14nm FinFET | 14nm FinFET | 14nm FinFET | 16nm FinFET |
| Transistors | 12.5bn | 12.5bn | 5.7bn | 12bn |
| Die size | 486mm² | 486mm² | 232mm² | 471mm² |
| Base clockspeed | 1,247MHz | 1,156MHz | 1,257MHz | 1,480MHz |
| Boost clockspeed | 1,546MHz | 1,471MHz | 1,340MHz | 1,582MHz |
| Stream processors | 4,096 | 3,584 | 2,304 | 3,584 |
| Texture units | 256 | 224 | 144 | 224 |
| Memory capacity | 8GB HBM2 | 8GB HBM2 | 8GB GDDR5 | 11GB GDDR5X |
| Memory bus | 2,048-bit | 2,048-bit | 256-bit | 352-bit |
| TDP | 295W | 210W | 185W | 250W |

Other than that, the two full-spec GPUs sing from the same spec sheet. That means they’re both sporting the same Vega 10 silicon, with 12.5bn transistors packed into a pretty massive 486mm² die built on a 14nm FinFET process. It’s worth noting that the 486mm² figure is for the GPU die alone: the two 4GB stacks of HBM2 sit right beside it on the same package, on a silicon interposer, rather than being arrayed around the outside of the GPU on the circuit board as is more traditional.
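Those two stacks are also where the 2,048-bit memory bus in the spec table comes from, since each HBM2 stack has a 1,024-bit interface. Peak bandwidth is just bus width multiplied by the per-pin data rate, divided by eight to get bytes. The per-pin rates in this sketch (1.89Gbps and 1.6Gbps for the two Vegas’ HBM2, 8Gbps for the RX 580’s GDDR5, 11Gbps for the GTX 1080 Ti’s GDDR5X) are reference figures we’re supplying as assumptions; they aren’t listed in the spec table.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width x per-pin data rate / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


HBM2_STACK_WIDTH = 1024                  # bits per HBM2 stack interface
vega_bus = 2 * HBM2_STACK_WIDTH          # two stacks -> 2,048-bit bus

# Bus widths from the spec table; per-pin data rates (Gbps) are assumed reference figures.
cards = {
    "RX Vega 64 (HBM2)": (vega_bus, 1.89),
    "RX Vega 56 (HBM2)": (vega_bus, 1.6),
    "RX 580 (GDDR5)": (256, 8.0),
    "GTX 1080 Ti (GDDR5X)": (352, 11.0),
}

for name, (bus, rate) in cards.items():
    print(f"{name}: {peak_bandwidth_gbs(bus, rate):.0f} GB/s")
```

On those assumptions the Vega 64 lands at about 484GB/s, roughly level with the GTX 1080 Ti; HBM2 simply gets there with a very wide, slow interface rather than a narrow, fast one.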

There is a limited edition version of the air-cooled RX Vega 64 with a similar metallic shroud to the liquid-chilled card, but that’s only available to those taking advantage of the somewhat bizarre Radeon Pack bundles.

The Vega 64 version of the Vega 10 GPU contains 64 of the new next-gen compute units (not just a clever name, eh?) and therefore 4,096 GCN cores. The lower-spec, nominally $399 partner card (continuing AMD’s classic rule of two for GPU releases) is the Vega 56, which has 56 NCUs and 3,584 GCN cores.

Though obviously that $399 price tag didn’t really stick around for long…

Historically, the second-tier version of a high-end Radeon card is arguably the more tempting one. It has the same basic GPU, with only a little performance-related hardware stripped out or turned off. That should mean it performs mighty close to the Vega 64, especially if you factor in the overclocking potential of the Vega 56 GPU.

AMD RX Vega 64 benchmarks

PCGamesN Test Rig: Intel Core i7 8700K, Asus ROG Strix Z370-F Gaming, 16GB Crucial Ballistix DDR4, Corsair HX1200i, Philips BDM3275

AMD RX Vega 64 performance

Well, this is where it all gets a bit awkward, isn’t it? We were told the AMD RX Vega 64 was being designed to go head-to-head with Nvidia’s GTX 1080, but, despite that now being the second-tier consumer GeForce card, it’s not a particularly favourable comparison. At least not across the full breadth of our current benchmarking suite.

We still test GPUs against legacy DirectX 11 titles, because we still play those games, and the last-gen API gets used in new games too. And in those legacy titles the Vega architecture is unable to really show its true worth, posting frame rates that are generally well behind the reference Founders Edition GTX 1080.

I’ll admit there was a definite sinking feeling as I started my benchmark testing with the DirectX 11 titles first. Thankfully, things take on a more positive countenance when you feed it the sorts of workloads the advanced architecture has been created for. With some DirectX 12 games, and the AMD-sponsored Far Cry 5, things do look a little better.

In Far Cry 5 the Vega 64 is actually the fastest of the comparable cards when it comes to 4K gaming. That changes as the resolution moves to 1440p or 1080p, but it still looks more positive than the rest of the results.

That’s a much more reassuring result than it was initially looking like we were going to get. What’s less reassuring, though, is when you start throwing overclocked versions of Nvidia’s finest GPUs into the picture. It’s probably a given that a factory-overclocked GTX 1080 will outperform a stock Vega 64, especially given the price premium of those cards. But, when an overclocked GTX 1070 is capable of beating AMD’s Vega 64 pride and joy, that’s a lot harder to swallow.

The Galax GTX 1070 EXOC SNPR is around the same price as the Vega 64’s suggested retail price and, in terms of DirectX 11 performance, it’s often faster than the new Radeon card. The modern APIs, however, do allow the Vega 10 GPU to show its genuine potential. You wouldn’t necessarily recommend someone picks up a souped-up GTX 1070 instead of the Vega 64, but equally GTX 1070 owners shouldn’t feel too despondent about their card’s relative performance just yet.

What’s got me a little less enthused, however, is the fact that AMD has needed to create a GPU with 5.3 billion more transistors, and a total platform power consumption that’s some 43% greater, in order to compete with the GTX 1080’s GP104 GPU. And don’t forget it was released over a year later and still finds itself behind the older silicon in many instances.
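It’s worth spelling out what that 43% means for efficiency. Performance per watt is relative performance divided by relative power, so a platform drawing 1.43x the power needs to be 1.43x as fast just to break even. The sketch below works that through: the 1.43 ratio is the platform power gap quoted above, while the performance ratios in the loop are hypothetical inputs for illustration, not our benchmark results.

```python
# Sketch: what a 43% platform power gap means for performance per watt.
# POWER_RATIO comes from the figure quoted in this review; the performance ratios
# in the loop are hypothetical inputs for illustration, not benchmark results.


def relative_perf_per_watt(perf_ratio: float, power_ratio: float) -> float:
    """Efficiency of card A relative to card B, from A/B performance and A/B power draw."""
    return perf_ratio / power_ratio


POWER_RATIO = 1.43                # Vega 64 platform draw relative to a GTX 1080 platform
TRANSISTOR_GAP_BN = 12.5 - 7.2    # Vega 10 vs GP104 transistor counts, in billions

for perf_ratio in (0.9, 1.0, 1.1):  # hypothetical Vega 64 / GTX 1080 performance ratios
    eff = relative_perf_per_watt(perf_ratio, POWER_RATIO)
    print(f"At {perf_ratio:.0%} of GTX 1080 speed: {eff:.0%} of its performance per watt")

print(f"Speed needed just to match GTX 1080 efficiency: {POWER_RATIO:.0%}")
print(f"Extra transistors: {TRANSISTOR_GAP_BN:.1f}bn")
```

Even at exact performance parity, the Vega 64 platform would deliver only around 70% of the GTX 1080’s performance per watt.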

That power draw is also a little concerning (though it didn’t keep the miners at bay), and we had a minor issue with our sample shutting down under load. The reference RX Vega 64 requires a pair of 8-pin PCIe power connectors, and our 1,200W Corsair PSU has individual PCIe power cables which split out into two of these 8-pin plugs. Match made in heaven, right? Nope. If you try to draw all the juice down one of these cables, our Vega card freaks out and reboots the system. It’s absolutely rock solid when using separate 8-pin cables, but it’s something worth considering if you’re in the market for a Vega GPU: your PSU has to be up to scratch.
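Here’s a rough look at why a single split cable might struggle. Under the PCI Express spec, the x16 slot can supply up to 75W and each 8-pin connector is rated for 150W. The sketch uses the 295W TDP from the spec table as the board’s draw, assumes the slot is fully loaded and the remainder splits evenly across the PSU cables in use, and treats one 8-pin connector’s 150W rating as a stand-in for what a single cable is meant to carry. Those are all simplifying assumptions, transient spikes above TDP aren’t modelled, and this is our rough reading of the problem rather than a diagnosis.

```python
SLOT_W = 75           # PCIe x16 slot power limit
EIGHT_PIN_W = 150     # rating per 8-pin PCIe power connector


def auxiliary_load_w(board_power_w: float, slot_share_w: float = SLOT_W) -> float:
    """Power that must come through the 8-pin plugs once the slot is maxed out."""
    return max(0.0, board_power_w - slot_share_w)


def per_cable_load_w(board_power_w: float, cables: int) -> float:
    """Auxiliary load per PSU cable, assuming it splits evenly across the cables used."""
    return auxiliary_load_w(board_power_w) / cables


BOARD_POWER_W = 295   # reference RX Vega 64 TDP from the spec table

for cables in (2, 1):
    load = per_cable_load_w(BOARD_POWER_W, cables)
    status = "within" if load <= EIGHT_PIN_W else "over"
    print(f"{cables} cable(s): {load:.0f}W per cable ({status} one 8-pin rating)")
```

With two separate cables each one carries around 110W; run everything down one cable and it’s closer to 220W, well over a single connector’s rating, which would be consistent with the shutdowns we saw.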

AMD RX Vega 64 verdict

The AMD Radeon RX Vega 64 is a tough card to recommend you buy… it was at launch and it still is now, even if pricing has started to level out. It’s a card that would’ve been great if it had been released a year earlier, but some twelve months down the line the Vega line is still struggling to show its potential in a market still dominated by cheaper Nvidia cards.

The classic AMD black blower cooler gets rather loud when it’s running at full chat, though is more than capable of keeping the chip chilled even when you’re overclocking the nuts off it.

Pricing is also still a real issue for AMD’s graphics card at this crunch point in PC gaming hardware, but even if we were talking about the MSRP of the Vega 64, and indeed the Vega 56, it would still be very difficult to recommend.

Even at that level the Vega is moving into what overclocked GTX 1080 cards should cost, and that’s a place where the best AMD GPU struggles to compete, even in DirectX 12 tests. Right now the frame rate performance isn’t there for the vast majority of games, at least not in terms of trying to compete against a reference-clocked GTX 1080. But that’s based on games that are currently installed on our rigs, based on legacy technologies and optimised for traditional GPU designs. The titles that really take advantage of the modern DirectX 12 and Vulkan APIs, however, show much more promise in terms of the relative Vega gaming performance. Just not against an equivalently priced GTX 1080…

Still, that Far Cry 5 performance gives me the tiniest modicum of hope that the classic AMD ‘fine wine’ approach might bear even more fruit if more developers jump on the RPM bandwagon, or start to take advantage of the open-world benefits HBCC might be capable of delivering. I’ll admit, that’s a relatively big ‘if’ considering the amount of pull Nvidia has in the gaming market as a whole, and the number of devs who may not be willing to knowingly ring-fence the best gaming performance just for AMD’s quarter of PC gaming’s installed user base.

But, with the latest console custom ASICs starting to use similar AMD technology to that which has now appeared in Vega, there’s a possibility more cross-platform developers will start to take advantage of these new features. If that’s the case then, even if AMD gamers only make up a quarter of PC gamers, the PS4 Pro and Xbox One X install base will add in a huge potential pool of users for devs to target.

To be fair, it’s also worth remembering AMD is still the underdog. It may have a similar level of R&D investment as Nvidia, but that’s based on it fighting on the twin CPU and GPU battlefronts. That means AMD has to pick its fights, and if it means trading legacy performance for long-term benefit, that could be the right choice… but, honestly, only time will tell.