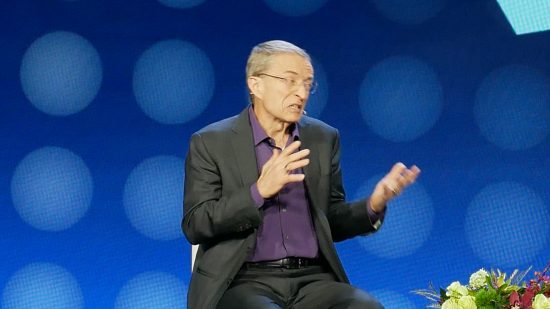

While I’ve mostly been seeking out the latest in gaming PC tech at CES 2024, the single biggest theme of the world’s biggest trade show so far has been AI: AI integrated into new Intel CPUs, AI workloads run on GPUs, a clearly AI-derived logo for the show, and even AI-driven speakers. Most of it is a little too “crypto enthusiast” for my liking, but while watching the CES keynote conversation with Intel CEO Pat Gelsinger, I was somewhat won over.

The key aspect of the CES discussion was what AI PCs are and why they matter, and the answers to both questions were well covered. In essence, the vision of an AI PC is one where AI workloads can be run locally in a satisfyingly quick time. Most such workloads can be run on a CPU and will run even quicker on a GPU. However, having dedicated accelerators – the NPUs in Intel’s new Core Ultra CPUs, for instance – allows for speedier processing of those tasks while working as efficiently as possible.

Whatever you think of AI-generated content, it’s here and very soon it will simply be commonplace for us all to quickly throw together an AI-generated image for a project, use AI video processing, and much more. As such, having a machine that is able to accelerate those workloads efficiently is something we’re all going to appreciate soon enough.

The thing that sold it for me a little more, though, was Gelsinger’s point about what he called the three laws: of physics, of economics, and of the land. With so much of the visible progress in AI so far being based on cloud services, there’s an inherent question mark over how long it takes for any of your own data that you want to inform your AI-generated content to be uploaded to, and processed by, that service (the law of physics).

Meanwhile, the law of economics deals with the fact that you have to pay for most of these cloud services. If you can feed in your own data and process it locally, you might be able to avoid the cost of an AI service. Then, finally, there’s Gelsinger’s law of the land, which he described as allowing users to avoid having to deal with the potential legal complications of using a third-party service. If you’re processing everything locally, you don’t need to worry about rights over the use of the data or anything of that nature.

Of course, there’s a big question over just how long it will take for this transition to local processing to happen and to what extent it will happen. As Gelsinger points out, as AI evolves so will its complexity. We might soon be able to run AI language models on our AI PCs, but AI-generated 3D models will take another level of processing power.

So, do you really need an Intel Core Ultra-powered PC or laptop – with a Copilot key, of course – right now? No, clearly not. For gaming, your priority should still be the usual speed/cost/power consumption factors that have ruled CPU and GPU buying trends for years. But, in a few years’ time, NPU-type processors are going to be thought of just like video encoders, ray tracing cores, or, heck, GPUs. The AI revolution is here and it’s maybe just a little bit less scary than I thought before.

If all this talk of AI is a little too much too soon for you, cleanse your senses with some good old-fashioned gaming hardware recommendations. Check out our best gaming keyboard, best gaming mouse, or best gaming monitor guides for a more tangible upgrade to your setup. Or, see our CES news feed for more updates from the show.