At the Game Developers Conference today, Microsoft and Nvidia are announcing a partnership that should bring real-time raytracing to PC games released this year. I’m banking on Metro Exodus being one hell of a high-end GPU benchmark test…

Think your GPU can handle it? Think again: even the cards on our list of the best graphics cards might struggle.

Microsoft and Nvidia have been working together on an industry-standard API designed specifically for interactive, real-time raytracing. The big M are adding DirectX Raytracing (DXR) to DirectX 12, and the green team are building on that to create RTX, a set of software and hardware algorithms designed to accelerate raytracing on the Nvidia Volta architecture.

“It’s something that those of us in the graphics industry have dreamed about for the better part of two decades,” explains Nvidia’s Tony Tomasi. “What we’re going to do is take our first big steps towards real-time ray-tracing in games.

“The combination of RTX and DXR should enable developers to make incredible leaps forward in terms of real-time raytracing and start the developers working on – what I call – the next twenty years worth of graphics… I would expect that you’ll see games shipped this year that have real-time raytracing using DXR and RTX.”

And Tomasi certainly has history within the graphics industry, having worked with both 3DFX and Nvidia, as well as Apple and SGI before that.

But why is raytracing seen as some sort of holy grail for computer graphics? Well, as Microsoft explain it, current 3D graphics are essentially built on lies. The current technique for displaying 3D on a flat screen is called rasterization, and it has done a great job for decades. But we’re reaching the point where we want more layers of realism, mostly around lighting, and those aren’t compatible with the way rasterization draws only what the camera can see.

What raytracing offers is realism. You’re essentially modelling the rays of light physically bouncing around a scene, and visual effects such as shadows, reflections, and ambient lighting are created almost as a byproduct of raytracing rather than as some sort of special effect.
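To make the "byproduct" idea concrete, here is a minimal sketch of a toy raytracer in Python. It has nothing to do with DXR or RTX themselves: the scene, the names (`trace`, `lit`, `hit_sphere`, `hit_ground`), and every number in it are assumptions made up for illustration. The point is that nobody writes a "shadow effect"; the shadow appears simply because a ray fired towards the light gets blocked.

```python
import math

# Hypothetical toy scene (all values are illustrative assumptions):
# a sphere floating above a flat ground plane at y = 0, lit by one point light.
CENTER, RADIUS = (0.0, 2.0, 3.0), 1.0
LIGHT = (0.0, 5.0, 3.0)

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))

def hit_sphere(origin, direction):
    """Distance along a unit-length ray to the sphere, or None on a miss."""
    oc = sub(origin, CENTER)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - RADIUS * RADIUS)
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 1e-4 else None

def hit_ground(origin, direction):
    """Distance along the ray to the plane y = 0, or None on a miss."""
    if abs(direction[1]) < 1e-9:
        return None
    t = -origin[1] / direction[1]
    return t if t > 1e-4 else None

def lit(point, normal):
    """Brightness at a surface point: fire a shadow ray towards the light."""
    to_light = sub(LIGHT, point)
    dist = math.sqrt(dot(to_light, to_light))
    direction = scale(to_light, 1.0 / dist)
    blocker = hit_sphere(point, direction)
    if blocker is not None and blocker < dist:
        return 0.0  # something sits between the point and the light
    return max(0.0, dot(normal, direction))  # simple diffuse falloff

def trace(origin, direction):
    """Brightness seen along one camera ray (0.0 is black)."""
    direction = normalize(direction)
    t_sphere = hit_sphere(origin, direction)
    t_ground = hit_ground(origin, direction)
    if t_sphere is not None and (t_ground is None or t_sphere < t_ground):
        point = add(origin, scale(direction, t_sphere))
        return lit(point, scale(sub(point, CENTER), 1.0 / RADIUS))
    if t_ground is not None:
        point = add(origin, scale(direction, t_ground))
        return lit(point, (0.0, 1.0, 0.0))
    return 0.0  # the ray escaped the scene

camera = (0.0, 1.0, -2.0)
print(trace(camera, (0.0, -1.0, 5.0)))  # ground directly beneath the sphere
print(trace(camera, (4.0, -1.0, 5.0)))  # ground well off to one side
```

The first ray lands on the ground under the sphere and comes back as 0.0, because its shadow ray hits the sphere on the way to the light. The second lands off to one side and comes back with a positive brightness. A real renderer would fire further rays from each hit point for reflections and indirect light, which is where the cost starts to climb.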

“You can achieve incredible levels of fidelity,” explains Tomasi. “You can simulate indirect lighting, light bouncing, you can do refraction, shadows are done properly. Essentially all of the physical behaviours of light are modelled.

“The disadvantage for this kind of rendering historically is that it just hasn’t been possible to do in real-time, at least certainly not practical.”

Tomasi leaves the implicit “until now…” floating unsaid in the air.

While Microsoft’s DXR is capable of running on any DirectX 12 compatible graphics card, Nvidia’s RTX is only enabled on Volta “and forward” GPUs.

“DXR, Microsoft’s raytracing API,” says Tomasi, “is basically built on top of DirectX… and I expect GPUs that are capable of DX12 class compute should be capable of running DXR.”

Which is good news for AMD fans worried that this MS / Nvidia supergroup might push Radeon GPUs out of the future of compute-based graphics rendering. The red team’s GPUs have long focused on GPU compute, and with AMD’s work on their own Instinct range of compute accelerators there’s a good chance future Radeon cards will be capable of DXR-based effects.

AMD are also committed to helping shape the future of DirectX Raytracing, and have released their own statement on the technology.

“AMD is collaborating with Microsoft to help define, refine and support the future of DirectX12 and ray tracing,” they say. “AMD remains at the forefront of new programming model and application programming interface (API) innovation based on a forward-looking, system-level foundation for graphics programming. We’re looking forward to discussing with game developers their ideas and feedback related to PC-based ray tracing techniques for image quality, effects opportunities, and performance.”

For the RTX goodies though you’re going to have to be a card-carrying GeForce gamer. And, as it’s only accessible via the Volta architecture, that means you’ve got to spend at least $3,000 on a Titan V card. Which makes us think gaming versions of the Volta architecture can’t be too far off now.

Quite what the Volta GPU has inside it that can leverage the demanding RTX feature set, Nvidia won’t say. “There’s definitely functionality in Volta that accelerates raytracing,” Tomasi told us, “but I can’t comment on what it is.”

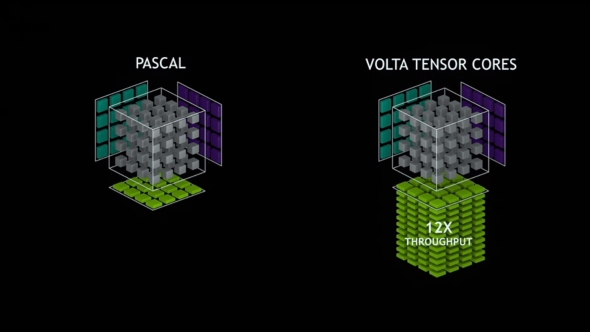

But the AI-happy Tensor cores present inside the Volta chips certainly have something to do with it as Tomasi explains:

“The way raytracing works is you shoot rays into a scene and – I’m going to geek out on you a little bit for a second – if you look at a typical HD resolution frame there’s about two million pixels in it, and a game typically wants to run at about 60 frames per second, and you typically need multiple, many, many rays per pixel. So you’re talking about needing hundreds of millions, to billions, of rays per second so you can get many dozens, maybe even hundreds, of rays per pixel.

“Film, for example, uses many hundreds, sometimes thousands, of rays per pixel. The challenge with that, particularly for games but even for offline rendering, is the more rays you shoot, the more time it takes, and that becomes computationally very expensive.”
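Tomasi’s back-of-the-envelope maths is easy to reproduce. The sketch below assumes "HD" means 1920×1080, which is our reading of his "about two million pixels", and uses a hypothetical helper called `rays_per_second`; the rays-per-pixel counts are just sample values spanning the game and film ranges he mentions.

```python
def rays_per_second(width, height, fps, rays_per_pixel):
    """Rays needed each second to hit a target frame rate."""
    return width * height * fps * rays_per_pixel

# Assumption: 1920x1080 stands in for "a typical HD resolution frame".
WIDTH, HEIGHT, FPS = 1920, 1080, 60

for rays_per_pixel in (1, 10, 100, 1000):
    total = rays_per_second(WIDTH, HEIGHT, FPS, rays_per_pixel)
    print(f"{rays_per_pixel:>5} rays/pixel -> {total:,} rays/second")
```

At a single ray per pixel that is already 124,416,000 rays every second, and at 100 rays per pixel it is more than 12 billion, which is why cutting the number of rays per pixel matters so much.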

Nvidia’s new Tensor cores are able to bring their AI power to bear on this problem using a technique called de-noising.

“It’s also called reconstruction,” says Tomasi. “What it does is it uses fewer rays, and very intelligent filters or processing, to essentially reconstruct the final picture or pixel. Tensor cores have been used to create, what we call, an AI de-noiser.

“Using artificial intelligence we can train a neural network to reconstruct an image using fewer samples, so in fact Tensor cores can be used to drive this AI denoiser which can produce a much higher quality image using fewer samples. And that’s one of the key components that helps to unleash the capability of real-time raytracing.”
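Nvidia hasn’t detailed how its AI denoiser works, so the sketch below is not that. It swaps in the crudest possible reconstruction filter, a plain neighbour-averaging box blur, just to show the principle of trading rays for filtering. The flat grey test "scene", the function names, and the sample counts are all assumptions for illustration.

```python
import random

def render_noisy(width, height, samples, rng):
    """Estimate each pixel from a few random samples.

    Assumption: the true brightness is 0.5 everywhere, and each sample is a
    ray that either reaches the light (1) or does not (0) with equal odds.
    """
    return [
        [sum(rng.random() < 0.5 for _ in range(samples)) / samples
         for _ in range(width)]
        for _ in range(height)
    ]

def box_filter(image, radius=1):
    """Replace each pixel with the average of its neighbourhood."""
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total, count = 0.0, 0
            for ny in range(max(0, y - radius), min(height, y + radius + 1)):
                for nx in range(max(0, x - radius), min(width, x + radius + 1)):
                    total += image[ny][nx]
                    count += 1
            out[y][x] = total / count
    return out

def mean_abs_error(image, truth=0.5):
    """Average distance of every pixel from the known true brightness."""
    pixels = [value for row in image for value in row]
    return sum(abs(value - truth) for value in pixels) / len(pixels)

rng = random.Random(0)
noisy = render_noisy(32, 32, samples=4, rng=rng)
filtered = box_filter(noisy)
print("error with 4 samples/pixel:", mean_abs_error(noisy))
print("error after filtering:     ", mean_abs_error(filtered))
```

On a flat image like this the blur wins easily, because every neighbour is a sample of the same value. Real frames have edges and fine detail that a naive blur would smear, which is the gap a trained neural network is meant to close.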

Before you get too excited about ultra-realistically rendered games hitting our screens before the end of the year, we may need to temper your expectations a little. Raytracing isn’t going to replace rasterization overnight, and not simply because the existing consoles don’t have the compute capabilities to deal with it.

Full-spectrum real-time raytracing is still well beyond the power of our graphics hardware, so to begin with it’s only going to exist as a supplemental effects-based feature for games. You’ll see raytraced shadows, ambient occlusion, and reflections being used initially, with EA, Epic, Remedy, and 4A Games all lining up to show off their raytracing goodies over GDC this week.

And with DXR and RTX working on the Unreal, Unity, and Frostbite engines there’s a pretty broad set of potential devs and publishers that could start raytracing with their next games.

“These will be the ‘turn it up to 11’ capabilities,” says Tomasi. “But what I would expect is that over time, just as happened with pixel shaders – early on it was an advanced feature – but of course today you can’t find a game that doesn’t use pixel shaders.

“And what I would expect in the future, some time out there, you won’t find a game that isn’t rendering primarily using raytracing. The game industry will go much like the film industry where 20 years ago raytracing was the exception for the film industry and today essentially every film is raytraced.”