The latest generation of GeForce graphics cards runs on the new Nvidia Turing GPU architecture. It’s the Volta tech, with all its AI chops, plus a whole lot of dedicated ray-tracing goodness thrown in for future high-fidelity fun. When Microsoft can get its October Update working properly, that is…

We had originally thought Nvidia would translate the Volta architecture down into a more consumer-friendly form, but Nvidia has moved on, producing a new set of discrete GPUs for our gaming graphics cards under the Turing name. But that doesn’t mean this is a complete departure from the Volta design, as Nvidia’s Tom Petersen explained at the RTX 20-series unveiling.

“A lot of Volta’s architecture is in Turing,” he told us. “Volta is Pascal Plus, Pascal was Maxwell Plus, and so we don’t throw out the shader and start over, we are always refining. So it’s more accurate to say that Turing is Volta Plus… a bunch of stuff.”

And there is a whole lot of new stuff going into the Turing GPUs, and not just the headline-grabbing real-time ray tracing tech that has been plastered all over the intermawebs since it was first demonstrated.

Vital stats

Nvidia Turing release date

The first Turing cards were announced at SIGGRAPH in August 2018 under the Quadro RTX name, with the GeForce RTX cards unveiled at Gamescom shortly afterwards. But the consumer cards have launched first, with the RTX 2080 and RTX 2080 Ti on sale in September.

Nvidia Turing specs

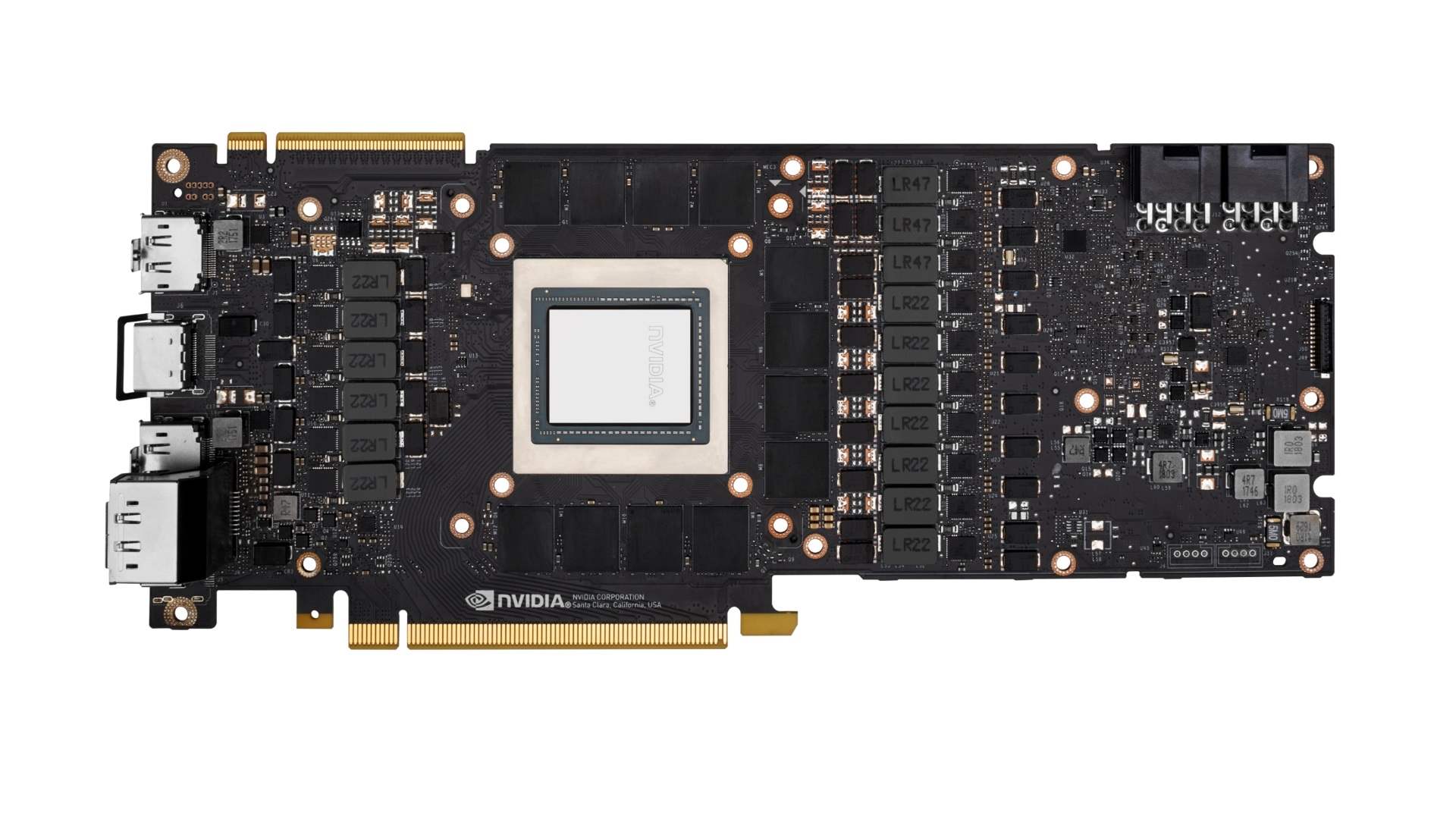

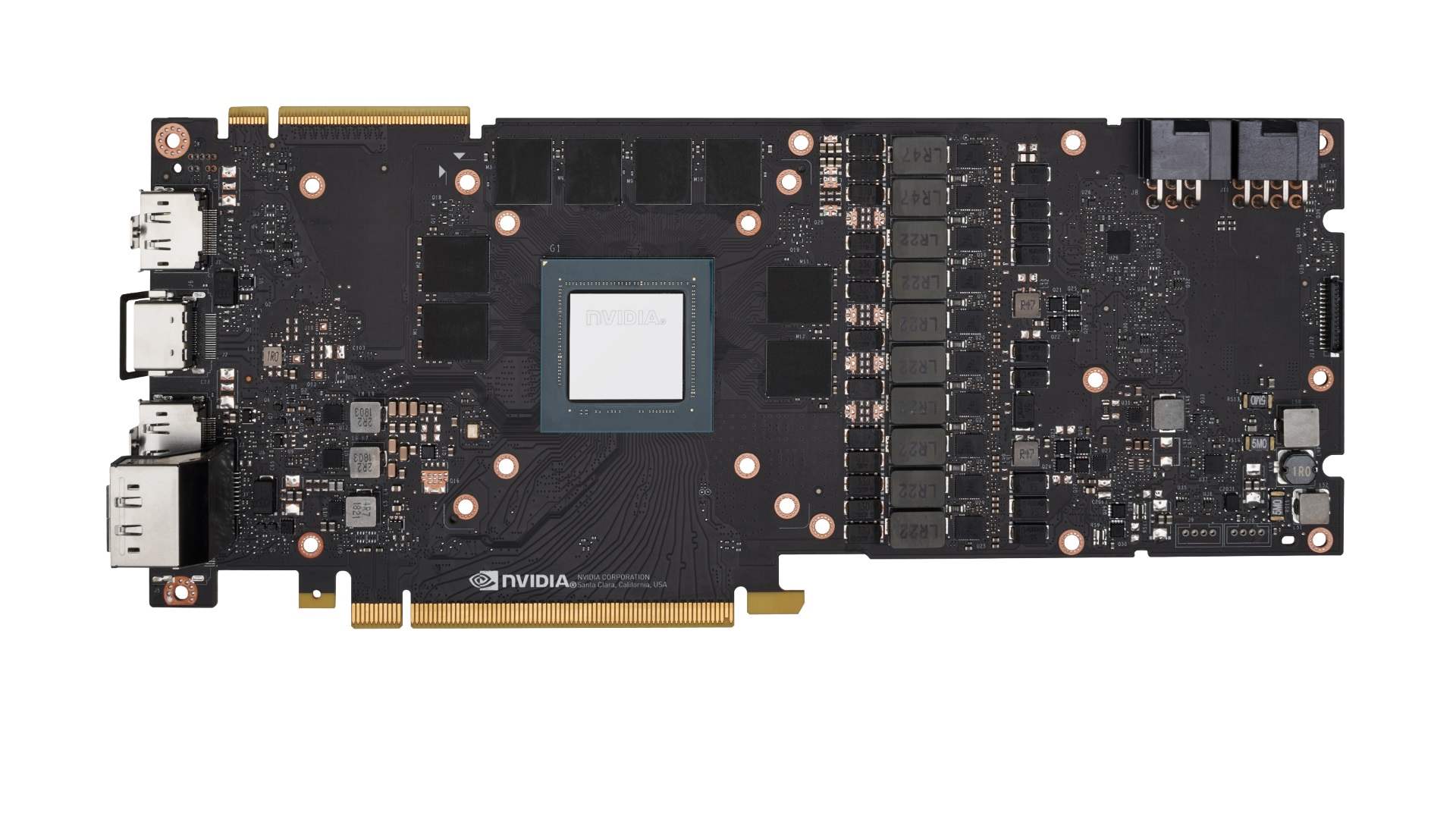

There are three separate GPUs in the first wave of Turing chips. The full top-spec TU102 GPU has 72 SMs with 4,608 CUDA cores inside it. It’s also packing 576 AI-centric Tensor Cores and 72 ray tracing RT Cores. The TU104 and TU106 GPUs fit in underneath it, with 3,072 and 2,304 CUDA cores respectively. You also get GDDR6 memory support too.

Nvidia Turing architecture

The Turing GPUs have been designed with a focus on compute-based rendering, and as such they combine traditional rasterized rendering hardware with AI and ray tracing-focused silicon. The individual SMs of Turing have also been redesigned to offer a 50% performance improvement over the Pascal SM design.

Nvidia Turing price

If you want the absolute pinnacle of Turing hardware then the Quadro RTX 8000 is the one for you, though it will set you back $10,000. That makes the $1,199 for the GeForce RTX 2080 Ti Founder’s Edition card seem like a bargain. The cheapest of the announced Turing GPUs, the RTX 2070, costs $499 for the reference-clocked card.

Nvidia Turing performance

We now have a full idea of how the Turing GPUs perform in traditional rendering with the RTX 2080 and RTX 2080 Ti benchmarks shown in our reviews of the two cards. But the real-time ray tracing and AI potential is still yet to be seen in full.

Nvidia Turing release date

Nvidia’s first Turing-based graphics cards, the RTX 2080 Ti and RTX 2080, launched on September 20, 2018, though general availability for the top card was delayed until a week later. The professional-level Quadro RTX cards, with the full-spec Turing TU102 and TU104 GPUs, will become available later in Q4 of 2018.

The new GPU architecture was first announced at SIGGRAPH, where the Quadro RTX cards had their first outing, but it was at Gamescom later on August 20 that Jen-Hsun Huang showed off the first GeForce RTX graphics cards on stage at a pre-show event.

A third Turing graphics card has also been announced, the RTX 2070, though that is set to be released later than the two flagship GPUs, with an October 2018 launch window now confirmed. We expect it will likely be around the end of the month, probably on October 20 if Nvidia sticks to the recent traditions of announcement and release dates.

Interestingly, Nvidia has launched its consumer Turing GPUs with its own overclocked Founder’s Edition cards, but is looking to its graphics card partners to release the reference-clocked versions on the same day. This is the first time in recent memory that Nvidia has launched a new generation of graphics cards along with its partners.

Nvidia Turing specs

The specs sheet for Turing makes for fascinating reading. These are monster GPUs, with even the third-tier TU106 chip measuring some 445mm². That’s only a tiny bit smaller than the top-end GP102 chip that powered the previous generation’s GTX 1080 Ti and Titan cards. The TU102 at the other end of the Turing scale measures 754mm² and packs in nearly 19 billion 12nm transistors.

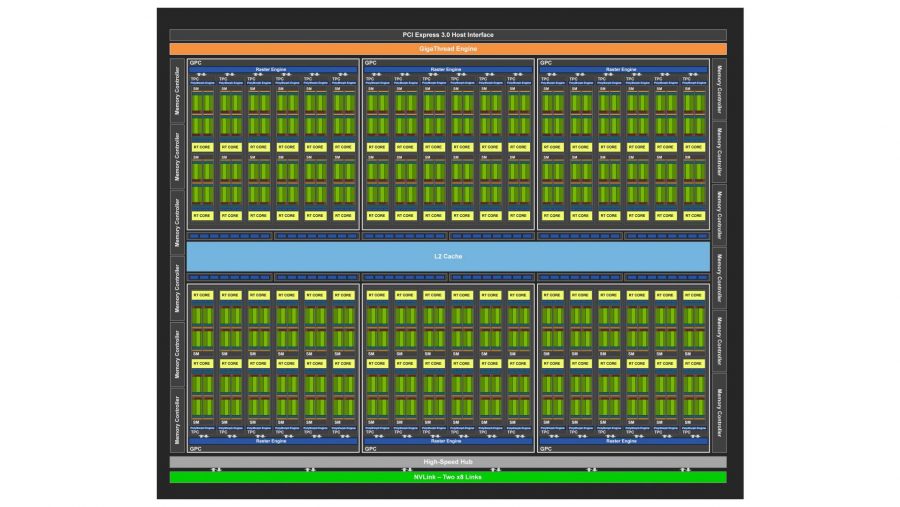

The TU102 has 72 streaming multiprocessors (SMs) with 64 FP32 cores and 64 INT32 cores inside each, in total making up a full 4,608 CUDA cores across six separate graphics processing clusters (GPCs).

| | Quadro RTX 8000 | RTX 2080 Ti | RTX 2080 | RTX 2070 | GTX 1080 Ti |
| --- | --- | --- | --- | --- | --- |
| GPU | TU102 | TU102 | TU104 | TU106 | GP102 |
| GPC | 6 | 6 | 6 | 3 | 6 |
| SM | 72 | 68 | 46 | 36 | 28 |
| CUDA Cores | 4608 | 4352 | 2944 | 2304 | 3584 |
| Tensor Cores | 576 | 544 | 368 | 288 | NA |
| RT Cores | 72 | 68 | 46 | 36 | NA |
| Memory | 48GB GDDR6 | 11GB GDDR6 | 8GB GDDR6 | 8GB GDDR6 | 11GB GDDR5X |
| Memory bus | 384-bit | 352-bit | 256-bit | 256-bit | 352-bit |
| Memory speed | 14Gbps | 14Gbps | 14Gbps | 14Gbps | 11Gbps |
| ROPs | 96 | 88 | 64 | 64 | 88 |
| Texture Units | 288 | 272 | 184 | 144 | 224 |
| TDP | 260W | 260W | 225W | 185W | 250W |
| Transistors | 18.6bn | 18.6bn | 13.6bn | 10.8bn | 12bn |
| Lithography | 12nm FFN | 12nm FFN | 12nm FFN | 12nm FFN | 16nm |
| Die Size | 754mm² | 754mm² | 545mm² | 445mm² | 471mm² |

Each SM also has a single RT Core and eight Tensor Cores. These are the dedicated silicon blocks assigned to calculating real-time ray tracing and AI-specific workloads. That means the full TU102 chip contains 72 RT Cores and 576 Tensor Cores.

We keep referencing the “full TU102 chip” because the RTX 2080 Ti doesn’t actually come with the full-spec GPU. Four of its 72 SMs are disabled, leaving 68, so the top GeForce RTX card has 256 fewer CUDA cores than the Quadro RTX 6000 and RTX 8000 cards, which do use the full-fat TU102.

It’s a similar situation with the TU104 GPU used in both the Quadro RTX 5000 and GeForce RTX 2080. The full chip has 48 SMs and 3,072 CUDA cores, but there are two SMs shaved off the RTX 2080’s GPU, so it has 128 fewer cores inside.

The TU106, however, is only showing up in the RTX 2070 so far, and it is sporting the full GPU with nothing missing. It houses 36 SMs with 2,304 CUDA cores, 288 Tensor Cores, and 36 RT Cores.
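The unit counts quoted for each card all follow from the per-SM figures given above (64 CUDA cores, eight Tensor Cores, and one RT Core per SM). As a quick sanity check, here is a minimal Python sketch that derives them from the enabled SM counts; the function and dictionary names are ours, purely for illustration.

```python
# Per-SM resources as described in the article: 64 CUDA (FP32) cores,
# eight Tensor Cores and one RT Core in every Turing SM.
CUDA_PER_SM = 64
TENSOR_PER_SM = 8
RT_PER_SM = 1

# Enabled SM counts quoted in the text and the spec table.
ENABLED_SMS = {
    "Quadro RTX 8000 (full TU102)": 72,
    "RTX 2080 Ti (TU102)": 68,
    "RTX 2080 (TU104)": 46,
    "RTX 2070 (full TU106)": 36,
}


def unit_counts(sm_count):
    """Return (CUDA cores, Tensor Cores, RT Cores) for a given SM count."""
    return (
        sm_count * CUDA_PER_SM,
        sm_count * TENSOR_PER_SM,
        sm_count * RT_PER_SM,
    )


for card, sms in ENABLED_SMS.items():
    cuda, tensor, rt = unit_counts(sms)
    print(f"{card}: {sms} SMs -> {cuda} CUDA, {tensor} Tensor, {rt} RT")
```

Plugging in 48 SMs for the full TU104 gives the 3,072 CUDA cores mentioned above, 128 more than the RTX 2080 ships with.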

While the SM design is identical for all three Turing GPUs announced so far, the actual makeup of the chips varies considerably. Originally we expected the RTX 2080 and RTX 2070 to share the same GPU, only with a few cuts made to create the third-tier option. But the TU104 and TU106 chips are rather different in their design, as are the TU102 and TU104.

In fact the TU106 is more similar to the TU102, only effectively sliced in half. The TU102 and TU106 GPUs have 12 SMs in each GPC, while the TU104 has only eight in its design. This means the RTX 2080 Ti and RTX 2080 chips both come with six GPCs, but with fewer SMs in the smaller chip.

But all three Turing GPUs retain support for the new GDDR6 memory, with 11GB of 14Gbps GDDR6 and a 352-bit memory bus going into the RTX 2080 Ti, and 8GB of 14Gbps GDDR6, and 256-bit memory buses, going into both the RTX 2080 and RTX 2070 cards.
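To put those memory figures in context, peak memory bandwidth is just the per-pin data rate multiplied by the bus width, divided by eight to convert bits to bytes. The sketch below applies that standard formula to the speeds and bus widths quoted in the spec table; the helper name is ours.

```python
def peak_bandwidth_gb_s(data_rate_gbps, bus_width_bits):
    """Peak bandwidth in GB/s: per-pin rate (Gbit/s) x bus pins / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


# (memory speed in Gbps, bus width in bits) as listed in the spec table.
CARDS = {
    "RTX 2080 Ti": (14, 352),
    "RTX 2080": (14, 256),
    "RTX 2070": (14, 256),
    "GTX 1080 Ti": (11, 352),
}

for card, (rate, width) in CARDS.items():
    print(f"{card}: {peak_bandwidth_gb_s(rate, width):.0f} GB/s")
```

That works out to 616GB/s for the RTX 2080 Ti against 484GB/s for the GTX 1080 Ti on the same 352-bit bus, with the gain coming entirely from the faster GDDR6.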

Nvidia Turing architecture

There are some key architectural differences going into the new Nvidia Turing GPUs that separate them out from the previous Pascal generation of consumer graphics cards. They are more akin to the Volta generation of GPUs, but with important differences even compared with those.

As well as the real-time ray tracing abilities of the new chips there are a host of new rendering techniques to speed gaming performance as well as some new methods for putting the AI power of the Nvidia Tensor Cores to good use in PC gaming. Not all of the potential of these capabilities is going to be realised right away, which could give this generation of GPUs the sort of fine wine approach normally attributed to AMD’s graphics cards.

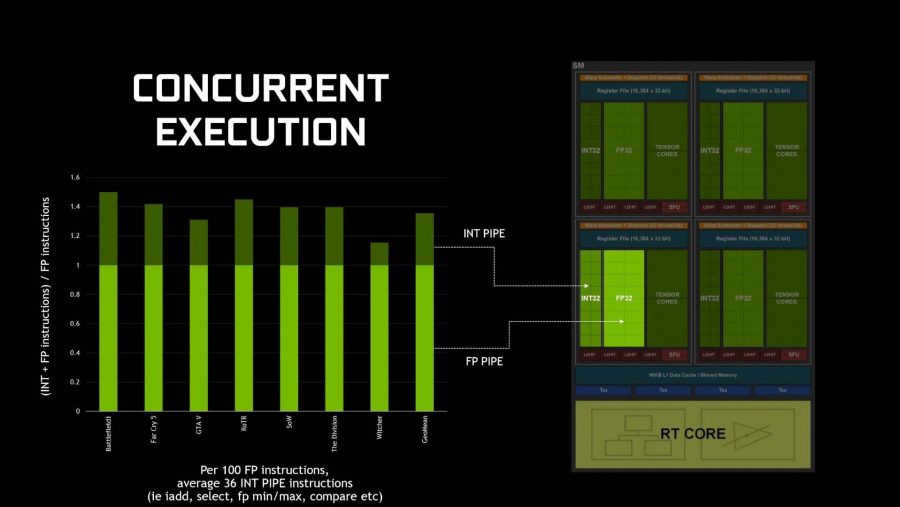

One of the biggest architectural differences is the change to the actual streaming multiprocessor (SM) of the Turing GPU. The new independent integer datapath may not sound massively exciting, but it means floating point and integer instructions can be executed concurrently rather than consecutively.

Your graphics card spends most of its time doing floating point calculations, but Nvidia has worked out that, on average, when you’re gaming your GPU will deal with around 36 integer instructions for every 100 floating point instructions. With Pascal that meant that every time the integer cores were being used the FP cores sat idle, wasting performance.

With Turing both can be active at the same time, meaning that there should never be a time where the INT32 and FP32 cores are hanging around twiddling their thumbs waiting for the other to finish what they’re doing. This is what’s largely responsible for the 50% performance boost of the Turing SM over Pascal.
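A back-of-the-envelope model shows how much the separate integer datapath could be worth on its own. The Python sketch below uses Nvidia’s 36-integer-per-100-floating-point mix and assumes, very simplistically, one instruction per issue slot, perfect overlap between the two pipes, and no dependencies or memory stalls. Those assumptions are ours, not Nvidia’s.

```python
def slots_shared(fp_ops, int_ops):
    """Pascal-style toy model: INT and FP work take turns on one datapath."""
    return fp_ops + int_ops


def slots_concurrent(fp_ops, int_ops):
    """Turing-style toy model: the two pipes run side by side, so the
    longer stream sets the time taken."""
    return max(fp_ops, int_ops)


FP_OPS, INT_OPS = 100, 36  # instruction mix quoted by Nvidia

speedup = slots_shared(FP_OPS, INT_OPS) / slots_concurrent(FP_OPS, INT_OPS)
print(f"Idealised speedup from concurrent INT/FP issue: {speedup:.2f}x")
```

Under these idealised conditions the concurrent design finishes the same work in 100 slots rather than 136, a 1.36x gain, which would leave the rest of the quoted 50% to the other SM changes.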

Nvidia has also improved the memory architecture, unifying the shared memory design and allowing the GPU to dynamically allocate L1 cache, effectively doubling it when there is spare capacity. The L2 capacity on Turing has also been doubled.

Within each of the Turing SMs you get eight Tensor Cores. These are the AI-focused cores designed for inferencing and chewing through deep learning algorithms at a pace not seen outside of the professional space. You may rightly ask, ‘what the hell are these extra chunks of AI silicon doing in my gaming card?’ but with the introduction of Nvidia’s Neural Graphics Acceleration (NGX) technology there are more areas where deep learning can improve games.

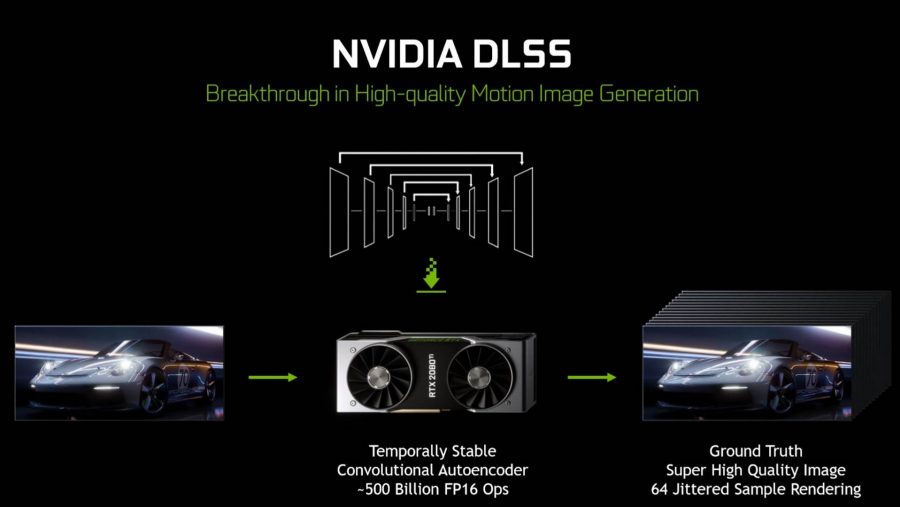

At the moment the only tangible benefit is Deep Learning Super Sampling (DLSS), an AI-based post-processing feature that improves on the appearance of Temporal Anti-Aliasing (TAA) while simultaneously boosting performance. Win, win, right? At its most simple, DLSS uses image data downloaded in the Nvidia drivers, feeds that into the Tensor Cores of your Turing GPU and allows the little AI smarts of your graphics card to fill in the blanks of a compatible game using far fewer samples than TAA needs. Essentially it no longer needs to render every pixel, because it has learned what games should look like when they’re upscaled and so knows what each pixel should be.

Nvidia gets this data by feeding its Saturn V supercomputer with millions of images from that specific game, and others like it, at very high resolutions, so that it can learn what a high resolution image should look like. The images it uses are all 64x super-sampling, and then once Saturn V has learned how to recreate an image that matches the super high-res pictures it’s been given then it’s ready to roll.

Then, on a local level, your Turing GPU will be able to create smooth, jaggy-less visuals in-game on the fly using the Tensor Cores to infer the details without needing the extreme number of samples TAA does, significantly cutting down the render time. It can also fix the sometimes blurry or broken images you can get with TAA, while also making compatible games run faster than when they use TAA.

There are, in total, 25 games in development at the moment which will support DLSS. Though unfortunately we don’t know when that support will appear.

In the future, however, those Tensor cores will be able to use their smarts to accelerate genuine AI in games, via Microsoft’s WinML API, as well as provide super slow-mo visuals to replays and highlights, and hopefully other cool AI-based features we haven’t even thought of yet. I like AI, I think robots are cool, and I’m looking forward to having one in my PC. Until it inevitably rises up and enslaves me, obvs.

That’s all the stuff which will help traditional rasterized rendering of games, but the Turing architecture is also introducing the next generation of graphics rendering, real-time ray tracing.

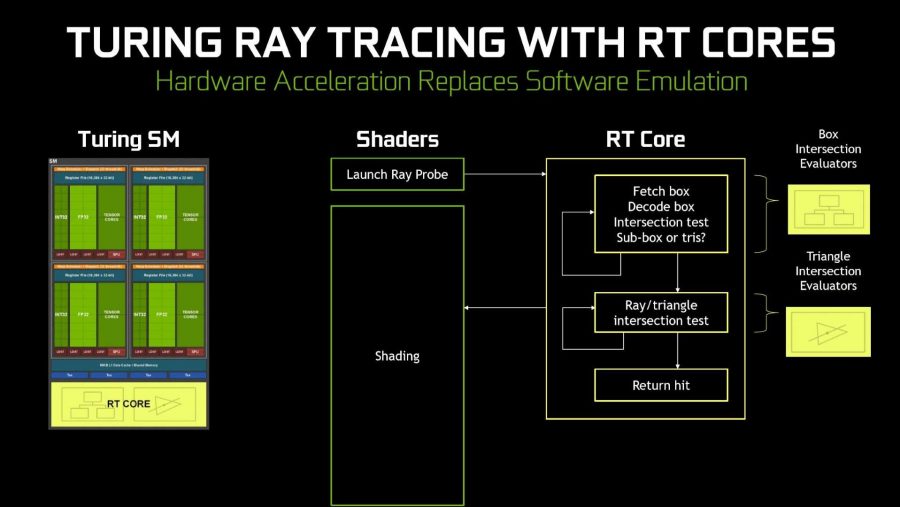

Intel, AMD, and Nvidia have all been demonstrating ray tracing on their hardware for years, with Intel even showing entire games running ray traced on its tech. But Nvidia is the first to actually demonstrate games that will be released with ray traced features inside them, running genuinely in real time. The new RT Cores aren’t going to give you games that are entirely ray traced, but Nvidia is using the new Microsoft DirectX Raytracing API to accelerate a hybrid ray tracing/rasterized rendering technique. This allows game engines to use the efficiency of rasterization and the accuracy of ray tracing to balance fidelity and performance.

The first games we’ve played using the new technique are Shadow of the Tomb Raider and Battlefield 5, both of which are using it in different ways. Lara’s using the tech to ray trace her shadows and lighting in real time, while DICE is using it to ray trace accurate reflections across its entire game world.

Turing enables what once took entire rendering farms to do in real time to be done on a single GPU. To do this it uses dedicated silicon as well as a redesigned rendering pipeline, allowing rasterization and ray tracing to be done simultaneously.

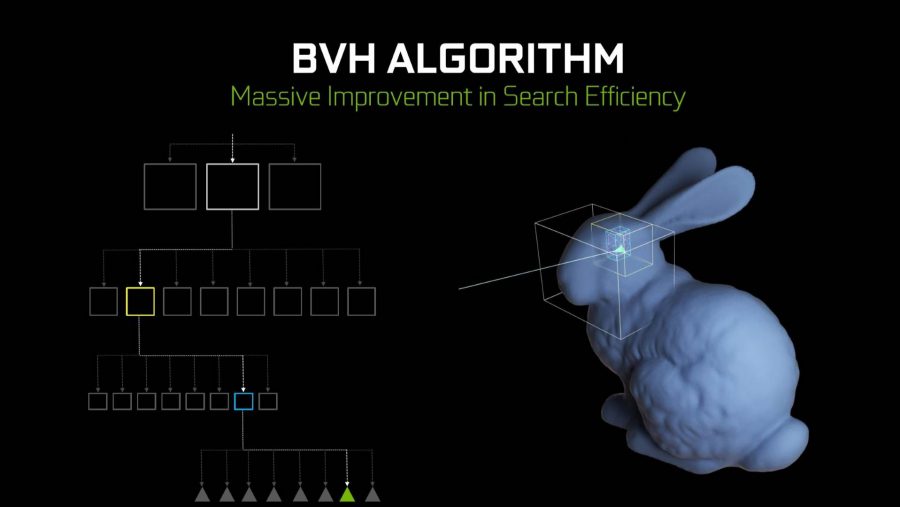

That dedicated silicon is one of the biggest changes to the Turing GPU architecture. The RT Cores are fixed function cores designed to accelerate the specific technique which has become the industry standard for ray tracing: bounding volume hierarchy (BVH).

“It wasn’t that long ago there were many different competing technologies for doing ray tracing,” Nvidia’s Tom Petersen told me recently. “BVH has, over the last several years, become clearly a great way of doing this sort of like projection and intersection of geometry.

“So once you kind of know the algorithm then it’s a question of how do you map that algorithm to hardware and that problem itself is quite complicated. I would say Turing is what it is primarily because we know the technology is at the right intersection time and we get great results.”

BVH is the process by which the hardware can track the traversal of individual rays of light generated in a scene, as well as the exact point at which each ray intersects with objects. The algorithm checks ever smaller boxes on a target object to nail down its motion through a scene, testing and retesting until the ray finally strikes an object. Then it needs to carry on the box checking to see where exactly on the object it’s hit. Currently that’s all done with the standard silicon inside each SM, tying it up calculating the billions of rays necessary to create a believable ray traced effect. The RT Cores, however, offload that work from the SM, leaving it to its traditional work, and accelerating the whole process massively.

There are two specific units inside the RT Core – one does all the bounding box calculations, and the second carries out the triangle intersection tests, ie where on an object the ray in question strikes it.
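To make those two jobs concrete, here is a minimal software sketch in Python of the same pair of tests: a ray/bounding-box check used to skip whole branches of the hierarchy, and a ray/triangle check run only on what survives. It uses the well-known slab and Möller-Trumbore methods as stand-ins. Nvidia hasn’t detailed the exact algorithms inside the RT Core, and the `Node` layout and two-triangle scene are invented for illustration.

```python
from dataclasses import dataclass, field

EPS = 1e-9


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


@dataclass
class Node:
    lo: tuple                      # minimum corner of the bounding box
    hi: tuple                      # maximum corner of the bounding box
    children: list = field(default_factory=list)
    triangles: list = field(default_factory=list)


def hits_box(origin, direction, lo, hi):
    """Slab test: does the ray pass through the axis-aligned box?"""
    t_near, t_far = 0.0, float("inf")
    for axis in range(3):
        if abs(direction[axis]) < EPS:
            # Ray runs parallel to this pair of faces.
            if not lo[axis] <= origin[axis] <= hi[axis]:
                return False
            continue
        t0 = (lo[axis] - origin[axis]) / direction[axis]
        t1 = (hi[axis] - origin[axis]) / direction[axis]
        t_near = max(t_near, min(t0, t1))
        t_far = min(t_far, max(t0, t1))
    return t_near <= t_far


def hit_triangle(origin, direction, tri):
    """Moller-Trumbore test: distance along the ray to the hit, or None."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < EPS:
        return None  # ray is parallel to the triangle
    s = sub(origin, v0)
    u = dot(s, p) / det
    q = cross(s, e1)
    v = dot(direction, q) / det
    if u < 0 or v < 0 or u + v > 1:
        return None  # ray misses the triangle
    t = dot(e2, q) / det
    return t if t > EPS else None


def trace(node, origin, direction):
    """Walk the hierarchy and return the nearest hit distance, or None."""
    if not hits_box(origin, direction, node.lo, node.hi):
        return None  # the whole subtree is skipped
    hits = [hit_triangle(origin, direction, t) for t in node.triangles]
    hits += [trace(child, origin, direction) for child in node.children]
    hits = [h for h in hits if h is not None]
    return min(hits) if hits else None


# Two triangles in separate leaf boxes under one root box.
near = Node((-1, -1, 5), (1, 1, 5),
            triangles=[((-1, -1, 5), (1, -1, 5), (0, 1, 5))])
far = Node((9, -1, 5), (11, 1, 5),
           triangles=[((9, -1, 5), (11, -1, 5), (10, 1, 5))])
root = Node((-1, -1, 5), (11, 1, 5), children=[near, far])

print(trace(root, (0, 0, 0), (0, 0, 1)))  # ray fired straight down the z axis
```

The ray fired down the z axis never runs a triangle test against the second leaf, because its bounding box is rejected first. That pruning is the whole point of the hierarchy, and it is the work the RT Cores take off the SMs.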

For example, the GTX 1080 Ti can accurately track around 1 billion rays of light per second, while the equivalently priced RTX 2080 can deal with 8 billion. Nvidia’s shorthand for this is Giga Rays per Second, and the RTX 2080 Ti can manage more than 10 billion rays, or 10 Giga Rays per Second.
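Those headline numbers are easier to judge as a per-pixel budget. The short Python sketch below divides the quoted peak ray rates by the number of pixels drawn each second. The resolutions and the 60fps target are our own assumptions, and real games will land well below these theoretical peaks.

```python
def rays_per_pixel(giga_rays_per_second, width, height, fps):
    """Peak rays available for each pixel of each frame."""
    return giga_rays_per_second * 1e9 / (width * height * fps)


# Peak figures quoted above, in Giga Rays per Second.
PEAK_GIGA_RAYS = {"GTX 1080 Ti": 1, "RTX 2080": 8, "RTX 2080 Ti": 10}

for card, giga_rays in PEAK_GIGA_RAYS.items():
    at_1080p = rays_per_pixel(giga_rays, 1920, 1080, 60)
    at_4k = rays_per_pixel(giga_rays, 3840, 2160, 60)
    print(f"{card}: {at_1080p:.1f} rays/pixel at 1080p60, {at_4k:.1f} at 4K60")
```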

As well as the new hardware inside the Turing GPUs themselves, Nvidia has also created a set of new rendering techniques that can be used to enhance the visual fidelity of a game world and/or improve a game engine’s performance. The first is the introduction of Mesh Shading into the graphics pipeline, a feature which reduces the CPU bottleneck for processing the different objects in a scene and allows a game world to have far more objects without tanking performance as it no longer needs a unique draw call from the processor for each of them.

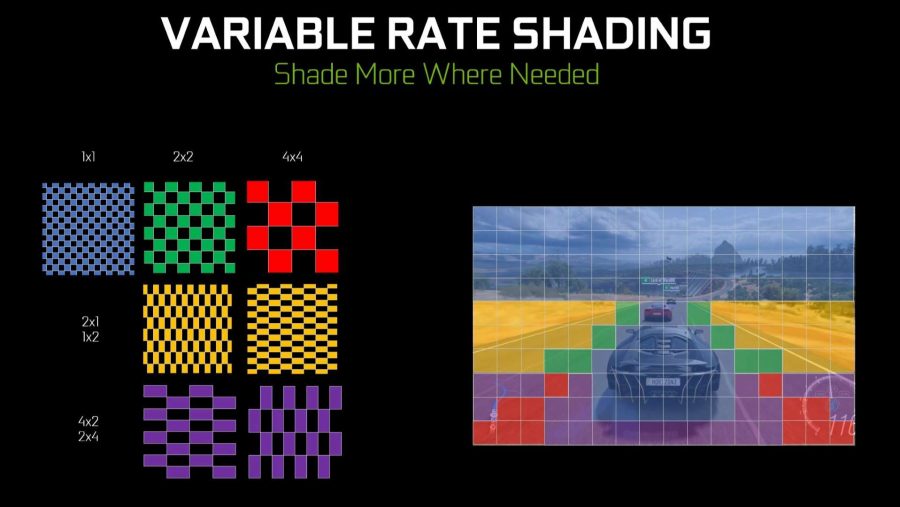

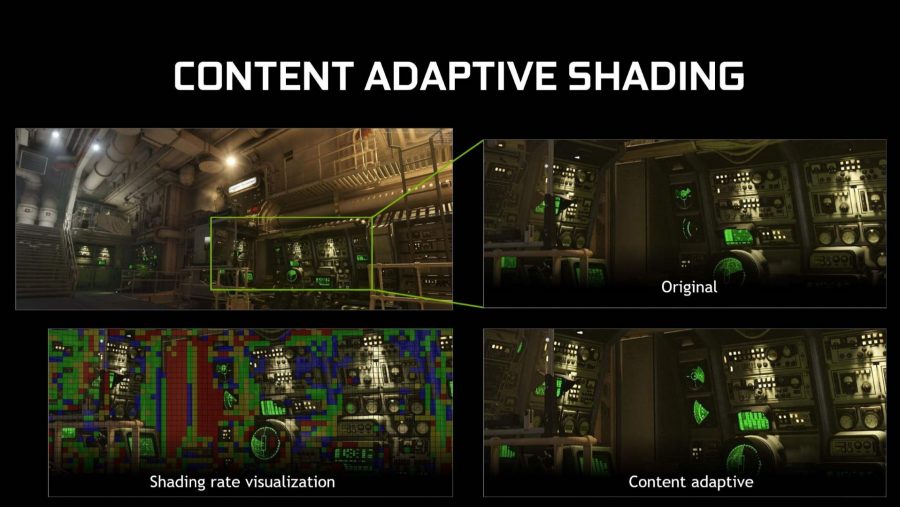

Variable Rate Shading (VRS) is a potentially incredibly powerful tool which reduces the amount of shading necessary in a game scene by allowing the Turing GPU to segment the screen into 16-pixel by 16-pixel regions and allowing each of them to have a different shading rate. VRS gives developers seven different shading rates, from one where all the pixels are shaded in full to one where the GPU only needs to shade 16 out of 256 pixels in a region.
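The saving at each rate is simple arithmetic: a rate of W x H means one shading result is shared across a block of W by H pixels. The Python sketch below counts the shader invocations per 16x16 region. The two end points come from the description above, while the five intermediate rates are listed as we understand them from Nvidia’s public material, so treat the exact set as an assumption.

```python
TILE = 16  # VRS regions are 16 x 16 pixels

# (width, height) of the pixel block that shares one shading result.
# Only 1x1 and 4x4 are spelled out in the text; the rest are assumed.
SHADING_RATES = [(1, 1), (2, 1), (1, 2), (2, 2), (4, 2), (2, 4), (4, 4)]


def shaded_samples(rate, tile=TILE):
    """Number of shader invocations needed to cover one tile at this rate."""
    block_w, block_h = rate
    return (tile // block_w) * (tile // block_h)


for rate in SHADING_RATES:
    samples = shaded_samples(rate)
    share = samples / (TILE * TILE)
    print(f"{rate[0]}x{rate[1]}: {samples} of {TILE * TILE} pixels shaded ({share:.0%})")
```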

VRS is then split into three different usage cases: Content Adaptive Shading, Motion Adaptive Shading, and Foveated Rendering. The last one is specifically aimed at taking some of the heavy lifting out of VR rendering by rendering only the main focus of the viewer’s eyes in full detail, with the periphery rendered in lower detail. With eye-tracking in VR this method will hugely reduce the amount of strain placed on a system to render a high-res VR scene.

Content Adaptive Shading is a post-processing step added into the end of the current frame which allows the GPU to understand the amount of detail in the different regions of that frame and adjust the shading rate accordingly for subsequent frames. If a region doesn’t have a lot of detail in it, a flat wall, for example, then the shading rate can be lowered, but if it is high then it can be rendered in full.

Motion Adaptive Shading can be used in conjunction with Content Adaptive Shading, and works on the idea that it is wasteful, in shading terms, to render regions which are moving quickly in full detail as the eye cannot focus on them anyway. A driving game is a good example, where the terrain zipping past doesn’t have to be rendered in full as it is barely noted by the eye, while the middle of the screen, the focus of the view, needs to be rendered in full.
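As a rough illustration of how the two adaptive modes might combine, the Python sketch below picks a shading block size for each screen region from the detail in the previous frame and the speed of on-screen motion. The thresholds, the use of luminance variance as the measure of detail, and the rule of taking the coarser of the two results are all hypothetical choices of ours, not Nvidia’s actual heuristics.

```python
from statistics import pvariance


def content_rate(luma_tile, low=0.001, high=0.01):
    """Coarser shading for flatter regions (hypothetical thresholds)."""
    detail = pvariance(luma_tile)
    if detail < low:
        return 4  # almost flat, such as a plain wall: one result per 4x4 block
    if detail < high:
        return 2
    return 1      # lots of detail: shade every pixel


def motion_rate(speed_px_per_frame, slow=2.0, fast=8.0):
    """Coarser shading for faster-moving regions (hypothetical thresholds)."""
    if speed_px_per_frame >= fast:
        return 4
    if speed_px_per_frame >= slow:
        return 2
    return 1


def tile_rate(luma_tile, speed_px_per_frame):
    """Combine both modes by taking whichever asks for coarser shading."""
    return max(content_rate(luma_tile), motion_rate(speed_px_per_frame))


flat_wall = [0.5] * 256           # 16x16 region with no detail at all
checkerboard = [0.0, 1.0] * 128   # 16x16 region with very high contrast

print(tile_rate(flat_wall, 0.0))      # static flat wall
print(tile_rate(checkerboard, 0.0))   # static, detailed region
print(tile_rate(checkerboard, 12.0))  # detailed region rushing past
```

In this toy version the flat wall and the fast-moving region both drop to the coarsest block size, while the detailed, static region keeps full-rate shading.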

There are other new features in Turing, such as Acoustic Simulation and Multi-View rendering for VR, and Texture Space Shading to improve rendering time by reusing previously completed rendering computations for a specific texture.

In short, there’s a lot of new stuff in the Turing GPU architecture that could end up making the GPUs released this year perform even better next year.

Nvidia Turing pricing

So yeah, if you want the absolute pinnacle of Turing performance then you want to get yourself a Quadro RTX 8000 card, with the full-fat TU102 GPU inside it and 48GB of GDDR6 memory. Of course you’re looking at a street price of around $10,000 for that, and the same GPU with 24GB of memory, the Quadro RTX 6000, is over $6,000.

Which all makes the $1,199 (£1,099) Nvidia is asking for the Founder’s Edition GeForce RTX 2080 Ti look rather reasonable. Well, almost. There may eventually be reference-clocked versions of the RTX 2080 Ti retailing for the $999 MSRP, but that won’t happen until supply goes up and demand comes down post launch.

The RTX 2080 is a little more affordable, at $799 (£749) for the Founder’s Edition and with a base MSRP of $699 for the reference-clocked cards.

Nvidia Turing performance

We’ve now benchmarked the first Turing GPUs in traditional games and the performance varies between unprecedented – for the Titan-esque RTX 2080 Ti – and something altogether more familiar. Yes, the RTX 2080 Ti might perform spectacularly, but the RTX 2080 only just manages a few extra frames per second on average over and above the GTX 1080 Ti. Which is an older, cheaper card.

But it’s the ray tracing performance that’s going to be really interesting going forward. Ray tracing is an incredibly demanding workload for any current generation graphics card. This rendering technique traces paths of light interacting with virtual objects to capture much more detail and realism in the finished scene. Ray tracing captures shadowing, reflections, refraction, and global illumination much better than current rendering techniques, which often require workarounds to accomplish the same results with less computational demand.

A single Nvidia RTX 2080 or 2080 Ti can run the Unreal Engine 4 reflections demo in real time, which is incredible. The Star Wars ray tracing demo previously required one of Nvidia’s DGX Stations, powered by four Tesla V100 GPUs. That’s $70,000 worth of Volta tech, and the Turing RTX chips distill all that ray tracing performance into a single GPU.

It’s not the full enchilada, however. The demo is a little more cut down than the full thing, but still looks stunning, if a tiny bit noisier. It’s likely got fewer rays and relies more heavily on the DLSS-based denoising.

UL will have a new 3DMark Ray Tracing demo out before the end of the year, and we’ve had an early demo of it running on our test rig. Though it’s not meant to be representative of final performance the RTX 2080 Ti was running at around 45fps at 1080p. See, told you ray tracing was intensive…