It’s testament to the Nvidia marketing team that one of its buzzwords has now slipped into common parlance. Not only did the 1st-gen Nvidia GeForce 256 introduce us to its now famous GeForce gaming graphics brand, but it also brought the term ‘GPU’ to the gaming PC with it.

An initialism that we now use as shorthand for any PC graphics chip, or even one of the best graphics cards, started life on the PC as an Nvidia marketing slogan. To give you an idea of how long ago this was, I was introduced to the term “GPU” by a paper press release the same week I started my first tech journalism job in September 1999.

We didn’t get press releases via email then – they were physically posted to us, and the editorial assistant sorted them all into a box for the team to peruse. “In an event that ushers in a new era of interactivity for the PC, Nvidia unveiled today the GeForce 256, the world’s first graphics processing unit (GPU)”, it said.

At the time, I thought it seemed pompous – how could this relative newcomer to the 3D graphics scene have the nerve to think it could change the language of PC graphics? But I now see that it was a piece of marketing genius. Not only did “GPU” stick for decades to come, but it also meant Nvidia was the only company with a PC GPU at this point.

GeForce 256 transform and lighting

Nvidia’s first GPU did indeed handle 3D graphics quite differently from its peers, so a little history lesson is in order. To understand what made the first GeForce GPU so special, we first have to look at the 3D pipelines of the time.

It was October 1999, and the first 3D accelerators had only been doing the rounds for a few years. Up until the mid-1990s, 3D games such as Doom and later Quake were rendered entirely in software by the CPU, with the latter being one of the first games to require a floating point unit.

If you want to display a 3D model, it has to go through the graphics pipeline, which at this stage was all handled by the CPU. The first stage is geometry, where the CPU works out the positioning (where polygons and vertices sit in relation to the camera) and lighting (how polygons will look under the lighting in the scene). The former involves mathematical transformations and is usually referred to as “transform”, with the two processes together called “transform and lighting”, or T&L for short.
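To make the two halves of T&L a little more concrete, here’s a minimal sketch in Python. It isn’t how any particular game or driver did it. The function names, the simple perspective divide, and the Lambertian diffuse lighting model are all assumptions chosen for illustration, and it assumes every vertex sits in front of the camera.

```python
import math

def transform(vertex, camera_pos, focal_length=1.0):
    """Move a world-space vertex into camera space, then project it to 2D."""
    x = vertex[0] - camera_pos[0]
    y = vertex[1] - camera_pos[1]
    z = vertex[2] - camera_pos[2]  # assumed to be > 0 (in front of the camera)
    # Perspective divide: distant points land closer to the screen centre.
    return (focal_length * x / z, focal_length * y / z, z)

def normalize(v):
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def light(normal, light_dir):
    """Diffuse brightness from the angle between the surface and the light."""
    n = normalize(normal)
    l = normalize(light_dir)
    return max(0.0, sum(a * b for a, b in zip(n, l)))

def transform_and_light(vertices, normals, camera_pos, light_dir):
    """Run both geometry steps for every vertex, as the CPU once had to."""
    return [
        (transform(v, camera_pos), light(n, light_dir))
        for v, n in zip(vertices, normals)
    ]
```

Every vertex in every frame goes through maths like this, which is why handing it over to dedicated hardware was such a big deal.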

Once the geometry is nailed down, the next steps are rasterization, which fills in the areas between the vertices, and pixel processing operations, such as depth compare and texture look-up. This is, of course, a massive oversimplification of the 3D graphics pipeline of the time, but it gives you an idea. We started with the CPU handling the whole graphics pipeline from start to finish, which resulted in low-resolution, chunky graphics and poor performance.
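As a rough illustration of those later stages, the sketch below fills a triangle pixel by pixel and uses a depth compare to decide whether each pixel should be overwritten. It’s a simplified sketch rather than a description of any real hardware. The function names are made up for this example, each triangle is given a single flat depth value, and texture look-up is left out entirely.

```python
def edge(a, b, p):
    """Signed area test: tells us which side of the edge a->b the point p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize(triangle, depth, color, depth_buffer, frame_buffer):
    """Fill the pixels covered by a 2D triangle, keeping only the nearest surface."""
    a, b, c = triangle
    for y in range(len(frame_buffer)):
        for x in range(len(frame_buffer[0])):
            p = (x + 0.5, y + 0.5)  # sample at the pixel centre
            w0, w1, w2 = edge(b, c, p), edge(c, a, p), edge(a, b, p)
            # Inside if the point is on the same side of all three edges.
            inside = (w0 >= 0 and w1 >= 0 and w2 >= 0) or (
                w0 <= 0 and w1 <= 0 and w2 <= 0
            )
            # Depth compare: only draw if this surface is nearer than what's there.
            if inside and depth < depth_buffer[y][x]:
                depth_buffer[y][x] = depth
                frame_buffer[y][x] = color

# Usage: a near triangle "A" drawn first, then a larger, more distant triangle "B".
size = 4
depth_buffer = [[float("inf")] * size for _ in range(size)]
frame_buffer = [[None] * size for _ in range(size)]
rasterize(((0, 0), (4, 0), (0, 4)), 1.0, "A", depth_buffer, frame_buffer)
rasterize(((0, 0), (8, 0), (0, 8)), 2.0, "B", depth_buffer, frame_buffer)
```

The nested loops visit every pixel for every triangle, which hints at why doing this on the CPU limited games to low resolutions.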

We then had the first 3D accelerators, such as the 3dfx Voodoo and VideoLogic PowerVR cards, which handled the last stages of the pipeline (rasterization and pixel processing), and massively improved the way games looked and performed, while also ushering in the wide use of triangles rather than other polygon types for 3D rendering.

With the CPU no longer having to handle all these operations, and dedicated hardware doing the job, you could render 3D games at higher resolutions with more detail and faster frame rates. At this point, the CPU was still doing a fair amount of work though. If you wanted to play 3D games, you still needed a decent CPU.

Nvidia aimed to change this situation with its first ‘GPU’, which could process the entire 3D graphics pipeline, including the initial geometry stages for transform and lighting, in hardware. The CPU’s only job then was to work out what should be rendered and where it goes.

GeForce 256 vs 3dfx Voodoo 3

As with any new graphics tech, of course, the industry didn’t instantly move toward Nvidia’s hardware T&L model. At this point, the only real way to see it in action in DirectX 7 was to run the helicopter test at the start of 3DMark2000, although some games using OpenGL 1.2 also supported it.

The latter included Quake III Arena, but the undemanding nature of this game meant it ran practically as well with software T&L. DirectX 7 also didn’t require hardware-accelerated T&L to run – you could still run DirectX 7 games using software T&L calculated by the CPU, although it wasn’t as quick.

The GeForce was still a formidable graphics chip whether you were using hardware T&L or not though. Unlike the 3dfx Voodoo 3, it could render in 32-bit color as well as 16-bit (as could Nvidia’s Riva TNT2 before it), it had 32MB of memory compared to the more usual 16MB, and it also outperformed its competitors in most game tests by a substantial margin.

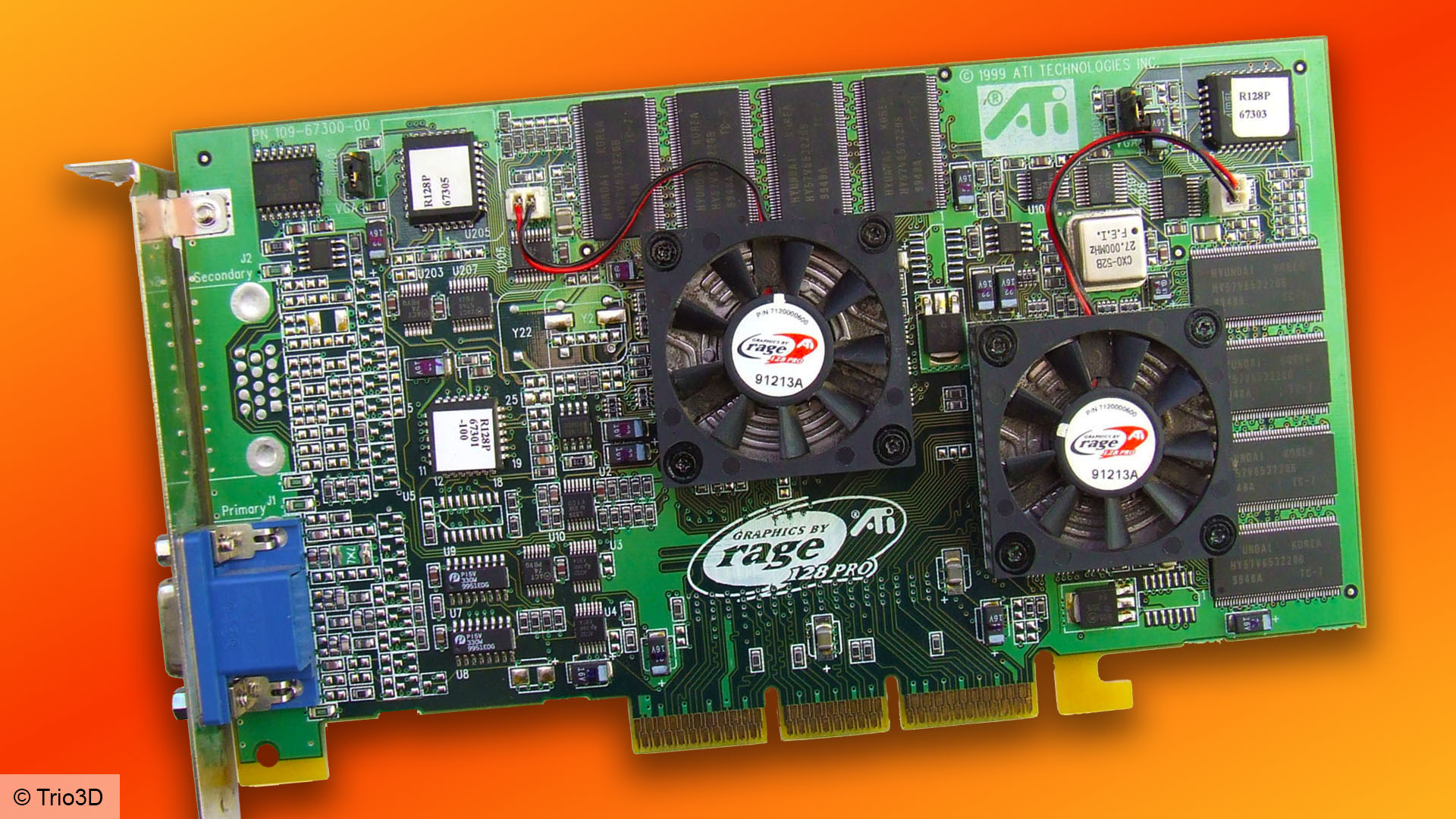

The response from ATi (now AMD) at the time was a brute-force approach, putting two of its Rage 128 Pro chips onto one PCB to make the Rage Fury Maxx, using alternate frame rendering (each graphics chip handled alternate frames in sequence – note how I’m not using the term ‘GPU’ here!) to speed up performance. I tested it shortly after the release of the GeForce 256 and it could indeed keep up.
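Alternate frame rendering is simple enough to sketch in a couple of lines of Python. The function name and the chip numbering below are purely illustrative and say nothing about how ATi’s drivers actually scheduled the work.

```python
def alternate_frame_rendering(frame_count, chip_count=2):
    """Return which chip renders each frame when frames are dealt out in turn."""
    return [frame % chip_count for frame in range(frame_count)]
```

With two chips, frames 0, 2, and 4 go to the first chip and frames 1, 3, and 5 go to the second, so each chip has roughly twice as long to finish its frame.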

The GPU wins

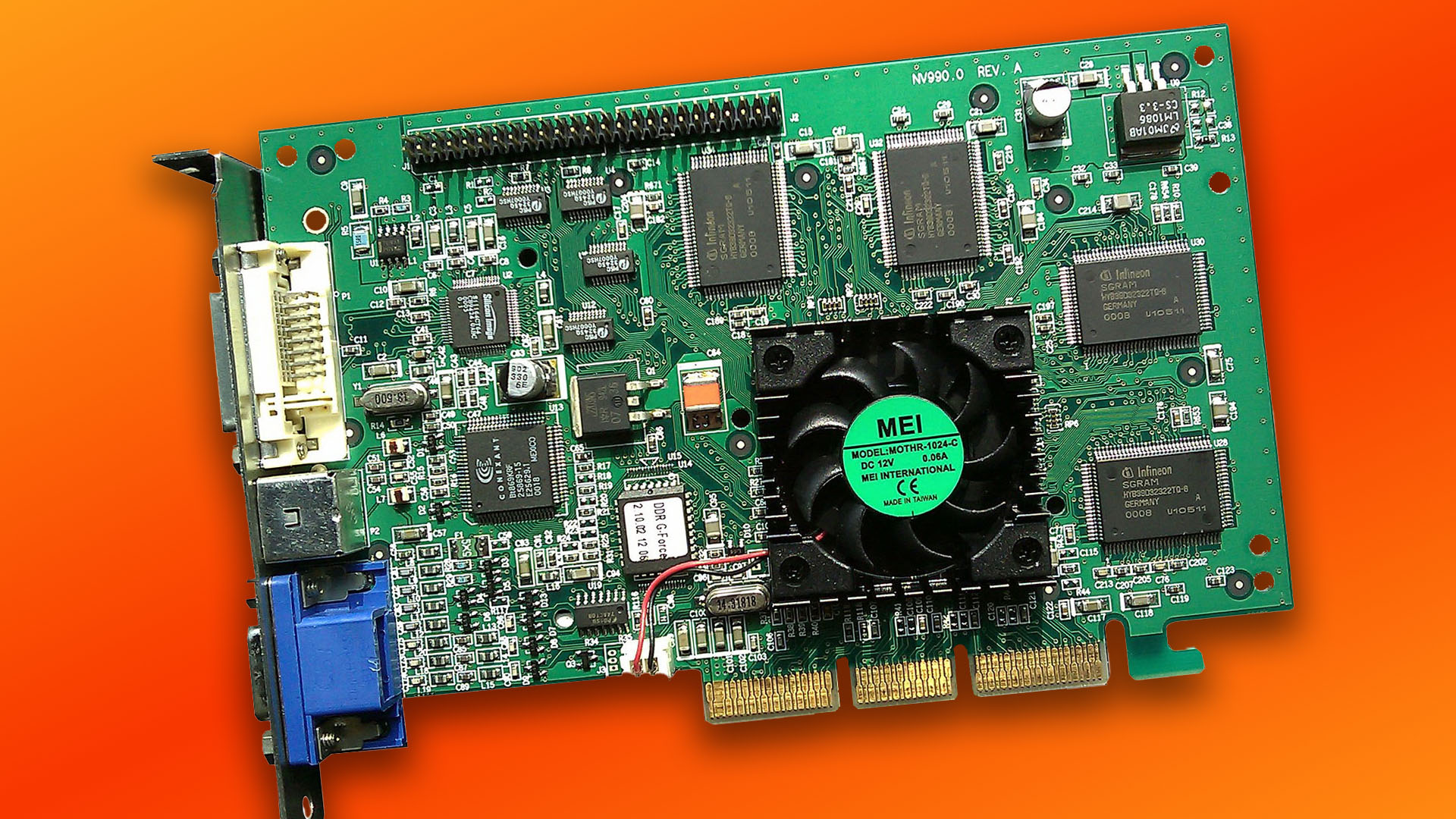

The Rage Fury Maxx’s time in the limelight was cut short, though, when Nvidia released the DDR version of the GeForce in December 1999, which swapped the SDRAM used on the original GeForce 256 for high-speed DDR memory. At that point, Nvidia had won the performance battle – nothing else could compete.

It also took a while for everyone else to catch up, and at this point, various people in the industry were still swearing that the ever-increasing speed of CPUs (we’d just passed the 1GHz barrier) meant that software T&L would be fine – we could just carry on with a partially accelerated 3D pipeline.

When 3dfx was building up to the launch of the Voodoo 5 in 2000, I remember it having an FAQ on the website. Asked whether the Voodoo 5 would have software T&L support, 3dfx said, “Voodoo4 and Voodoo5 have software T&L support.” It wasn’t deliberately dishonest, but every 3D graphics card could support software T&L at this time, because it was done by the CPU. It looked as though the answer was there to sneakily suggest feature parity with the GeForce 256.

In fact, the only other graphics firm to come up with a decent competitor in a reasonable time was ATi, which released the first Radeon half a year later, complete with hardware T&L support. Meanwhile, the 3dfx Voodoo and VideoLogic PowerVR lines never managed to get hardware T&L support on the PC desktop, with the Voodoo 5 and Kyro II chips still running T&L in software.

But 3dfx was still taking a brute-force approach – chaining VSA-100 chips together in an SLI configuration on its forthcoming Voodoo 5 range. The Voodoo 5 5500 finally came out in the summer of 2000, with two chips, slow SDRAM, and no T&L hardware. It could keep up with the original GeForce in some tests, but by that time Nvidia had already refined its DirectX 7 hardware further and released the GeForce 2 GTS.

By the end of the year, and following a series of legal battles, 3dfx went bust, and its assets were bought up by Nvidia. GeForce, and the concept of the GPU, had won.

We hope you’ve enjoyed this personal retrospective about the very first Nvidia GeForce GPU. For more articles about the PC’s vintage history, check out our PC retro tech page, as well as our guide on how to build a retro gaming PC, where we take you through all the hardware you need.