A group of top Nvidia marketing gurus gather together to decide how to cool their latest top-end graphics card, the Nvidia GeForce FX 5800 Ultra. “We could be the Harley Davidson of graphics cards,” suggests Steve Sims, senior product manager. “When people buy a Harley or a Porsche one of the things they’re looking for is that distinct noise that it makes,” concurs Dan Vivoli, vice president of marketing.

The video of the meeting, titled The Decibel Dilemma, then cuts to a dentist using a drill, and other clips of the GeForce FX 5800 Ultra graphics card being used in humorously noisy situations. It’s pictured being used as a hairdryer, part of a coffee grinder, and on the end of a leaf blower. Make no mistake, Nvidia knows this is not the best graphics card right now.

Having realized that the criticism of the noise from its latest FX Flow cooler isn’t going to go away, Nvidia has decided to put its hands up and own the situation with a comedy video, based on ideas contributed by the PC enthusiast community.

It’s difficult to imagine a tech manufacturer showing a similar self-deprecating sense of humor now – Nvidia did, after all, originally demand $1,199 for the GeForce RTX 4080 at launch, apparently with a completely straight face. You can see the video below.

The Nvidia dustbuster cooler

Was this card’s cooler really that bad? I was one of the reviewers who tested some of the first samples of the GeForce FX 5800 Ultra in early 2003, and I can tell you that the comparison with a hairdryer wasn’t far off. There was a very sudden contrast between the gentle hum the cooler made when idling in Windows and the wind tunnel-like roar it made when the tiny fan spun up during our gaming benchmarks.

You could hear it all the way from the other side of the lab, which provoked lots of laughter from the staff working there at the time. The solid wall of noise could be likened to a hairdryer, but it also had a high-pitched whir – combine a dentist’s drill with a hairdryer and you’ll have an idea of how it sounded.

We criticized the noise from the blower-style coolers AMD used on its first-generation Navi cards a few years ago, but that noise was nowhere near as ridiculous as the racket from the FX 5800 Ultra. Nvidia had got it so wrong that the only way to beat the comedians was to join them and laugh at itself. How did it happen?

The GeForce FX 5800 Ultra saw Nvidia taking a new approach to cooling. Up until this point, most (although not all) graphics cards had occupied a single expansion slot and only required a single fan on the GPU itself, but Nvidia’s new GPU architecture demanded something more substantial.

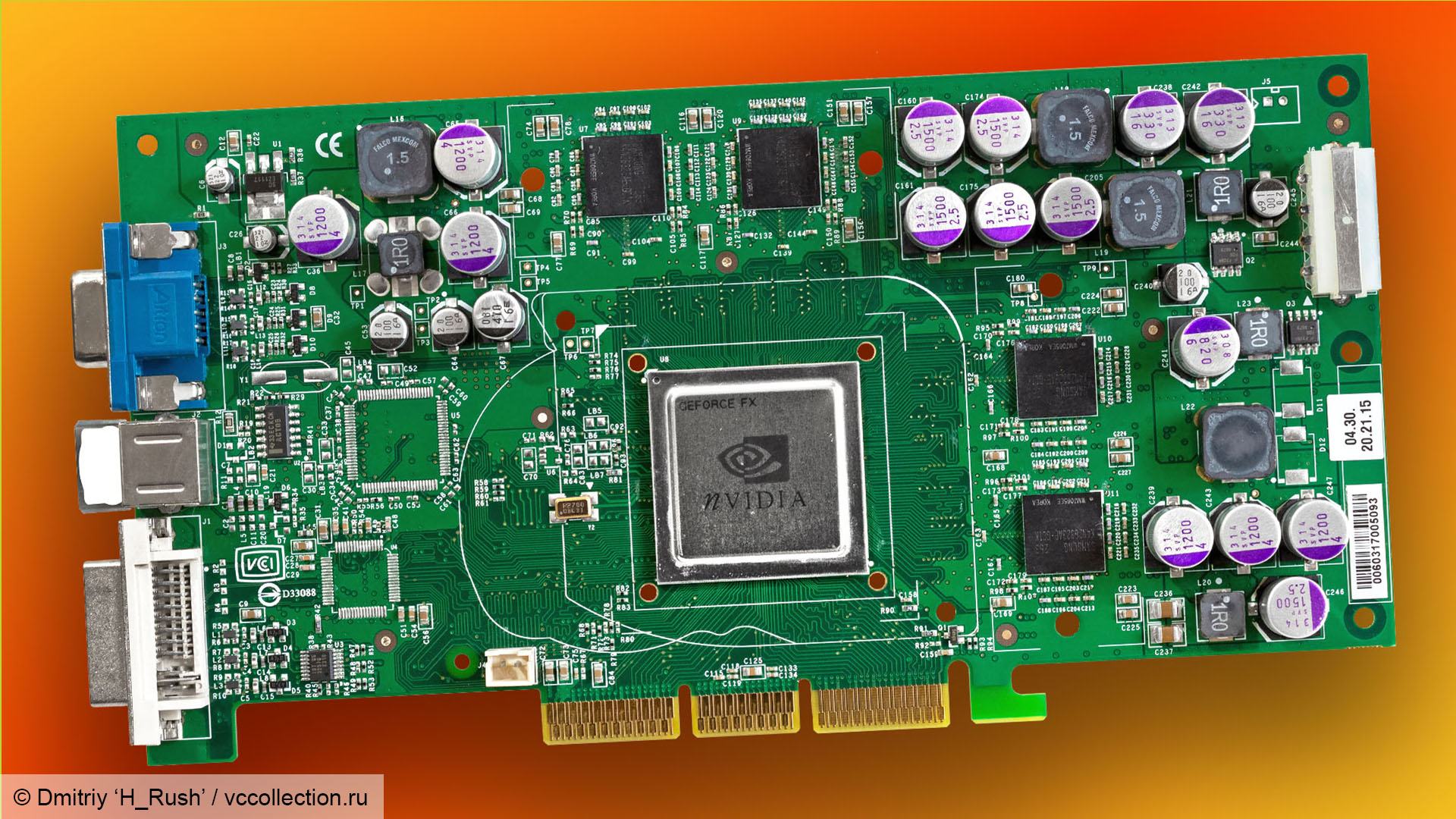

The move to the new GeForce FX architecture, codenamed Rankine, saw Nvidia moving to TSMC’s 130nm manufacturing process in a chip clocked at 500MHz, and also using GDDR2 memory clocked at 500MHz (1GHz effective). Nvidia wanted a cooling setup that could tame the comparatively hot-running hardware and its solution to this cooling problem was called FX Flow.

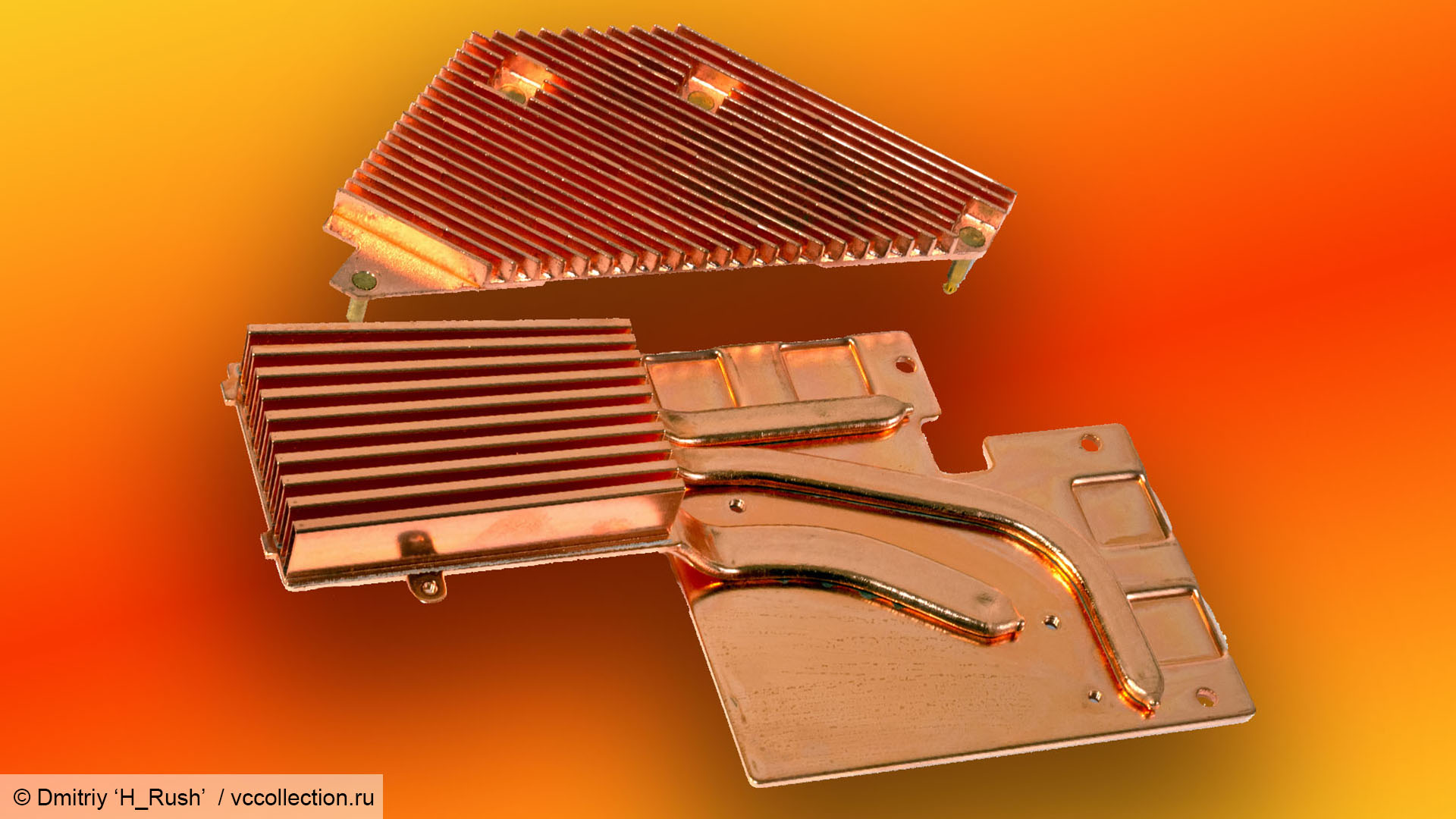

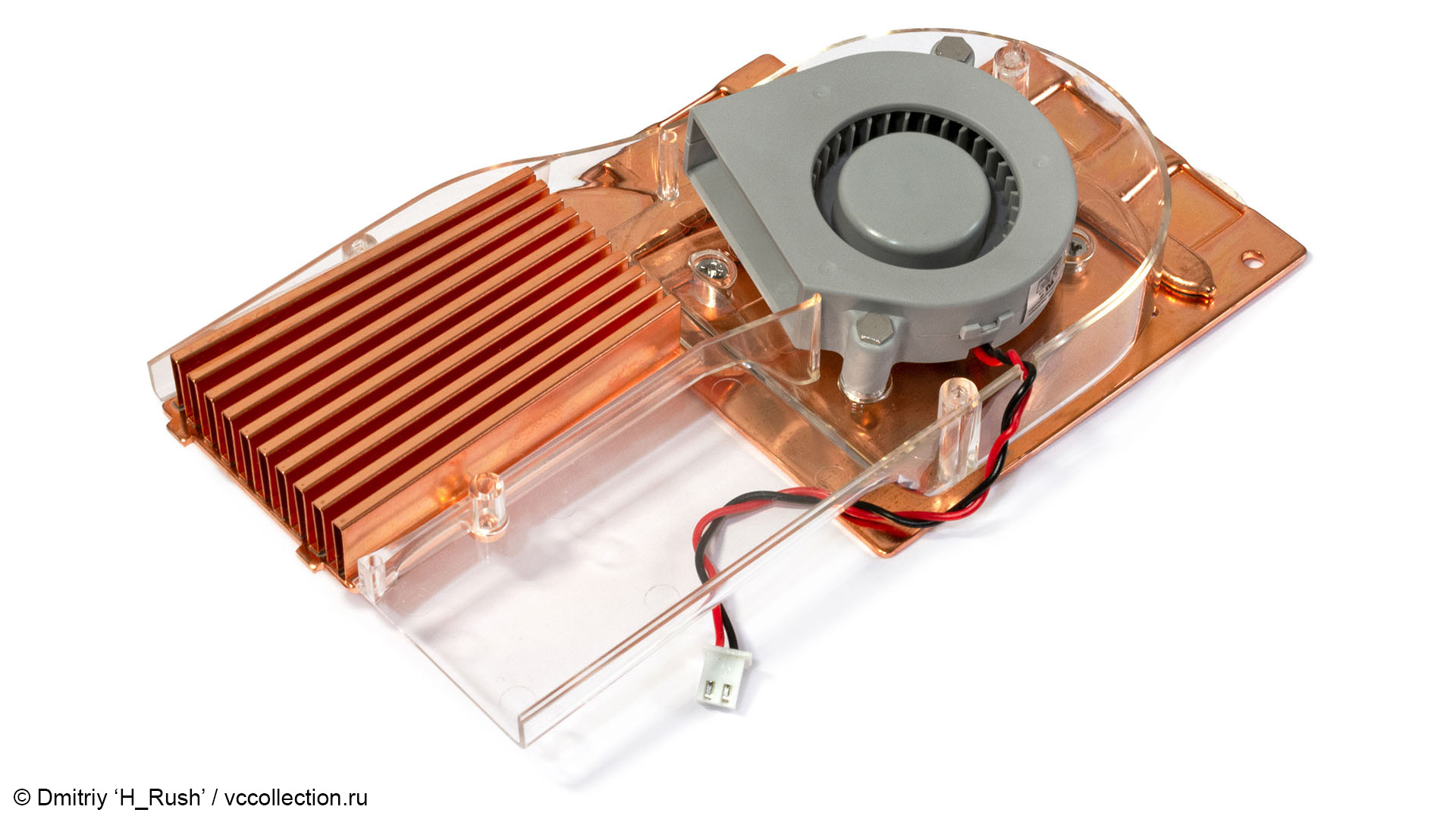

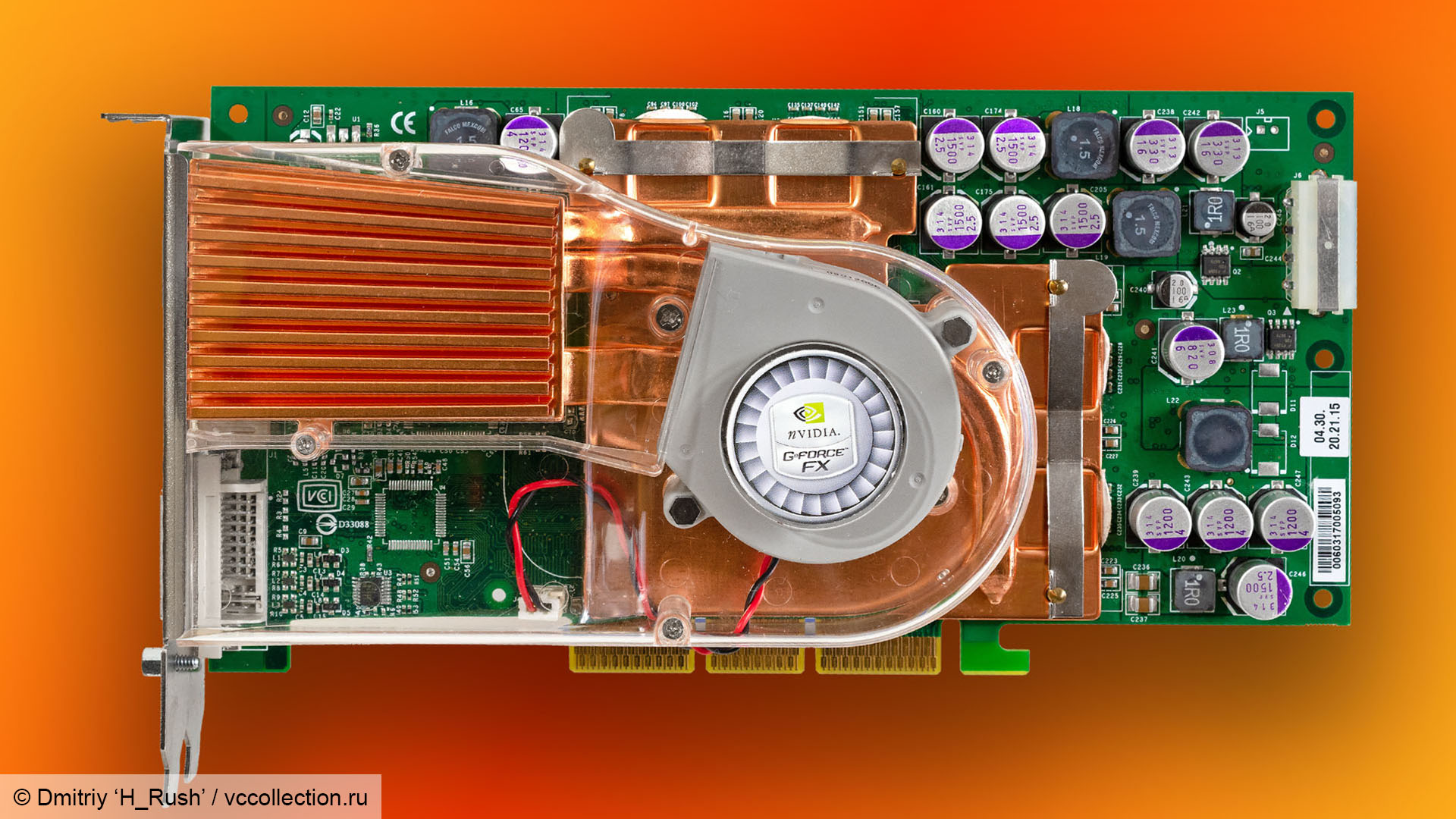

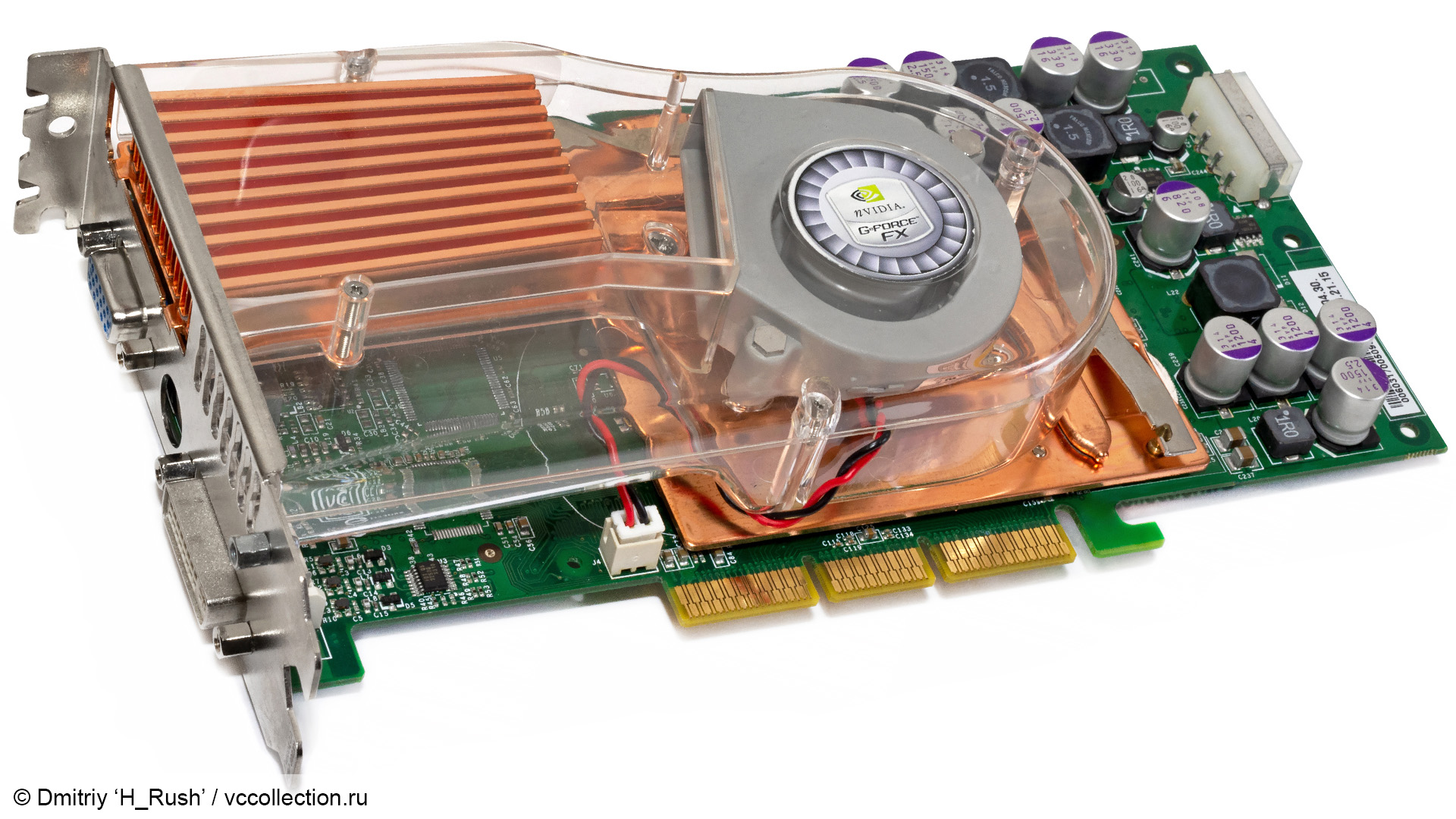

The idea was to cool the whole lot with one small fan, using a concentrated airflow system. A large copper plate covered the memory and GPU on the front of the card, and this plate was linked to a copper heatsink by three heatpipes. A small fan pulled in air through the top vent on the card’s backplate (the expansion slot bracket), passing it across the heatsink to cool the GPU and memory plate.

The same fan then pushed the air out through the bottom vent on the card’s backplate, with a plastic shroud channeling the airflow along this path.

Meanwhile, a second copper heatsink was attached to the memory chips on the back of the card. You can see how it all worked in the great photos of a Gainward GeForce FX 5800 Ultra card on this page, kindly provided by Dmitriy ‘H_Rush’ from vccollect.

It was a sound design in theory, and Abit had already demonstrated a similar cooling system on its OTES-branded graphics cards. With this method, Nvidia could keep the hot-running components cool while keeping the amount of hot air dumped inside your case to a minimum. The main problem was that the tiny fan had to spin at a very high speed to move enough air, and the noise was horrendous, earning the cooler the nickname of the “dustbuster” among enthusiasts.

Was GeForce FX good?

Nvidia’s joke marketing video did one good job for the company, which was to focus attention on the cooling system, rather than the GPU. Even the discussion at the beginning of the video describes the GeForce FX 5800 Ultra as “the most powerful graphics card on the planet,” which was stretching the truth at best. It was definitely more powerful than the GeForce 4 Ti cards that preceded it, but it was also up against some seriously powerful competition from ATi (before it was bought by AMD).

Before we get into the details, let’s step back and take a look at the graphics landscape at this time. DirectX 8 had introduced us to the potential for programmable shaders – small programs that could be run directly on a GPU by its dedicated pixel and vertex processors, sometimes called pipelines. We still use pixel and vertex shaders today, but they’re now processed by all-purpose stream processors, rather than dedicated pixel and vertex processors.
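To make the idea of a programmable shader a little more concrete, here’s a loose analogy in Python rather than real shader code. It assumes a hypothetical `run_pixel_shader()` helper that calls a small function once for every pixel and stores the color it returns, with `gradient_shader()` as a made-up example shader. Real DirectX 8 pixel shaders were written in a short assembly-style language and executed in parallel by the GPU’s pixel processors, not in a Python loop, so treat this purely as a sketch of the concept.

```python
from typing import Callable, List, Tuple

Color = Tuple[float, float, float]  # RGB components in the 0.0-1.0 range


def run_pixel_shader(width: int, height: int,
                     shader: Callable[[float, float], Color]) -> List[List[Color]]:
    """Hypothetical helper: call `shader` once per pixel with normalized (u, v) coordinates."""
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            # Map pixel positions to 0.0-1.0 so the shader doesn't depend on resolution.
            u = x / (width - 1) if width > 1 else 0.0
            v = y / (height - 1) if height > 1 else 0.0
            row.append(shader(u, v))
        image.append(row)
    return image


def gradient_shader(u: float, v: float) -> Color:
    """Made-up example shader: red rises left to right, blue rises top to bottom."""
    return (u, 0.0, v)


frame = run_pixel_shader(4, 3, gradient_shader)
print(frame[0][0], frame[2][3])  # (0.0, 0.0, 0.0) (1.0, 0.0, 1.0)
```

Swapping in a different function changes what every pixel looks like without touching the loop, which is the kind of flexibility that fixed-function pipelines lacked.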

There was clearly a lot of potential here – you could see it in the beautiful Nature section of the 3DMark2001 benchmark – but games that used DirectX 8’s shader model were few and far between, even though it was supported by Nvidia’s GeForce 3 and GeForce 4 Ti chips, as well as ATi’s Radeon 8500.

It’s for this reason that cheaper GPUs available at the same time, such as the Radeon 7500 and GeForce 4 MX, could get away with having no shader support at all. For context, the Nintendo Wii still had no programmable shader support when it was released in 2006.

There were some notable games that supported early shaders, such as The Elder Scrolls III: Morrowind and Unreal Tournament 2003, but the vast majority of games still used fixed-function pipelines. That situation started to change with the launch of DirectX 9 and Shader Model 2, which introduced a more flexible shader model, followed by high-profile titles such as Doom 3 and Half-Life 2.

Shader Model 2 massively increased the number of pixel shader instruction slots from 8+4 (arithmetic plus texture instructions) to 64+32, upping the texture instruction limit from four in Pixel Shader 1.3 to 32 in Pixel Shader 2. Likewise, the number of vertex shader instruction slots doubled from 128 to 256, and Vertex Shader 2 could execute a maximum of 1,024 vertex instructions, compared with just 128 in Vertex Shader 1.1.
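The jump in limits is easier to appreciate as multiples. This minimal Python sketch simply stores the figures above, assuming Pixel Shader 2’s 96 slots split into 64 arithmetic and 32 texture instructions, and prints how many times larger each Shader Model 2 limit is than its predecessor. The dictionary names and the `factor()` helper are illustrative only.

```python
# Pixel shader slots stored as (arithmetic, texture), as quoted in the text.
PIXEL_SHADER_SLOTS = {
    "Pixel Shader 1.3": (8, 4),
    "Pixel Shader 2.0": (64, 32),
}
# Vertex shader limits stored as (instruction slots, max instructions executed).
VERTEX_SHADER_LIMITS = {
    "Vertex Shader 1.1": (128, 128),
    "Vertex Shader 2.0": (256, 1024),
}


def factor(old: int, new: int) -> float:
    """How many times larger the new limit is than the old one."""
    return new / old


ps13 = PIXEL_SHADER_SLOTS["Pixel Shader 1.3"]
ps20 = PIXEL_SHADER_SLOTS["Pixel Shader 2.0"]
vs11 = VERTEX_SHADER_LIMITS["Vertex Shader 1.1"]
vs20 = VERTEX_SHADER_LIMITS["Vertex Shader 2.0"]

comparisons = {
    "total pixel shader slots": (sum(ps13), sum(ps20)),
    "pixel shader texture slots": (ps13[1], ps20[1]),
    "vertex shader slots": (vs11[0], vs20[0]),
    "vertex instructions executed": (vs11[1], vs20[1]),
}

for name, (old, new) in comparisons.items():
    # :g drops the trailing ".0", so 8.0 prints as "8"
    print(f"{name}: {old} -> {new} ({factor(old, new):g}x)")
```

The vertex shader slot count only doubled, but the number of instructions a vertex shader could actually execute rose eightfold, matching the eightfold rise in pixel shader slots.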

The problem for Nvidia was that ATi had got to Shader Model 2 long before the launch of GeForce FX. The ATi Radeon 9700 had eight pixel processors and four vertex processors. It didn’t use all the latest tech – it came with 128MB of DDR memory and was built on a 150nm process, but it had a single-slot cooler that didn’t make an awful noise and it beat Nvidia in the Shader Model 2 arms race by several months.

To make matters worse, ATi launched an update, the Radeon 9800 Pro, in the same time frame as the GeForce FX launch in early 2003. Based on the same Rage 8 architecture, it upped the GPU clock speed from the 9700’s 275MHz to 380MHz, and increased the memory frequency from 270MHz (540MHz effective) to 340MHz (680MHz effective).
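As a quick worked example of how big the Radeon 9800 Pro’s clock bump was, this short Python sketch computes the percentage increase over the Radeon 9700 using the figures above. The `percent_increase()` helper is just illustrative arithmetic, and the effective memory figure assumes DDR’s two transfers per clock cycle.

```python
def percent_increase(old_mhz: float, new_mhz: float) -> float:
    """Return the percentage increase from old_mhz to new_mhz."""
    return (new_mhz - old_mhz) / old_mhz * 100


# Real (not effective) clock speeds in MHz, as quoted in the text.
radeon_9700 = {"GPU": 275, "memory": 270}
radeon_9800_pro = {"GPU": 380, "memory": 340}

for part in ("GPU", "memory"):
    old, new = radeon_9700[part], radeon_9800_pro[part]
    print(f"{part}: {old}MHz -> {new}MHz (+{percent_increase(old, new):.1f}%)")

# DDR memory transfers data twice per clock, so the effective rate is double.
print(f"effective memory: {radeon_9700['memory'] * 2}MHz -> "
      f"{radeon_9800_pro['memory'] * 2}MHz")
```

That works out to roughly a 38% higher GPU clock and a 26% higher memory clock, taking the effective memory speed from 540MHz to 680MHz.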

When the GeForce FX 5800 Ultra finally launched in March 2003, the battle was already over before it had begun. It launched with four pixel processors, compared to the Radeon 9700’s eight, and three vertex processors, compared to four on the 9700. It could barely compete with the GPU that had come out nine months beforehand, let alone ATi’s latest 9800 Pro.

Nvidia GeForce FX bandwidth

Of course, comparing the number of shader processors on paper isn’t an apples-to-apples comparison, due to the underlying differences in architecture, and that applies just as much today as it did then. For starters, unlike ATi’s GPUs at that time, GeForce FX supported Shader Model 2a, an extended and more capable version of Shader Model 2. The GeForce FX 5800 Ultra also had the benefit of using the latest GDDR2 memory, with a top effective clock speed of 1GHz, compared with 680MHz on the 9800 Pro.

However, although ATi’s cards used slower memory, it was attached to a much wider interface. With a 256-bit wide interface at its disposal, the Radeon 9800 Pro had a total memory bandwidth of 21.76GB/s from its 680MHz (effective) DDR RAM.

By comparison, the GeForce FX 5800 Ultra only had a 128-bit wide interface so, despite its faster memory, its total memory bandwidth was just 16GB/s. Basically, Nvidia’s choice to pair its GDDR2 memory with a narrow interface largely negated the point of using it. That was a big deal – you could get away with slower shader performance in a world where few games used programmable shaders, but restricted memory bandwidth opened up ATi’s lead in real-world gaming tests as well.
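Those bandwidth figures fall out of simple arithmetic: peak bandwidth is the bus width in bytes multiplied by the number of transfers per second, which is the effective memory clock. This minimal Python sketch uses a hypothetical `memory_bandwidth_gbs()` helper, takes 1GB as 10^9 bytes, and reproduces both numbers from the specs quoted above.

```python
def memory_bandwidth_gbs(bus_width_bits: int, effective_clock_mhz: float) -> float:
    """Peak memory bandwidth in GB/s, taking 1GB as 10**9 bytes."""
    bytes_per_transfer = bus_width_bits / 8  # e.g. a 256-bit bus moves 32 bytes per transfer
    # An effective clock in MHz is millions of transfers per second, so this is MB/s.
    megabytes_per_second = bytes_per_transfer * effective_clock_mhz
    return megabytes_per_second / 1000  # MB/s -> GB/s


# (bus width in bits, effective memory clock in MHz), as quoted in the text
cards = {
    "Radeon 9800 Pro": (256, 680),
    "GeForce FX 5800 Ultra": (128, 1000),
}

for name, (width, clock) in cards.items():
    print(f"{name}: {width}-bit at {clock}MHz effective = "
          f"{memory_bandwidth_gbs(width, clock):.2f}GB/s")
```

Halving the bus width halves the bytes moved per transfer, so the FX 5800 Ultra’s roughly 47% higher effective memory clock still left it with about 26% less bandwidth than the 9800 Pro. Plugging in the later FX 5950 Ultra’s 256-bit bus and 950MHz effective memory clock gives 30.4GB/s.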

Saving GeForce FX

Once Nvidia had nursed its wounds from the reaction to its disappointing GPU and comical cooler, the company had to make the best of the situation, as the GeForce FX architecture was all it had. A year later, it would be back on top with its superb GeForce 6 series GPUs, but in the meantime, it had to make GeForce FX work.

The 5800 Ultra was largely abandoned by the industry, and Nvidia brought out the GeForce FX 5900 series of GPUs. Like the ATi competition, these cards went back to using DDR memory, but with a 256-bit wide interface. In the case of the top-end FX 5950 Ultra, the memory was clocked at 475MHz (950MHz effective), with a 475MHz GPU clock.

Nvidia also tweaked the cooler design. The FX Flow model was largely abandoned at this point, with many card manufacturers using their own traditional cooler designs for the GeForce FX 5900 XT and 5900 Ultra, squeezing cards into a single-slot design with a single cooling fan.

Nvidia GeForce FX benchmarks

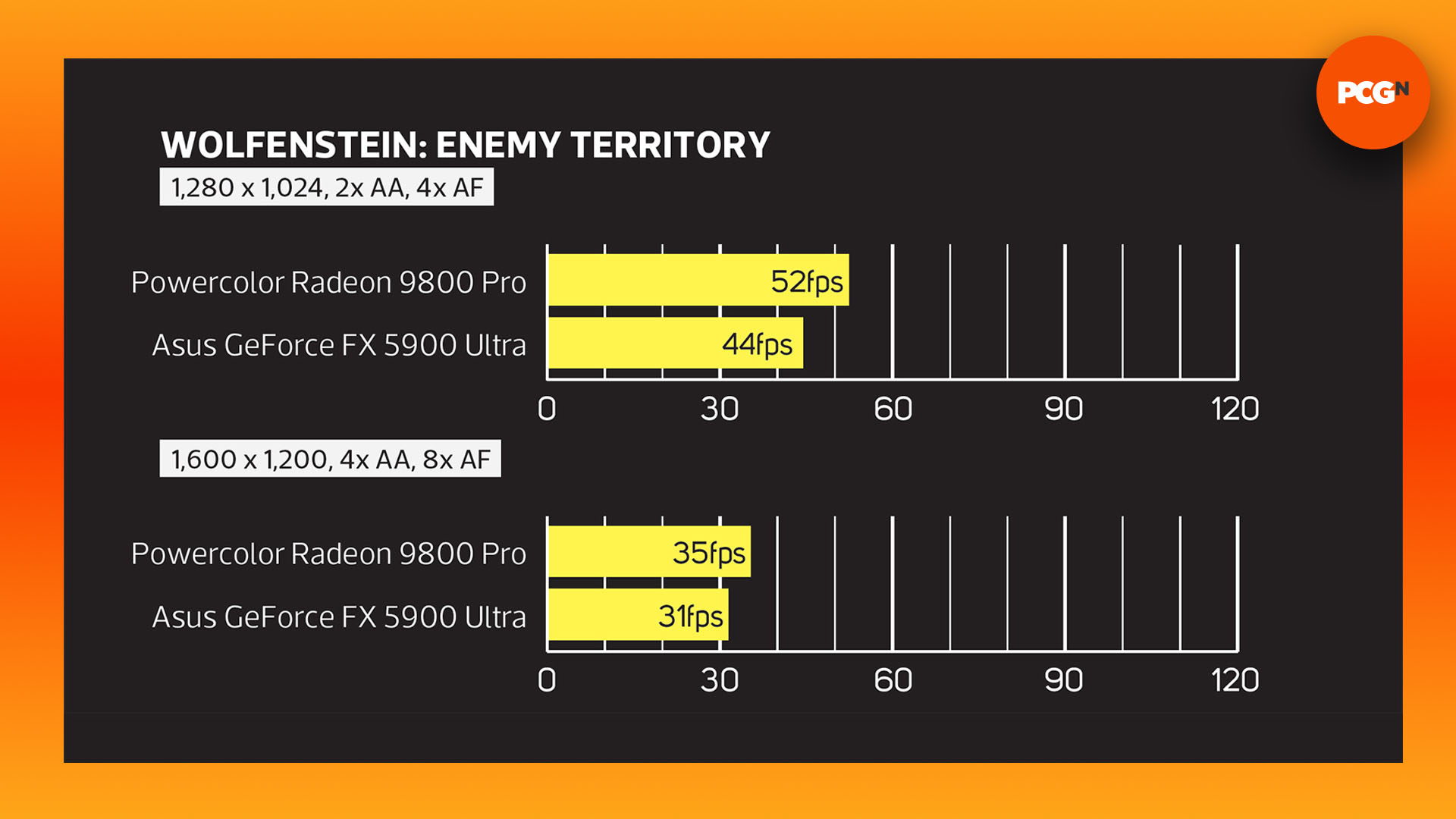

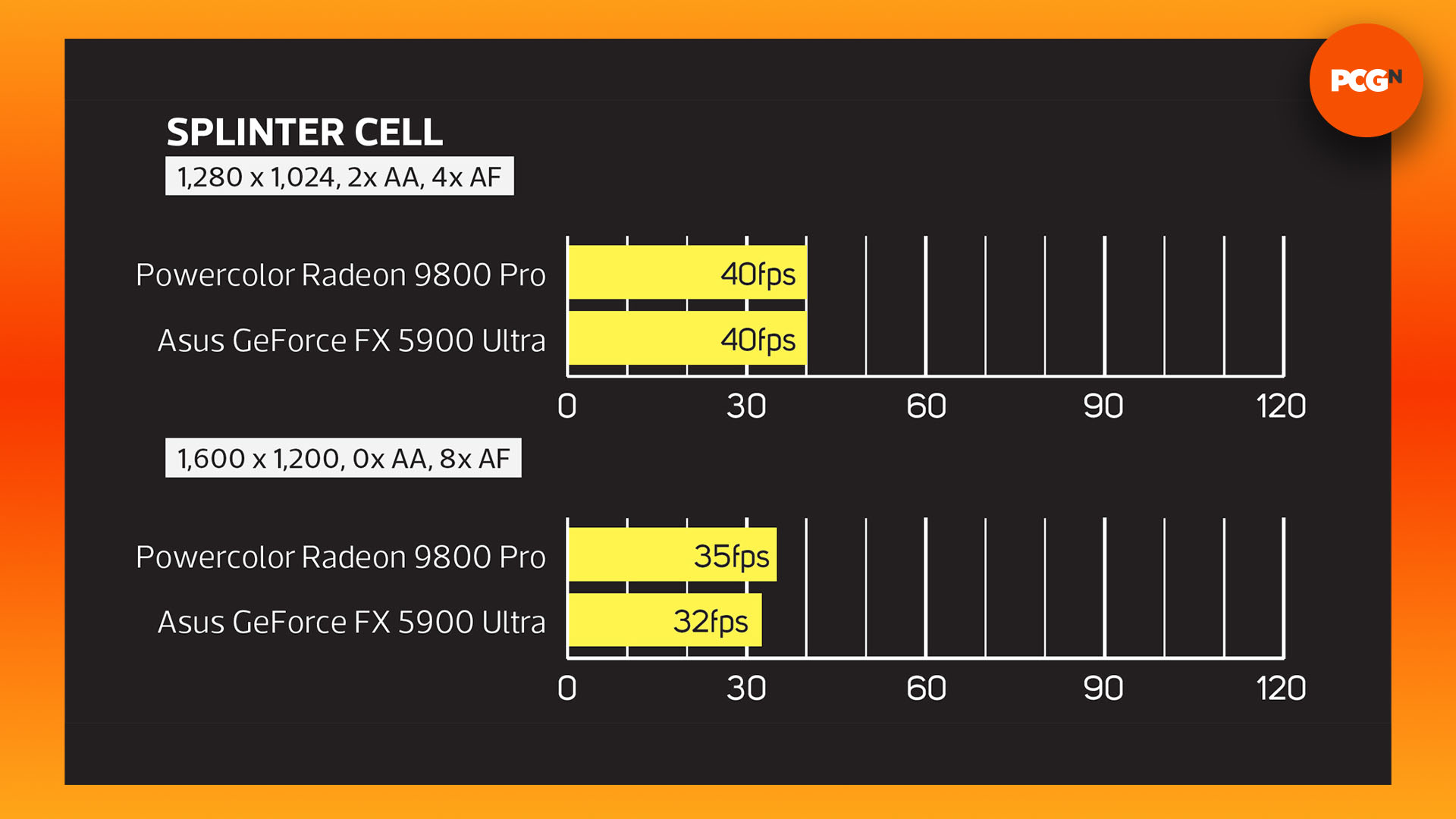

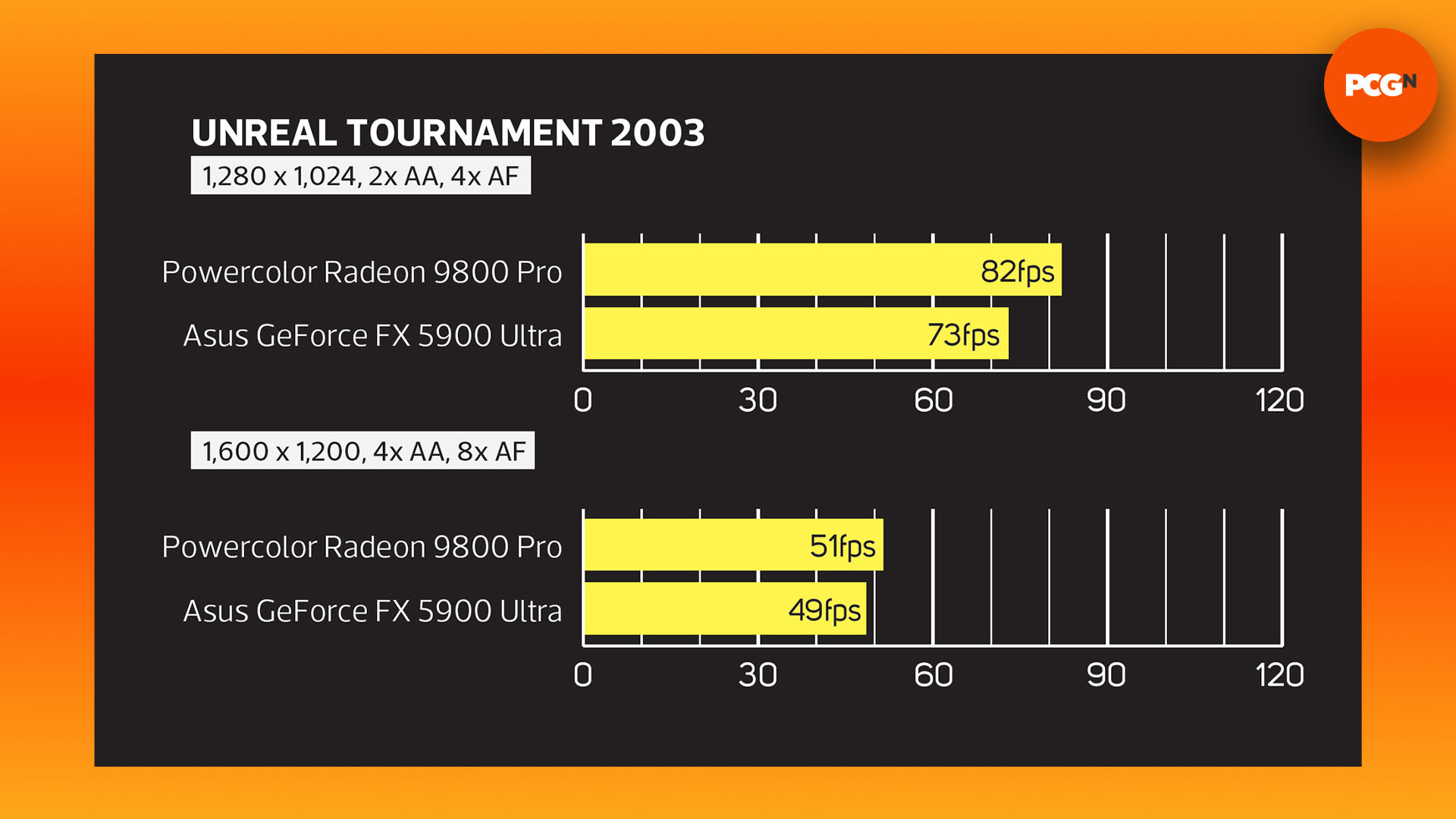

This wasn’t enough to save GeForce FX from embarrassment though. In the very first issue of Custom PC, when it launched as a print magazine in the UK, we conducted a Labs test of Radeon 9800 Pro and GeForce FX 5900 Ultra cards, which at that time sat in the $430-$530 price bracket, with the Nvidia cards generally costing more.

We’ve republished a selection of the results here for a laugh and, as you can see, the Radeon 9800 Pro was either much faster than, or the same speed as, the GeForce FX 5900 Ultra in our tests at the time.

This pattern was generally repeated across the board, with the GeForce FX 5200 and 5600 failing to match the performance of ATi’s equivalents at the time. There was no getting away from the hard fact that GeForce FX stank. Even with a wider memory interface and a quieter cooler, the cards simply couldn’t keep pace with the comparably priced competition from ATi.

Bizarrely, GeForce FX 5800 Ultra cards now fetch decent prices among collectors, thanks to their rarity and the story that surrounded them at the time. If you were unlucky enough to buy one and still have it lurking in a drawer, it might be worth sticking it on eBay.

We hope you’ve enjoyed this personal retrospective about GeForce FX. For more articles about the PC’s vintage history, check out our retro tech page, as well as our guide on how to build a retro gaming PC, where we take you through the trials and tribulations of working with archaic PC gear.

We would like to say a big thank you to Dmitriy ‘H_Rush’ who very kindly shared these fantastic photos of a Gainward GeForce FX 5800 Ultra with us for this feature. You can visit vccollect to see more of his extensive graphics card collection.