Nvidia has been working on a prototype multi-die AI accelerator chip called RC 18. The 36-module chip, developed by Nvidia Research, is currently being evaluated in the lab, and its highly scalable, interconnected design could act as a precursor to high-end, multi-GPU graphics cards.

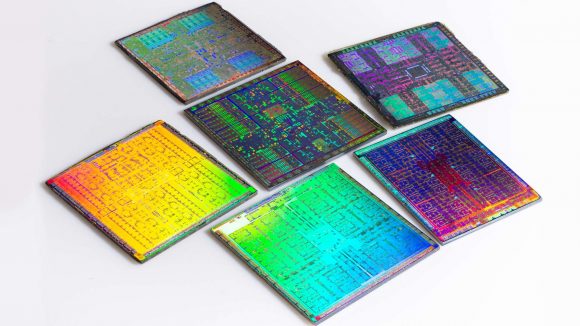

The current prototype for Nvidia’s multi-die solution was taped out on TSMC’s 16nm process – the same one utilised in most 10-series GeForce graphics cards. Its rather small footprint is made up of 36 tiny modules, each comprising 16 PEs (Processing Elements), a basic RISC-V Rocket CPU core, buffer memory, and eight GRS (Ground-Referenced Signaling) links totalling 100GB/s of I/O bandwidth per chip. All in all, the chip is fitted with some 87 million transistors.
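To put those per-module figures in context, here is a rough Python sketch that adds them up across the package. The 36 modules, 16 PEs, and eight GRS links come from the description above; the 6×6 grid layout and the `mesh_neighbours` helper are assumptions made purely for illustration, not confirmed details of Nvidia's design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Module:
    """Per-module figures quoted in the article."""
    pes: int = 16        # Processing Elements per module
    grs_links: int = 8   # Ground-Referenced Signaling links per module


MODULES = 36  # modules in the RC 18 package, per the article


def totals(modules: int = MODULES, module: Module = Module()) -> dict:
    """Sum the per-module figures over the whole package."""
    return {
        "pes": modules * module.pes,
        "grs_links": modules * module.grs_links,
    }


def mesh_neighbours(index: int, side: int = 6) -> list:
    """Neighbours of a module in a hypothetical side x side mesh.

    ASSUMPTION: the 36 modules sit in a 6x6 grid with links only to the
    modules directly above, below, left, and right.
    """
    row, col = divmod(index, side)
    candidates = [(row - 1, col), (row + 1, col), (row, col - 1), (row, col + 1)]
    return [r * side + c for r, c in candidates if 0 <= r < side and 0 <= c < side]


if __name__ == "__main__":
    print(totals())             # total PEs and GRS links in the package
    print(mesh_neighbours(0))   # a corner module has two neighbours
    print(mesh_neighbours(7))   # an interior module has four
```

Under those assumptions the package works out to 576 PEs in total, and an interior module in the grid talks directly to four neighbours while a corner module talks to two.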

“We have demonstrated a prototype for research as an experiment to make deep learning scalable,” Bill Dally, head of Nvidia Research, says at GTC 2019 (via PC Watch). “This is basically a tape-out recently manufactured and is currently being evaluated. We are working on RC 18. It means ‘the research chip of 2018.’ It is an accelerator chip for deep learning, which can be scaled up from a very small size, and it has 16 PEs on one small die.”

RC 18 is built to accelerate deep learning inference, which in itself isn’t entirely fascinating for us gaming lot. Nevertheless, many of the interconnecting technologies that make this MCM (multi-chip module) possible could be antecedent to future GPU architectures.

“This chip has the advantage of being able to demonstrate many technologies,” Dally continues. “One of the technologies is a scalable deep learning architecture. The other is a very efficient die-to-die on an organic substrate transmission technology.”

Some of the technologies found within RC 18 that could one day become pivotal in larger high-performance MCM GPUs include: mesh networks, low-latency signalling with GRS, Object-Oriented High-Level Synthesis (OOHLS) and Globally Asynchronous Locally Synchronous (GALS).

This experiment is also an attempt by Nvidia to reduce the design time involved in producing its high-performance GPU dies.

Nvidia currently offers its own interconnect fabric technology, NVLink. And it also recently purchased data centre networking specialists Mellanox, further cementing its place in the big data networking world.

Nvidia’s ideal end goal for RC 18 would be an array of MCMs, each package internally and externally interconnected for massive parallelism and compute power. Essentially, future systems would entail multiple interconnected GPUs fitted onto a PCB alongside other similar packages in a grid, all connected together via a high-bandwidth fabric mesh.

And Nvidia isn’t the first to dream of a massively scalable GPU architecture. AMD Navi was once believed to utilise an MCM approach, too. Then-Radeon boss Raja Koduri had hinted that future GPUs could one day be woven together through AMD’s Infinity Fabric interconnect – the same one used across its CPU business.

This was later shot down by David Wang, senior VP of Radeon Technologies Group. He said that, while the MCM approach was something the company was looking into, it has “yet to conclude that this is something that can be used for traditional gaming graphics type of application.”

“To some extent you’re talking about doing CrossFire on a single package,” Wang says. “The challenge is that unless we make it invisible to the ISVs [independent software vendors] you’re going to see the same sort of reluctance.”

But Wang hasn’t ruled anything out yet. And with Nvidia now touting its own primordial research into MCM GPUs, it’s very much looking like the scalable, multi-die approach will one day gain traction within the mainstream. But it might take just a little longer before we gamers can get our hands on 32-GPU discrete add-in cards.