I mean, dialogue trees are fine, I guess. Your character asks a question, the NPC gives a response, you learn what you need to, and for a moment it almost looks like a conversation. But they’re hardly perfect. Playing through Mass Effect: Andromeda, I was reminded how interrogative they make me feel: “YES BUT WHY ARE YOU OUT HERE STUDYING THE REMNANT?”

Think how many of the PC’s best RPGs could be improved by natural dialogue.

They can also make conversations feel like passive lore-dumps. Real people don’t tell you their life story at your first meeting, and thank God, because it can get pretty boring. I wish an NPC would turn around and tell me “the mating rituals of my culture are none of your business. And no, we won’t bang, okay?”

These are among the problems that Spirit AI are trying to fix with their Character Engine. It aims to achieve something that still feels like a distant dream to many players: natural-seeming conversations with AI characters, in which you use text or even voice chat to speak, and they answer as a real person would, creating their dialogue on the fly.

Dialogue without the training wheels

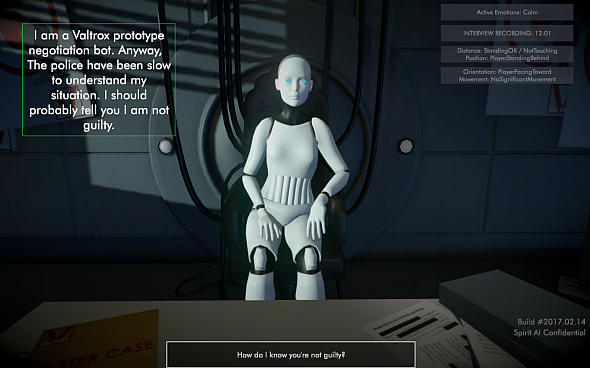

Sounds too good to be true, doesn’t it? Spirit AI’s CCO, Dr Mitu Khandaker, swung by to show us how it works. She opens a laptop and starts a demo: we’re sitting across a table from a robot who vaguely resembles a crash test dummy. A murder has happened, the robot is a suspect, and it’s up to us to interrogate her.

“This is a really hard design problem,” Khandaker says. “It’s not a normal conversation where someone may be trying to help you. Here, they’ve been accused, so there may be things they feel they can’t tell you, but there are other things they want to tell you. We need to help the player understand how to interrogate [this NPC]. You can type anything, you can say anything, and that overwhelms people a little bit.”

Presented with a text box and the promise that the robot will answer anything I ask, I understand what she means (though I’m later told that Spirit AI are working on contextually generated dialogue options, for those who aren’t ready to freely engage an AI in a casual chat). I let Khandaker take the lead, and she types: “Who are you?”

“I am a prototype negotiation bot,” she answers in a pleasantly breezy Scottish brogue, before moving on to protest her innocence. “Anyway, the police have been slow to understand my situation. You should likely know I am innocent of this killing.” There are jarring shifts in pitch, like a train station announcement or Stephen Hawking’s voicebox, but there it is: dynamic voice, generated in response to a player’s typed question.

Keen to test the robot, and having watched my share of cop shows, I type “where were you at the time of the murder?”

“It’s hard to be sure where time of death is located,” she answers, nonsensically.

“I knew this would happen,” Khandaker says. “Basically, there’s two sources of information that she uses: There’s her script, which the narrative designer will author. Those are the types of things she says, and how she says them, in response to what kinds of things. Then there’s also her knowledge model, which is her mental model of the world, and how entities relate to each other.

“For this demo, we’ve given her the idea: Here are the locations, and here’s the concept of a time of death. So she knows time of death is a concept and that it needs a location, but not what that location is. That’s a little bug to do with the incompleteness of the information.”
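The bug Khandaker describes is easier to picture with a toy model. The sketch below is purely illustrative and assumes nothing about how Character Engine actually stores knowledge: every name in it (`KnowledgeModel`, `concept_slots`, `answer_where`) is hypothetical. It shows a character who knows that "time of death" is a concept that needs a location, but who has never been told which location, so the gap leaks into her answer.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class KnowledgeModel:
    """Hypothetical NPC knowledge model: known entities plus concept relations."""
    locations: set[str] = field(default_factory=set)
    # concept -> the kind of value it relates to, e.g. "time of death" -> "location"
    concept_slots: dict[str, str] = field(default_factory=dict)
    # (concept, slot) -> authored value, if the writer supplied one
    facts: dict[tuple[str, str], str] = field(default_factory=dict)

    def lookup(self, concept: str, slot: str) -> Optional[str]:
        """Return the authored value for a concept's slot, or None if it is missing."""
        value = self.facts.get((concept, slot))
        # A location only counts if it is one of the locations the model knows about.
        if slot == "location" and value not in self.locations:
            return None
        return value


def answer_where(model: KnowledgeModel, concept: str) -> str:
    """Answer a 'where' question about a concept (hypothetical templating)."""
    slot = model.concept_slots.get(concept)
    if slot is None:
        return "I don't know what you mean."
    value = model.lookup(concept, slot)
    if value is None:
        # The concept is known to need a location, but none was authored,
        # so the answer talks about the concept instead of a concrete place.
        return f"It's hard to be sure where {concept} is located."
    return f"That would be the {value}."


if __name__ == "__main__":
    model = KnowledgeModel(
        locations={"lab", "server room"},
        concept_slots={"time of death": "location"},
    )
    print(answer_where(model, "time of death"))  # the incomplete-information bug
    model.facts[("time of death", "location")] = "server room"
    print(answer_where(model, "time of death"))  # fixed by authoring the missing fact
```

In this sketch, filling in the one missing fact is enough to turn the nonsensical answer into a sensible one, which matches Khandaker's point that the problem lies in incomplete information rather than in the dialogue itself.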

I ask how much work it took to get this NPC to its current state. Khandaker says one writer worked on it “not full-time, for a couple of weeks.” Making the writing tool quick and intuitive to use is one of their key priorities: “We’re designing it to look like you’re writing a screenplay, so if you’re a writer, it’s something you’d be familiar with.”

Tools and agnosticism

Khandaker shows me how the Character Engine can even encompass emotional states. “You’ll notice she’s very calm right now,” Khandaker says, “and this also plays into the way she’ll respond. Our system can output not only her dialogue, but her emotional state, and as a developer, you can plug that into whatever.”

Back in the demo, Khandaker leans in over the desk and asks bluntly: “Are you guilty?”

The robot recoils slightly and moves her face around to avoid eye contact, answering: “Where the murder is concerned, the person who wielded the blunt instrument is guilty – though there might be an accessory. I am unaware of such a person.”

Again, it’s a pretty impressive simulation of an evasive, dynamically generated answer, with body language conveying nervousness. Khandaker types “tell us about the victim,” and the robot relaxes back to her original pose.

Spirit AI don’t make animations, so the ones in the demo are pretty rudimentary, but the potential applications are clear: a developer with proper animation tools could map animations onto the emotional states output by Character Engine, causing NPCs to grimace, laugh, dance, and so on in response to the player’s dialogue or body language. Obviously, triggering game states, like causing a fight to break out, would be a piece of cake.
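To show what "plugging in" an emotional state might look like on the developer's side, here is a minimal sketch. It assumes the engine hands back a line of dialogue plus a labelled emotion and an intensity from 0.0 to 1.0. That output shape, the emotion names, the animation clip names and the `start_fight` event are all hypothetical, not Spirit AI's actual API.

```python
from dataclasses import dataclass
from enum import Enum


class Emotion(Enum):
    CALM = "calm"
    NERVOUS = "nervous"
    ANGRY = "angry"


@dataclass(frozen=True)
class NpcOutput:
    """Hypothetical shape of one engine response: a line plus an emotional state."""
    line: str
    emotion: Emotion
    intensity: float  # assumed scale: 0.0 (barely) to 1.0 (extremely)


# Developer-side mapping from emotional state to an animation clip (clip names invented).
ANIMATION_FOR = {
    Emotion.CALM: "idle_relaxed",
    Emotion.NERVOUS: "avert_gaze",
    Emotion.ANGRY: "clench_fists",
}


def react(output: NpcOutput, fight_threshold: float = 0.8) -> tuple[str, list[str]]:
    """Pick an animation clip and any game-state events for one NPC response."""
    clip = ANIMATION_FOR[output.emotion]
    events: list[str] = []
    # Very angry NPCs also fire a game-state event, e.g. starting a fight.
    if output.emotion is Emotion.ANGRY and output.intensity >= fight_threshold:
        events.append("start_fight")
    return clip, events


if __name__ == "__main__":
    evasive = NpcOutput("I am unaware of such a person.", Emotion.NERVOUS, 0.6)
    print(react(evasive))
```

Keeping the mapping as plain data on the game's side mirrors the division of labour described here: the engine reports how the character feels, and the developer decides what that looks like on screen.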

This is one of many areas in which Character Engine needs to integrate with other software. Others include vocal generation tools, if the client wants their NPC to speak, and voice recognition, if they want the player to be able to speak. Both technologies have advanced to the point that, pulled together around Character Engine, they put us on the verge of those believable, organic conversations.

As anyone who’s used Google’s voice search will know, we’ve come a long way from the first generation of speech-to-text software. As for vocal generation, Khandaker says “there’s a lot of research labs all over the world getting to super human-sounding voices.” She cites another Google project, WaveNet, a speech-synthesis model developed by Google’s DeepMind lab, as one example (DeepMind’s blog post on WaveNet is well worth a read).

How to integrate them all? “What we are doing is remaining agnostic, so [our clients] can use our system and plug in whatever [other tools] make sense.”

On vocal generation specifically, Khandaker mentions that they’ve been working with a partner whose technology is not only able to generate digital dialogue on the fly, but can make it sound like a specific person, like a celebrity. “There is a certain process where you get them into a recording studio, and there are certain phonemes that you have to say, and certain combinations of sounds, to build up a computational version of [their] voice.” So, depending on how much Brad Pitt wants to charge for his dignity, a developer could hire him to go “aahh,” “oohh,” “eee,” into a microphone for a day, and presto: the software can use those sounds to digitally generate his voice saying anything. Adding a bit of emotion to each sound even enables the software to generate dialogue with varied tones.

Timeframes and authoring

Khandaker concludes by showing me Character Engine’s authoring tool. Lines of dialogue are nested within one another, with dollar signs placed against certain words and phrases. These denote the ‘tags’ that underpin an NPC’s knowledge model. Input from the player (“tell me about your argument with the victim,” for instance) triggers the tags associated with it, essentially telling the AI what the player is asking about. By tracking previously triggered tags, the AI also knows what has already been discussed. This enables a range of responses: if the NPC suspects you won’t catch them out, for instance, they might try to lie.
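Here is a rough sketch of the tag idea, with loud caveats. The `$`-prefixed tag names echo the dollar-sign notation in the authoring tool, but the keyword matching, the tag list, the replies and the `Conversation` class are all invented for illustration. Character Engine's real input parsing is surely more sophisticated than matching words against a set. The sketch shows two things from the paragraph above: player input triggers tags, and a history of triggered tags lets the character decide whether it can get away with a lie.

```python
import re
from dataclasses import dataclass, field

# Hypothetical script: each $tag lists words a player might use to raise it.
TAG_TRIGGERS = {
    "$argument": {"argument", "argue", "argued", "quarrel"},
    "$victim": {"victim", "deceased"},
    "$evidence": {"wrench", "fingerprints", "evidence"},
}


@dataclass
class Conversation:
    # Tags triggered on each turn, oldest first.
    history: list[set[str]] = field(default_factory=list)

    def discussed(self, tag: str) -> bool:
        """True if the tag was triggered on any turn so far."""
        return any(tag in turn for turn in self.history)

    def respond(self, player_input: str) -> str:
        words = set(re.findall(r"[a-z']+", player_input.lower()))
        tags = {tag for tag, triggers in TAG_TRIGGERS.items() if words & triggers}
        self.history.append(tags)
        if "$argument" in tags:
            # Lie unless the player has raised the evidence (this turn or earlier).
            if self.discussed("$evidence"):
                return "Fine. We argued that night, but I did not hurt anyone."
            return "Argument? We never argued."
        if "$evidence" in tags:
            return "That wrench proves nothing."
        if "$victim" in tags:
            return "The victim was my supervisor."
        return "I am not sure what you are asking."


if __name__ == "__main__":
    chat = Conversation()
    for question in [
        "Tell me about your argument with the victim",
        "What about the wrench?",
        "So, the argument?",
    ]:
        print(f"> {question}\n{chat.respond(question)}")
```

In this toy version, the same question about the argument gets a lie the first time and a confession once the wrench has come up, because the answer depends on the conversation's tag history as well as on the current input.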

“There’s this interplay between game state information, both in the input and the output,” Khandaker says. The possibilities for detective sims are obvious, but it doesn’t take much imagination to see how this could revolutionise practically any game with NPC interactions. We’re looking at organic conversations, with spoken or written player input and dynamically generated vocal output, capable of tracking changes in game state.

We may see more demos from Spirit AI’s clients within a year or so, but how long until a triple-A game releases with this technology? “It could be a few years yet, because of their project cycle,” Khandaker says. “People we’re talking to about MMO-type things… that could roll out sooner. We’re working with such a huge variety of different partners. We’re [also] working with things like media studios doing VR experiences; again, the dream of naturalistic interaction. We’ve got all kinds of different projects at different stages. It’s exciting.”

That, it is.