Google has just wrapped up the first day of its annual developer event, Google I/O, which briefly touched on the tech behind Google Stadia. Although the Stadia session filled just an hour of the three-day event, some of Google's senior staff used that time to outline how the company is reducing latency to imperceptible levels and building a robust streamer to take on local gaming PCs.

With Google making major strides into cloud gaming, the question on everyone's lips has been whether the experience will be worthwhile, and whether it can stand direct comparison with local hardware. In an attempt to persuade devs that Stadia is the place to be for upcoming games, two of Google's lead engineers, Rob McCool and Guru Somadder, along with project manager Khaled Abdel Rahman, elaborated on the technological foundations of the platform.

And it seems that, since the streaming platform's announcement at GDC, Google wants to clear up some popular notions about streaming latency that may or may not be true. Most notably: how much latency a datacentre adds to the whole equation.

“It takes less time for a packet from a datacentre to reach you than it takes for a brain’s command to reach your fingers,” Somadder says.


But while Google is quick to point out that data travelling at the speed of light isn't an issue in itself, any extra time spent shifting and processing packets adds latency to a gamer's experience.
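To put Somadder's point into numbers, here's a back-of-the-envelope sketch of propagation delay. It assumes light in optical fibre travels at roughly two-thirds of its speed in a vacuum (a common rule of thumb, not a figure Google gave), and the 500 km distance and 20 ms processing time in the usage lines are made up purely for illustration.

```python
# Speed of light in a vacuum, expressed in kilometres per millisecond.
C_KM_PER_MS = 299_792.458 / 1000

# Assumption: a refractive index of ~1.47 for optical fibre, so light in
# fibre moves at roughly 68% of c. Rule of thumb, not a Google figure.
FIBRE_REFRACTIVE_INDEX = 1.47


def propagation_delay_ms(distance_km: float) -> float:
    """One-way time for a signal to cross `distance_km` of fibre."""
    return distance_km / (C_KM_PER_MS / FIBRE_REFRACTIVE_INDEX)


def round_trip_ms(distance_km: float, processing_ms: float) -> float:
    """Two propagation legs plus time spent queuing and processing packets."""
    return 2 * propagation_delay_ms(distance_km) + processing_ms


if __name__ == "__main__":
    # Hypothetical example: a datacentre 500 km away, 20 ms of processing.
    print(f"one-way: {propagation_delay_ms(500):.2f} ms")       # one-way: 2.45 ms
    print(f"round trip: {round_trip_ms(500, 20):.2f} ms")       # round trip: 24.90 ms
```

In this toy model the light itself accounts for under 5 ms of the round trip; everything else is the shifting and processing Google says it's working to trim.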

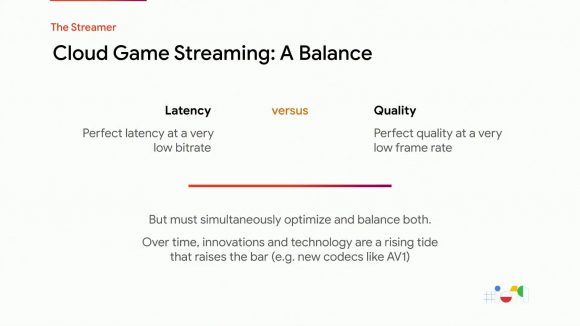

According to Google, the success of game streaming comes down to codecs, encoders, and decoders capable of minimising delay, along with ruthlessly striking the right balance between quality and latency. After all, a player's device doesn't only need to receive data; it also needs to send inputs back to the server rapidly so the game can respond to them.

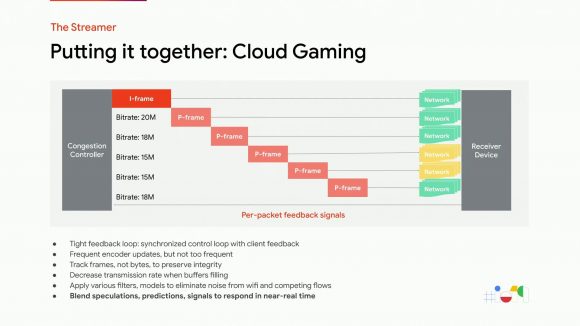

“The streamer is a delivery engine that’s purpose built for game streaming,” McCool says. “It’s a program that runs alongside the game. It takes information from the clients as well as from Google’s content delivery network, and it makes real time decisions about how to maximise quality while keeping latency imperceptible.

"There's no single optimisation to make the perfect cloud gaming streaming technology. It's always balancing a series of trade-offs. So if you improve one thing, oftentimes it comes at the expense of another thing. So the solution is not an elegant algorithm that you can describe with a few lines of Greek symbols, maybe get a master's thesis out of it, it looks very different."

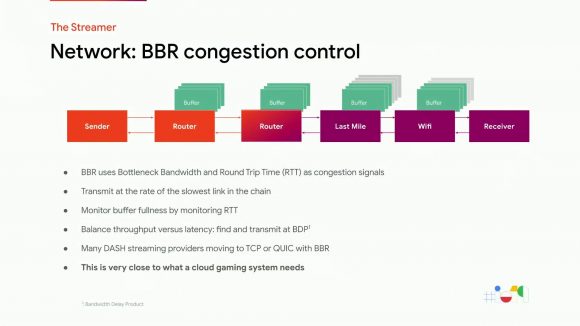

Data has to travel through many steps to reach your home, and Google believes it needs to change the way this data is relayed across the web to combat buffering. So with Stadia it's employing its own gaming-optimised twist on the Bottleneck Bandwidth and Round-trip propagation time (or simply BBR) congestion control algorithm.

BBR is Google's own baby, and is reportedly already "very close" to what Google Stadia needs. It's an algorithm that tries to head off congestion and packet loss before they happen, especially in the last mile, where a problem known as "bufferbloat" is common. That's when packets of all-important data stack up too deep, clogging buffers and causing needlessly long queues. That queuing alone would usually be a death sentence for game streaming.
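For readers who want to see the idea in miniature, here's a simplified sketch of the two quantities involved. The first function shows why bufferbloat hurts: a packet joining a full buffer waits for everything ahead of it to drain. The `BbrModel` class is a toy version of BBR's published path model, which tracks the maximum recent delivery rate (bottleneck bandwidth) and the minimum recent round-trip time, then keeps data in flight near a small multiple of their product. Real BBR uses time-based windows (roughly ten round trips for bandwidth and ten seconds for RTT) plus pacing and probing phases; the sample-count window here is a simplification, and none of it reflects Stadia's gaming-specific variant, which Google didn't detail.

```python
from collections import deque


def queuing_delay_ms(queued_bytes: int, link_rate_mbps: float) -> float:
    """Wait for a packet that joins the back of a queue of `queued_bytes`."""
    return queued_bytes * 8 / (link_rate_mbps * 1_000_000) * 1000


class BbrModel:
    """Toy BBR-style path model using sample-count windows (a simplification)."""

    def __init__(self, window: int = 10):
        self.rate_samples = deque(maxlen=window)  # delivery rates, bits/s
        self.rtt_samples = deque(maxlen=window)   # round-trip times, ms

    def on_ack(self, delivery_rate_bps: float, rtt_ms: float) -> None:
        self.rate_samples.append(delivery_rate_bps)
        self.rtt_samples.append(rtt_ms)

    def bottleneck_bw_bps(self) -> float:
        # Windowed max: the path can't deliver faster than its bottleneck,
        # so the highest recent rate is the best estimate of it.
        return max(self.rate_samples)

    def rt_prop_ms(self) -> float:
        # Windowed min: the lowest RTT seen approximates an empty-queue path.
        return min(self.rtt_samples)

    def bdp_bytes(self) -> float:
        # Bandwidth-delay product: enough data to fill the pipe, no more.
        # bits/s * ms / 1000 = bits; / 8 = bytes.
        return self.bottleneck_bw_bps() * self.rt_prop_ms() / 8000

    def inflight_cap_bytes(self, cwnd_gain: float = 2.0) -> float:
        # Cap in-flight data at a small multiple of the BDP.
        return cwnd_gain * self.bdp_bytes()


if __name__ == "__main__":
    # Hypothetical: 1 MB sitting in a router buffer on a 20 Mbps link.
    print(f"{queuing_delay_ms(1_000_000, 20):.0f} ms of queuing")  # 400 ms of queuing
```

Keeping in-flight data close to the bandwidth-delay product, rather than filling whatever buffer the router offers, is what stops that 400 ms queue from forming in the first place.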

“So on the receiver side, we use WebRTC extensions provided by our team in Sweden to disable buffering and display things as soon as they arrive. For our application, controlling congestion to keep the buffering as low as possible is vital.”
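The trade-off behind switching buffering off can be shown with a small, generic example. A receiver with a fixed jitter buffer holds early frames back so they're displayed at an even cadence; displaying on arrival removes that wait but lets network jitter show through as uneven frame pacing. This is a hypothetical model with made-up arrival times and a 16 ms frame interval chosen for round numbers; it doesn't describe Google's WebRTC extensions, whose internals weren't covered in the talk.

```python
def display_times(arrivals_ms, frame_interval_ms, playout_delay_ms=None):
    """When each frame hits the screen.

    playout_delay_ms=None models display-on-arrival (buffering disabled).
    Otherwise frame i is scheduled for first_arrival + playout_delay
    + i * frame_interval, or shown on arrival if it turns up late.
    """
    if playout_delay_ms is None:
        return list(arrivals_ms)
    start = arrivals_ms[0] + playout_delay_ms
    return [max(arrival, start + i * frame_interval_ms)
            for i, arrival in enumerate(arrivals_ms)]


def waits_and_gaps(arrivals_ms, displays_ms):
    """Per-frame time spent buffered, and intervals between displayed frames."""
    waits = [d - a for a, d in zip(arrivals_ms, displays_ms)]
    gaps = [b - a for a, b in zip(displays_ms, displays_ms[1:])]
    return waits, gaps


if __name__ == "__main__":
    arrivals = [0, 16, 40, 50, 66]  # hypothetical, with jitter on the third frame
    for delay in (None, 10):
        shown = display_times(arrivals, 16, delay)
        print(delay, waits_and_gaps(arrivals, shown))
```

With these numbers, display-on-arrival adds no wait at all but shows two frames 24 ms and then 10 ms apart, while a 10 ms buffer keeps a steady 16 ms cadence at the cost of up to 10 ms of extra delay per frame. For game streaming, Google is evidently choosing the former.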

With an optimised BBR algorithm and a frame-by-frame feedback loop, Google can avoid some of the pitfalls associated with video streaming and laggy web streaming services.

"The controller is constantly adjusting a target bitrate based on per-packet feedback signals that arrive from the receiver device," McCool continues. "The controller uses a variety of filters, models, and other tools and then synthesises them into a model of the available bandwidth and chooses the target bitrate for the video encoder.
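McCool didn't spell out the controller's filters or models, so the following is only a minimal sketch of the general shape he describes: per-packet feedback goes in, a target bitrate for the encoder comes out. It assumes each feedback record carries a packet's size plus send and receive timestamps, smooths the measured delivery rate with an exponentially weighted moving average, and treats a rise in one-way delay above the lowest value seen as a sign that a queue is building. The class names, probe-up and back-off factors, thresholds and bitrate limits are all invented for illustration; this is a simple delay-based rule, not BBR and not Stadia's actual controller.

```python
from dataclasses import dataclass


@dataclass
class PacketFeedback:
    size_bytes: int
    send_ms: float  # sender clock
    recv_ms: float  # receiver clock (the offset cancels out in delay deltas)


class BitrateController:
    """Toy delay-based controller; all constants are illustrative."""

    def __init__(self, initial_kbps=5_000.0, min_kbps=2_000.0,
                 max_kbps=35_000.0, alpha=0.2, queue_threshold_ms=10.0):
        self.target_kbps = initial_kbps
        self.min_kbps, self.max_kbps = min_kbps, max_kbps
        self.alpha = alpha                      # EWMA smoothing weight
        self.queue_threshold_ms = queue_threshold_ms
        self.rate_kbps = None                   # smoothed delivery rate
        self.base_delay_ms = float("inf")       # lowest one-way delay seen

    def on_feedback(self, batch):
        """Update and return the encoder target from a batch of feedback."""
        if len(batch) < 2:
            return self.target_kbps
        span_ms = batch[-1].recv_ms - batch[0].recv_ms
        if span_ms <= 0:
            return self.target_kbps
        # Delivery rate: bits received after the first packet over the span.
        # Bits per millisecond is the same as kilobits per second.
        sample_kbps = sum(p.size_bytes for p in batch[1:]) * 8 / span_ms
        if self.rate_kbps is None:
            self.rate_kbps = sample_kbps
        else:
            self.rate_kbps = (self.alpha * sample_kbps
                              + (1 - self.alpha) * self.rate_kbps)

        # Queuing estimate: latest one-way delay minus the minimum observed.
        delays = [p.recv_ms - p.send_ms for p in batch]
        self.base_delay_ms = min(self.base_delay_ms, *delays)
        queuing_ms = delays[-1] - self.base_delay_ms

        if queuing_ms > self.queue_threshold_ms:
            # Queue building: drop below what the network actually delivered.
            self.target_kbps = min(self.target_kbps, self.rate_kbps) * 0.85
        else:
            # No queue: probe upward, but not far beyond measured throughput.
            self.target_kbps = min(self.target_kbps * 1.08, self.rate_kbps * 1.5)

        # Keep the target within the encoder's supported range.
        self.target_kbps = max(self.min_kbps, min(self.max_kbps, self.target_kbps))
        return self.target_kbps
```

The design choice worth noting is that the controller reacts to rising delay rather than waiting for packet loss, since by the time packets are dropped a latency-sensitive stream has usually already stuttered.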

"Google is experienced designing algorithms modelling extremely complex situations and code, and working at scale with millisecond latency puts us in an ideal position to enable this experience for players. We tune at the millisecond, and sometimes microsecond, level and make timely informed choices to keep latency imperceptible while maximising quality. We blend many different signals, models, feedback, active learning, sensors, and a tight feedback loop to produce a precisely tuned experience."

Google is also holding out for wider adoption of the very latest codecs, including VP9 and AV1, which it hopes will improve game streaming as a whole, leading to lower network requirements and higher quality.
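Some quick arithmetic shows why codec efficiency matters so much. Uncompressed 1080p video at 60 frames per second, assuming 8-bit 4:2:0 sampling (12 bits per pixel), runs to roughly 1.5 Gbps, so any practical stream relies on heavy compression, and every gain in compression efficiency translates into either lower bandwidth needs or better quality at the same bitrate. The 25 Mbps stream figure below is a hypothetical input, not a Stadia requirement.

```python
def raw_bitrate_mbps(width: int, height: int, fps: float,
                     bits_per_pixel: float = 12) -> float:
    """Uncompressed video bitrate. 12 bpp assumes 8-bit 4:2:0 sampling."""
    return width * height * fps * bits_per_pixel / 1e6


def compression_ratio(width, height, fps, stream_mbps, bits_per_pixel=12):
    """How many times smaller the stream must be than the raw video."""
    return raw_bitrate_mbps(width, height, fps, bits_per_pixel) / stream_mbps


def stream_bits_per_pixel(width, height, fps, stream_mbps):
    """Average bits the encoder can spend on each displayed pixel."""
    return stream_mbps * 1e6 / (width * height * fps)


if __name__ == "__main__":
    # Hypothetical 1080p60 stream squeezed into 25 Mbps.
    print(f"raw: {raw_bitrate_mbps(1920, 1080, 60):.0f} Mbps")              # raw: 1493 Mbps
    print(f"ratio: {compression_ratio(1920, 1080, 60, 25):.0f}:1")          # ratio: 60:1
    print(f"budget: {stream_bits_per_pixel(1920, 1080, 60, 25):.2f} bpp")   # budget: 0.20 bpp
```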

And in an attempt to lure more developers to the service, Stadia will also offer a dev-friendly "Playability Toolkit". This suite of APIs lets devs tweak and monitor encoders, and track real-time metrics, device information, and latency frame by frame.
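Google didn't publish the Playability Toolkit's interface during the talk, so nothing below is its API. As a hypothetical sketch of what frame-by-frame latency tracking involves, each frame collects a timestamp at a few pipeline stages, and the tracker reports per-stage durations plus a percentile across frames. The stage names and classes are invented, and the sketch assumes all timestamps come from one synchronised clock, which a real server-to-client pipeline would have to arrange.

```python
import math
from dataclasses import dataclass, field

# Hypothetical pipeline stages, in order; not names from the Toolkit.
STAGES = ("input", "rendered", "encoded", "decoded", "displayed")


@dataclass
class FrameTimeline:
    frame_id: int
    stamps_ms: dict = field(default_factory=dict)

    def mark(self, stage: str, t_ms: float) -> None:
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage}")
        self.stamps_ms[stage] = t_ms

    def stage_durations(self) -> dict:
        """Time between each pair of consecutive stages that were recorded."""
        return {f"{a}->{b}": self.stamps_ms[b] - self.stamps_ms[a]
                for a, b in zip(STAGES, STAGES[1:])
                if a in self.stamps_ms and b in self.stamps_ms}

    def total_ms(self) -> float:
        """Input-to-display latency for this frame."""
        return self.stamps_ms["displayed"] - self.stamps_ms["input"]


def percentile(values, p):
    """Nearest-rank percentile, p in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


if __name__ == "__main__":
    frame = FrameTimeline(frame_id=1)
    for stage, t in zip(STAGES, (0, 8, 12, 40, 45)):  # made-up timestamps
        frame.mark(stage, t)
    print(frame.stage_durations(), frame.total_ms())
```

Tracking the tail (say, the 95th percentile) rather than the average matters here, because a handful of slow frames is what a player actually notices.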

While we still have plenty of questions about Google Stadia's business model, the Google I/O talk has shed a little more light on how Google intends to use its considerable weight and global web dominance to tailor its cloud gaming service to players.

If only the talk had been a few hours longer, maybe then we'd have more of the answers we crave. And I'd know for sure whether the future of gaming PC hardware really is in jeopardy.