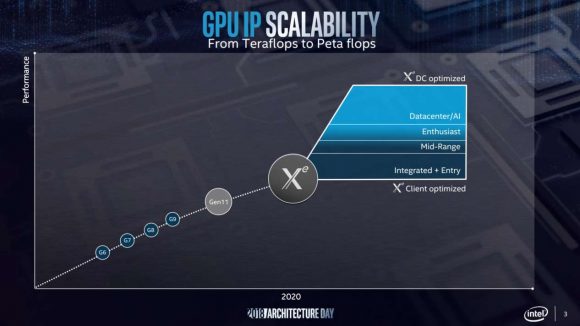

The Intel Xe graphics architecture is set to become the base for a broad range of GPUs, from data centre/AI all the way through enthusiast gaming and down into entry-level integrated graphics components. Intel has, though, remained relatively tight-lipped about what the Xe architecture will be capable of, what its makeup looks like, and what the exciting new features will be.

But at the FMX conference in Stuttgart, Germany, Intel’s Jim Jeffers has announced that the Intel Xe GPUs will contain hardware-based ray tracing acceleration support for use in the data centre environment.

“I’m pleased to share today,” says Jeffers, “that the Intel Xe architecture roadmap for data centre optimized rendering includes ray tracing hardware acceleration support for the Intel Rendering Framework family of API’s and libraries.”

This means that Intel Xe will provide extra performance acceleration when render farms are tasked with creating ray tracing effects for feature films and the like. This is, however, different from the sort of real-time hybrid rasterisation/ray tracing features Nvidia has baked into its own Turing GPUs for use in games and in our home PCs; the hardware acceleration Intel is talking about today is purely about offline rendering for animation and CGI.

That’s partly because Jeffers, Intel senior principal engineer and senior director of advanced rendering and visualisation, was making the Intel Xe announcement at FMX. It’s a conference mostly focused on effects and animation, with only a little games-based goodness.

“Today’s available GPUs have architecture challenges,” says Jeffers, “like memory size limitations and performance derived from years of honing for less sophisticated, ‘embarrassingly parallel’ rasterized graphics use models. Studios continue to reach for maximum realism with complex physics processing for cloth, fluids, hair and more, plus modeling the physics of light with ray tracing.

“These algorithms benefit from mixed parallel and scalar computing while requiring ever growing memory footprints. The best solutions will include a holistic platform design where computational tasks are distributed to the most appropriate processing resources.”

So what studios really need is a platform that can combine both a high-end CPU and GPU to model all the different tasks render farms need to tackle… maybe a mega-core Xeon and a fancy pants new Intel Xe pro-level graphics card perhaps?

At the moment Intel can only really deliver render farms using a whole lotta Xeon power to do the large-scale rendering movie studios require, especially for computationally intensive features such as ray tracing. Doing all that on a processor is slow, so what you need is a graphics card thrown into the mix, or a few hundred of them, to make that process go a bit quicker – something Nvidia and AMD have been saying for ages.

From the launch of Xe next year, however, Intel will have both CPU and GPU components. With hardware-based acceleration announced for Intel’s own rendering frameworks, studios should see a pretty sizeable cut in render times… if they upgrade to an Xe/Xeon combo.

But what impact will that have on the discrete Intel Xe graphics cards we’ll have in our machines in the next 12 months or so? This announcement does not say that Xe will definitively have hardware support for real-time ray tracing at launch next year, but the fact that professional-level Xe GPUs are going to contain some hardware-based acceleration for such algorithms does suggest it’s a consideration.

Given that it’s the Microsoft DirectX Raytracing API, not some proprietary Nvidia tech, that’s actually responsible for the hybrid rasterisation model used for the real-time ray tracing effects being introduced into PC games today, it’s entirely possible that some of that pro-level Xe hardware will be utilised in the client space too.

If 2020’s top-end, gaming-focused Intel Xe GPU has that professional hardware support left in it, then we could have a contender to Nvidia’s RTX platform from a rather unlikely source.