There’s an exciting new graphics card memory technology on the horizon that could see huge gains in one of the most important aspects of GPUs: memory bandwidth. The new approach, which pairs storage-class memory (SCM) with a DRAM cache, can deliver peak performance gains of up to 12.5x compared to high-bandwidth memory (HBM), while also reducing energy consumption.

HBM is a technology that AMD used in some of its previous best graphics card contenders, most notably the AMD Vega lineup of GPUs such as the AMD Radeon VII. It sought to massively increase memory bandwidth – a feat it very much achieved – by stacking the memory chips on top of each other and housing them much closer to the GPU than conventional GDDR. However, it was costly to produce, limited in overall capacity, and also difficult to cool.

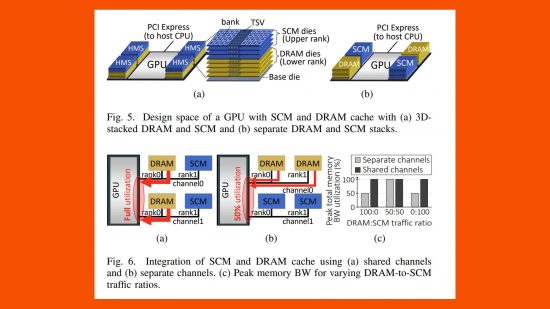

This new research, then, looks to improve upon the general idea of HBM by allowing for much larger amounts of memory, thanks to the use of a ‘storage class memory’ (SCM) rather than DRAM for the bulk of the storage, with a smaller portion of DRAM then used as a read/write cache for that storage. This arrangement is analogous to how many of the best SSD models work, with a small portion of DRAM used as a read/write cache in front of the slower NAND.
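To make the cache idea concrete, here is a minimal Python sketch of a small, fast cache sitting in front of a large, slow store. It is a toy model under stated assumptions, not the design from the paper: the `CachedMemory` class, its `cache_lines` parameter, and the simple least-recently-used (LRU) write-back policy are all hypothetical choices made for illustration, whereas the research relies on its own hardware tagging scheme.

```python
from collections import OrderedDict


class CachedMemory:
    """Toy model: a small LRU write-back cache ("DRAM") in front of a
    large, slow backing store ("SCM"). Hypothetical, for illustration only."""

    def __init__(self, cache_lines):
        self.cache_lines = cache_lines
        self.cache = OrderedDict()  # address -> (value, dirty flag)
        self.scm = {}               # slow backing store
        self.hits = 0
        self.misses = 0
        self.scm_writes = 0

    def _install(self, addr, value, dirty):
        # Refresh an existing line, or evict the least recently used one
        # to make room. Dirty lines are written back to SCM on eviction.
        if addr in self.cache:
            self.cache.move_to_end(addr)
        elif len(self.cache) >= self.cache_lines:
            old_addr, (old_value, old_dirty) = self.cache.popitem(last=False)
            if old_dirty:
                self.scm[old_addr] = old_value
                self.scm_writes += 1
        self.cache[addr] = (value, dirty)

    def read(self, addr):
        if addr in self.cache:
            self.hits += 1
            value, dirty = self.cache[addr]
        else:
            self.misses += 1
            value, dirty = self.scm.get(addr, 0), False
        self._install(addr, value, dirty)
        return value

    def write(self, addr, value):
        if addr in self.cache:
            self.hits += 1
        else:
            self.misses += 1
        self._install(addr, value, True)


mem = CachedMemory(cache_lines=2)
mem.write(0, 10)  # miss: line cached and marked dirty
mem.write(1, 11)  # miss: cache is now full
mem.read(0)       # hit: address 0 becomes most recently used
mem.write(2, 12)  # miss: evicts address 1, which is written back to SCM
print(mem.hits, mem.misses, mem.scm_writes)
```

In this sketch, writes only reach the slow store when a dirty line is evicted, which is why a well-behaved cache can cut traffic to the slower memory.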

The type of SCM used here is a variation on Intel’s 3D XPoint memory, which debuted a few years ago as an intriguing middle ground between faster but volatile (loses its data without power) DRAM, and slower but non-volatile (retains data without power) NAND. It was briefly sold in both RAM stick and SSD formats, but it never quite found a more permanent role in the PC ecosystem.

New refinements in this style of SCM, though, have led this research team to believe that a version of this type of memory could be viable for use with GPUs. The advantage of this memory is that it’s cheaper to produce than DRAM, and runs with much lower power consumption. That means graphics cards could come with huge memory capacities without breaking the bank or melting themselves.

Crucial to making the SCM work, though, is providing the incredibly high bandwidth and low latency needed for graphics memory to be useful. That’s where the DRAM cache and clever hardware-based memory tagging system devised by the research team from POSTECH and Soongsil University come into play.

The details of the research are highly complex, involving data-flow models to predict typical memory access patterns, for instance. The upshot, though, is that the team, using a modified Nvidia A100 GPU, could see significant performance advantages to its new system compared to using conventional HBM (which itself already offers much higher bandwidth than standard GDDR configurations). The paper summarises the results by saying:

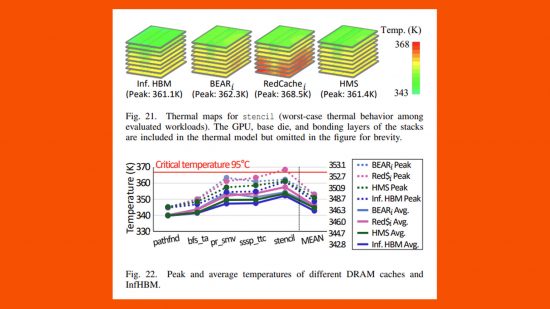

“Compared to HBM, the HMS (heterogeneous memory stack) improves performance by up to 12.5× (2.9× overall) and reduces energy by up to 89.3% (48.1% overall). Compared to prior works, we reduce DRAM cache probe and SCM write traffic by 91-93% and 57-75%, respectively.”

It’s an interesting development, although it comes with a couple of major caveats regarding gaming GPUs. For a start, the research is based on data center workloads, where memory usage patterns and overall system requirements are quite different to gaming workloads. Plus, this research is just that: it’s in the research phase. Even if it were immediately identified as the clear path to next-gen performance, we’d probably be looking at a couple of generations of cards passing before we’d see this new technology integrated into new graphics cards.

Still, it’s always exciting to see figures pointing to a 12.5x (or even 2.9x) performance increase and an 89.3% energy reduction, as they hint that there are still many ways the graphics card and wider PC tech market can continue to eke out more performance, despite it being ever more difficult for chip producers to make smaller and smaller chips.