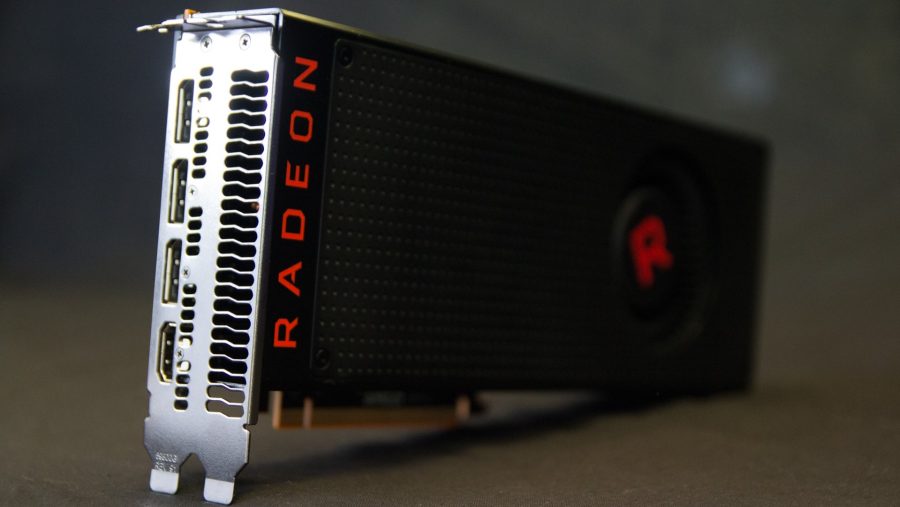

If you want the finest Radeon graphics card right now, then AMD’s RX Vega is where it’s at and, as the video card nerds we are, we’ve got all the latest Vega info right here.

The Vega architecture has been around in consumer form for close to a year now, and it’s fair to say it’s had a bumpy start to life. But with its sterling showing in the Raven Ridge APUs, new mobile cards on the way, and Vega set to appear as AMD’s first 7nm product, the architecture’s certainly getting around a bit. At launch, however, it was rather a silicon contradiction – the first cards managed to simultaneously disappoint and completely sell out.

But it embodies that classic AMD ‘fine wine’ approach where it only gets better over time. And Vega genuinely has – with the most modern game launches it has shown some performance improvements to where it’s almost competitive. Damning with faint praise there…

Vital stats

AMD Vega release date

The latest AMD Radeon cards were finally launched on August 14, 2017.

AMD Vega pricing and availability

The RX Vega 64 and RX Vega 56 were both hard to track down for a decent price long after launch, but just when it looked like stock was coming back so did the mining boom. Now an RX Vega 64 is $550 (£529) and the RX Vega 56 $480 (£462)… at best.

AMD Vega architecture

AMD called it the biggest architectural change in years, with the advanced Infinity Fabric interconnect and a new memory and caching system.

AMD Vega performance

The biggest disappointment was that, after all the hype, the top AMD RX Vega 64 couldn’t really keep up with the Nvidia GTX 1080 it was aiming its sights on.

AMD Vega reviews

- AMD Radeon RX Vega 64 review: a high-end GPU waiting on a future it’s trying to create

- AMD Radeon RX Vega 56 review: even if you could buy one, you probably shouldn’t… yet

AMD Vega news

- AMD’s RX Vega 56 finally gets miniaturised – but can it cope under pressure?

- AMD’s first 7nm product is a 7nm Vega card and is launching this year

- AMD’s Vega and Ryzen refreshes are chasing efficiency gains with a new 12nm node

- It will cost you less to buy a GTX 1080 Ti today than pre-order an Asus STRIX RX Vega 64

- AMD’s AIBs can’t afford to release custom RX Vega 64 cards and we can’t afford to buy one

- Beware low-flying pigs: Intel and AMD are working together on a mobile chip

- XFX tease AMD RX Vega design, but no launch date for patient customers

- AMD’s Vega isn’t finished yet, Vega 11 goes into production to replace Polaris

- Who’s going to make custom AMD RX Vega 64 cards? Short answer: maybe no-one

- Vega’s innards aren’t game-ready, consumer-optimised versions are coming

- AMD Vega is now so good for crypto-mining it’ll probably be sold out forever

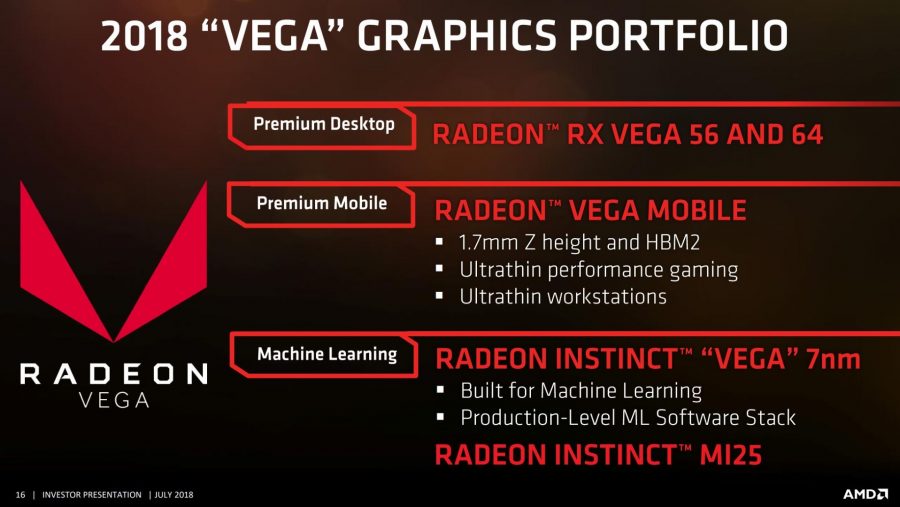

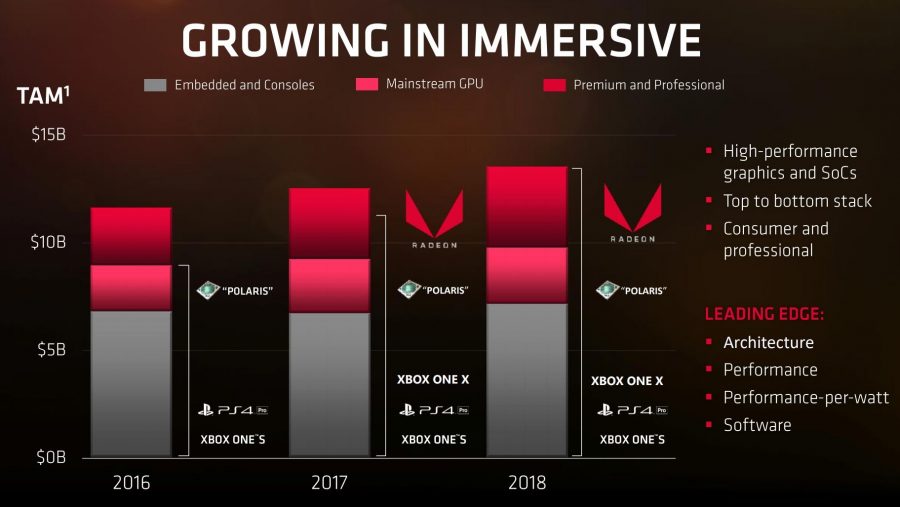

A couple of slides have come out of a recent AMD investor presentation highlighting the role, or non-role, of the AMD Vega GPU architecture throughout 2018.

Naively we were hoping for a broader roll-out of the AMD Vega tech, but this year AMD is sticking with the RX Vega 64 and RX Vega 56 cards at the top of the stack, with the only new GPUs arriving in the mobile and machine learning spaces.

That means our Radeon gaming graphics cards will only have a pair of Vega chips available to them, with the rest of 2018’s AMD cards still running the old Polaris GPU tech of the RX 480 and RX 580 fame. Given the sizeable lead Nvidia already has in the gaming graphics card market, that means AMD has essentially ceded 2018 to Team GeForce. Fingers crossed Navi turns out as well as we hope, and not as bad as is feared…

You will, however, be getting Vega graphics in processor form, and not just from the AMD Raven Ridge APUs either. Intel has licensed Vega GPU silicon to form the graphics portion of the Intel Kaby Lake G processors, mixing Radeon and Core architectures together for the first time. It’s an alliance predicted by Nostradamus himself as a portent of the end times.

There will also be mobile Vega cards, as mentioned by Dr. Lisa Su herself at the pre-CES event in Las Vegas this January. But any hopes we might have had for a Vega GPU refresh, along the same lines as the one the inaugural Ryzen CPU range is getting with the AMD Ryzen 2 Pinnacle Ridge update this year, have seemingly been dashed.

Originally we had been hoping that AMD would be creating a 12nm refresh of the Vega 56 and Vega 64 cards, sporting the same level of efficiency improvements and higher clockspeeds the Ryzen 2 refresh is offering. Unfortunately the Vega refresh has been removed from the new AMD roadmaps we were shown at their CES Tech Day in January.

Instead AMD is bringing a 7nm version of the Vega architecture to market at the end of the year – likely unveiling at SIGGRAPH in August – in the guise of a new Radeon Instinct card designed specifically for the rigours of machine learning. There is a slight chance that release, like the original Vega Instinct cards, will eventually bleed into a consumer-facing product launch, but maybe not this year.

So, it looks like the next graphics component we get from AMD is going to be based on the new AMD Navi design, sometime in 2019.

AMD Vega release date

The gaming variants of the AMD Vega architecture released on August 14, 2017, and there was much rejoicing. Mostly because it was my mum’s birthday, but also because it represented the first big Radeon GPU redesign in an age.

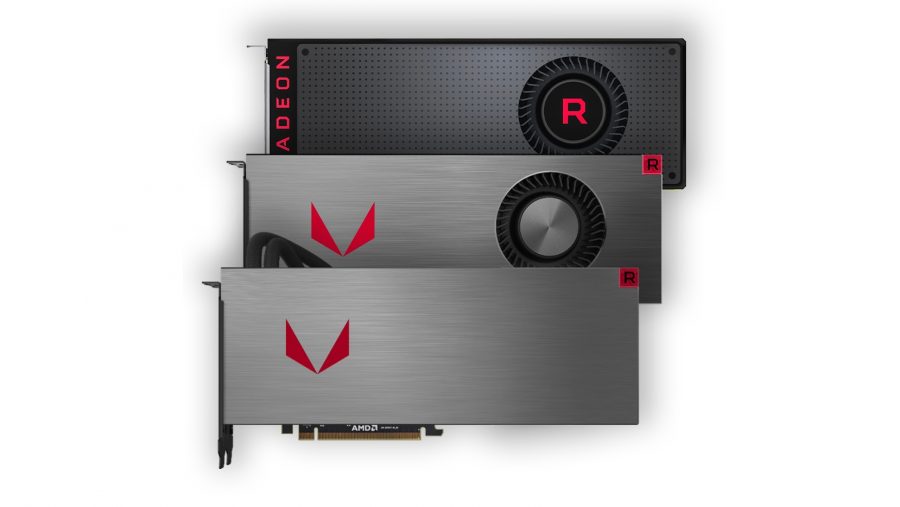

AMD released the Radeon RX Vega 64 and Radeon RX Vega 56 cards, and we’ve not seen anything else to fill out the stack in discrete graphics card form since then. And realistically it seems unlikely that we’re going to. The costly HBM2 VRAM is potentially complicated to switch out for GDDR5 or GDDR6, and too expensive to use in more mainstream versions.

There was an even more costly liquid-cooled version of the Vega 64, but that doesn’t seem to have survived past launch. We’ve also not been treated to an official Vega Nano, but there is expected to be a Powercolor RX Vega 56 ‘Nano Edition’ appearing at Computex this year.

AMD Vega pricing and availability

We’re starting to see more RX Vega cards becoming available now, but thanks to the cryptomining boom prices are still well over the original MSRP for the cards even though it’s now actually possible to find them on sale. That’s despite the fact the crypto market has slowed down with ASICs more able to cater to the previously GPU-only currencies.

Still, prices are better than they were…

AMD Radeon RX Vega 64 (MSRP $499 | £450) – $550 | £529

AMD Radeon RX Vega 56 (MSRP $399 | £350) – $480 | £462
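To put a number on "well over the original MSRP", here is a quick back-of-the-envelope sketch in Python. The prices are the ones listed above; the `PRICES` table and the `premium_percent` helper are just illustrative names, not anything official.

```python
# Prices as listed in the article: (MSRP, current street price).
PRICES = {
    "RX Vega 64": {"USD": (499, 550), "GBP": (450, 529)},
    "RX Vega 56": {"USD": (399, 480), "GBP": (350, 462)},
}


def premium_percent(msrp: float, street: float) -> float:
    """Return how far the street price sits above MSRP, as a percentage."""
    return (street - msrp) / msrp * 100


if __name__ == "__main__":
    for card, currencies in PRICES.items():
        for currency, (msrp, street) in currencies.items():
            premium = premium_percent(msrp, street)
            print(f"{card} ({currency}): {premium:.1f}% over MSRP")
```

On these figures it's the cheaper RX Vega 56 that carries the bigger premium, in both dollars and pounds.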

AMD Vega architecture

The new AMD Vega architecture represents what AMD called the most sweeping architectural change its engineers had made to the GPU design in five years. That was when the first Graphics Core Next chips hit the market, and this fifth generation of the GCN architecture marks the start of a new GPU era for the Radeon team.

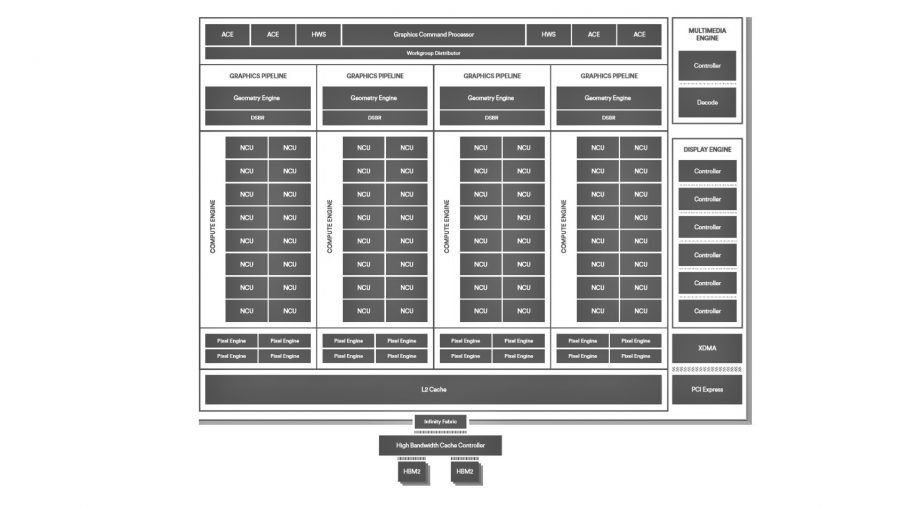

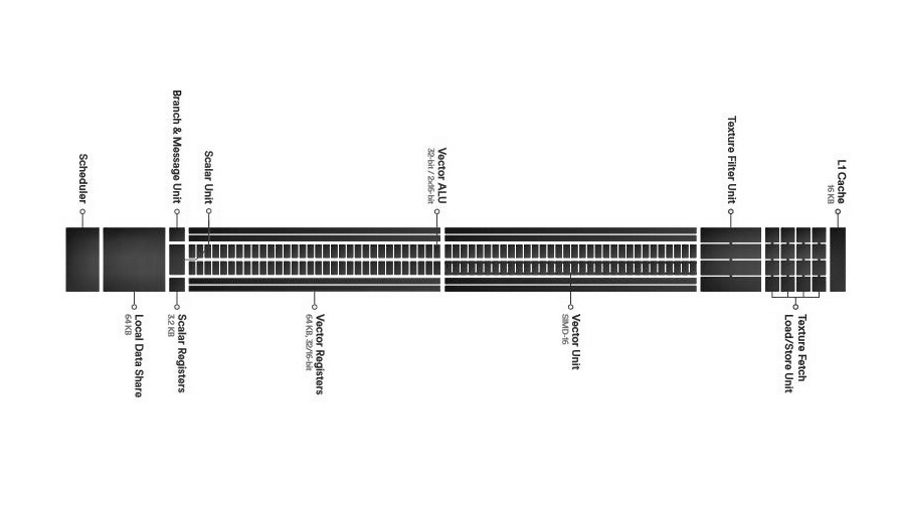

Fundamental to the Vega architecture, represented here by the inaugural Vega 10 GPU, is the hunt for higher graphics card clockspeeds. The very building blocks of the Vega 10, the compute units, have been redesigned from the ground up, almost literally. These next-generation compute units (NCU) have had their floorplans completely reworked to optimise and shorten the physical wiring of the connections inside them.

They also include high-speed, mini memory SRAMs, stolen from the Zen CPUs and optimised for use on a GPU. But that’s not the only way the graphics engineers have benefitted from a resurgent CPU design team; they’ve also nabbed the high-performance Infinity Fabric interconnect, which enables the discrete quad-core modules, used in Ryzen and Ryzen Threadripper processors, to talk to each other.

Vega uses the Infinity Fabric to connect the GPU core itself to the rest of the graphics logic in the package. The video acceleration blocks, the PCIe controller and the advanced memory controller, among others, are all connected via this high-speed interface. It also has its own clock frequency, which means it’s not affected by the dynamic scaling and high frequency of the GPU clock itself.

This introduction of Infinity Fabric support for all the different logic blocks makes for a very modular approach to the Vega architecture and that in turn means it will, in theory, be easy for AMD to make a host of different Vega configurations. It also means future GPU and APU designs (think the Ryzen/Vega-powered Raven Ridge) can incorporate pretty much any element of Vega they want to with minimal effort.

The NCUs still contain the same 64 individual GCN cores inside them as the original Graphics Core Next design, with the Vega 10 GPU then capable of housing up to 4,096 of these li’l stream processors. But, with the higher core clockspeeds, and other architectural improvements of Vega, they’re able to offer far greater performance than any previous GCN-based chip.
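The core-count arithmetic is simple enough to sketch in a few lines of Python. The 64-cores-per-NCU figure comes from the text above; the assumption that the "64" and "56" in the card names are the number of enabled NCUs isn't spelled out in this piece, and the function names are purely illustrative.

```python
CORES_PER_NCU = 64  # stream processors per compute unit, per the article


def stream_processors(ncu_count: int) -> int:
    """Total stream processors for a chip with ncu_count enabled NCUs."""
    return ncu_count * CORES_PER_NCU


def ncus_needed(total_cores: int) -> int:
    """Number of NCUs that add up to total_cores stream processors."""
    if total_cores % CORES_PER_NCU:
        raise ValueError("core count must be a multiple of 64")
    return total_cores // CORES_PER_NCU


if __name__ == "__main__":
    # Assumption: the card names reflect the enabled NCU count.
    for name, ncus in (("RX Vega 64", 64), ("RX Vega 56", 56)):
        print(f"{name}: {ncus} NCUs x {CORES_PER_NCU} = {stream_processors(ncus)} cores")
```

So the full-fat 4,096-core Vega 10 works out at 64 NCUs, and a 56-NCU cut-down version would land on 3,584.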

The new NCUs are also capable of utilising a feature AMD is calling Rapid Packed Math, and which I’m calling Rapid Packed Maths, or RPM to avoid any trouble with our US cousins. RPM essentially allows you to do two 16-bit mathematical operations for the price of one 32-bit operation, at the cost of some accuracy. Given many of today’s calculations, especially in the gaming space, don’t actually need 32-bit floating point precision (FP32), you can get away with using 16-bit data types. Game features, such as lighting and HDR, can use FP16 calculations, and with RPM Vega can support both FP16 and FP32 calculations as and when they’re necessary.
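The trade-off is easy to see on a CPU, even if this is only a rough illustration and nothing like how the GPU hardware actually schedules the work. The sketch below uses Python's standard `struct` module to round values to FP16 and FP32, and to show that two FP16 values fit in the same four bytes as one FP32. The helper names are made up for the example.

```python
import struct


def to_fp16(value: float) -> float:
    """Round a Python float to the nearest FP16 value and back."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def to_fp32(value: float) -> float:
    """Round a Python float to the nearest FP32 value and back."""
    return struct.unpack("<f", struct.pack("<f", value))[0]


def pack_fp16_pair(a: float, b: float) -> bytes:
    """Pack two FP16 values into one 32-bit (four-byte) slot."""
    return struct.pack("<2e", a, b)


def unpack_fp16_pair(packed: bytes) -> tuple:
    """Recover the two FP16 values from a packed 32-bit slot."""
    return struct.unpack("<2e", packed)


if __name__ == "__main__":
    packed = pack_fp16_pair(0.1, 0.2)
    print(f"two FP16 values occupy {len(packed)} bytes")
    print(f"one FP32 value occupies {struct.calcsize('<f')} bytes")
    print(f"0.1 as FP32: {to_fp32(0.1)!r}")
    print(f"0.1 as FP16: {to_fp16(0.1)!r}")
```

The FP16 version of 0.1 is noticeably further from the real thing than the FP32 one, which is the accuracy you give up in exchange for squeezing two values into each 32-bit slot.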

The Far Cry 5 developers came out in support of RPM, and made FC5 very Vega-friendly. 3D technical lead, Steve McAuley, went on record stating: “there’s been many occasions recently where I’ve been optimising shaders thinking that I really wish I had rapid packed math available to me right now. [It] means the game will run at a faster, higher frame rate, and a more stable frame rate as well, which will be great for gamers.”

The Vega architecture also incorporates a new geometry engine, capable of supporting both standard DirectX-based rendering as well as the ability to use newer, more efficient rendering pipelines through primitive shader support. The revised pixel engine has been updated to cope with today’s high-resolution, high refresh rate displays, and AMD have doubled the on-die L2 cache available to the GPU. They have also freed the entire cache to be accessible by all the different logic blocks of the Vega 10 chip, and that’s because of the brand new memory setup of Vega.

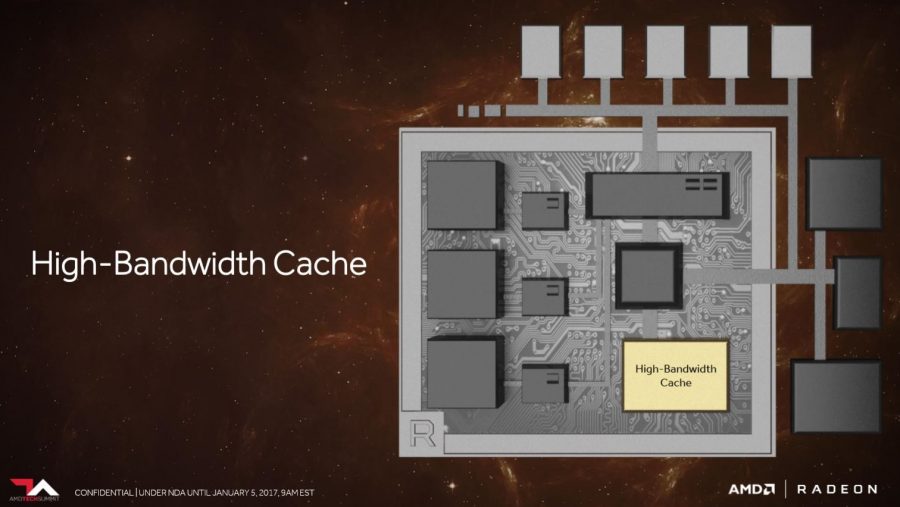

AMD’s Vega architecture uses the second generation of high-bandwidth memory (HBM2) from Hynix. HBM2 has higher data rates, and larger capacities, compared with the first generation used in AMD’s R9 Fury X cards. It can now come in stacks of up to 8GB, with a pair of them sitting right next to the GPU die on the same package, making the memory both more efficient and smaller in footprint than standard graphics card designs. And that could make it a far more tantalising option for notebook GPUs.

Directly connected with the HBM2 is Vega’s new high-bandwidth cache and high-bandwidth cache controller (HBCC). Ostensibly this is likely to be of greater use, at least in the short term, on the professional side of the graphics industry, but the HBCC’s ability to use a portion of the PC’s system memory as video memory should bear gaming fruit in the future. The idea is that games will see the extended pool as one large chunk of video memory, so if tomorrow’s open-world games start to require more than the Vega 64’s 8GB you can chuck it some of your PC’s own memory to compensate for any shortfall.

“You are no longer limited by the amount of graphics memory you have on the chip,” AMD’s Scott Wasson explains. “It’s only limited by the amount of memory or storage you attach to your system.”

The Vega architecture is capable of scaling right up to a maximum of 512TB as the virtual address space available to the graphics silicon. Nobody tell Chris Roberts or we won’t see Star Citizen this side of the 22nd century.
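For a sense of scale, here's a quick Python sketch of what a 512TB address space implies. It assumes binary units (1TB taken as 2^40 bytes, 1GB as 2^30 bytes), which isn't stated above, and the function names are illustrative.

```python
GIB = 2**30  # assumption: 1GB treated as 2^30 bytes
TIB = 2**40  # assumption: 1TB treated as 2^40 bytes


def address_bits(space_bytes: int) -> int:
    """Smallest number of address bits that can index every byte in the space."""
    return (space_bytes - 1).bit_length()


def vram_multiples(space_bytes: int, vram_bytes: int) -> int:
    """How many times the on-card memory fits into the virtual address space."""
    return space_bytes // vram_bytes


if __name__ == "__main__":
    virtual_space = 512 * TIB
    print(f"address bits needed: {address_bits(virtual_space)}")
    print(f"multiples of 8GB VRAM: {vram_multiples(virtual_space, 8 * GIB)}")
```

Under those assumptions, 512TB needs 49 address bits and is 65,536 times the size of the Vega 64's 8GB of on-card memory.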

AMD Vega performance

The thinking behind Vega seems to have been to put the RX Vega 64 up against the GTX 1080 with the RX Vega 56 going head-to-head with the GTX 1070. Unfortunately, with most games on the market today, the AMD cards are always that little bit behind the Nvidia GPUs. It’s only when you start looking at the more modern DirectX 12 and Vulkan APIs that the Vega architecture starts to show its worth.

It’s this bifurcated performance – poor in legacy games and impressive with modern software – that makes the Vega cards difficult to recommend right now. AMD’s classic ‘fine wine’ approach may mean that when the architecture matures, and devs start to use the impressive feature set to its fullest, the AMD cards might be able to push past their Nvidia rivals.

But that’s scant comfort to anyone wanting class-leading performance for every game in their Steam libraries, or even just the games they’re playing at the moment. There is a little light at the end of the overclocking tunnel however, with tweakers uncovering increased capabilities of the card, unlocked by undervolting the GPU. But that’s a whole other story…