Thank you, Dr. Lisa Su. Thank you for maintaining a little dignity and not letting yourself get dragged into the latest round of tit-for-tat Moore’s Law exultations and obituaries. Is it alive, is it dead? Who knows, we’ll just leave Schrödinger’s box closed and keep arguing about it for the next decade.

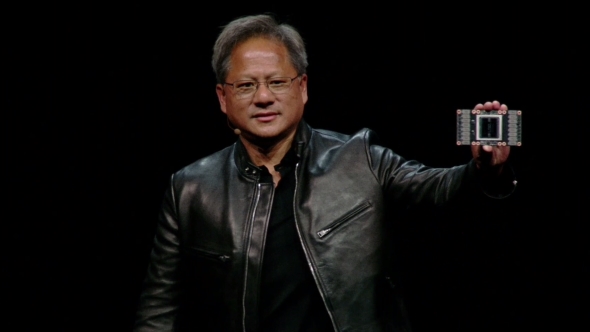

I’m so dreadfully bored of the constant debate about the demise of Moore’s Law. And we’ve just had another turgid bout of Nvidia and Intel’s respective CEOs making clear (as if we needed reminding) their ultra-predictable stances on the subject. Jen-Hsun recently took to the stage at the Beijing version of Nvidia’s GPU Technology Conference, presenting pretty much the same keynote he’s been delivering all year, covering some holodeck shizzle, their golf-playing Isaacs, and a lot of back-slapping over the power of their AI-focused Nvidia Volta GPU hardware.

And he also proclaimed the very definite death of Moore’s Law. He did this while, in pretty much the same breath, confirming it’s also effectively alive and well. Confused? Well, that’s because tech companies are just taking Moore’s Law to mean whatever the hell they want it to these days.

The original observation by good ol’ Gordo Moore, a few years before he co-founded Intel in 1968, was that the complexity for the minimum component cost of an integrated circuit was found to pretty much double every 12 months.

“The complexity for minimum component costs has increased at a rate of roughly a factor of two per year,” he wrote in a 1965 hot-take. “Certainly over the short term this rate can be expected to continue, if not to increase. Over the longer term, the rate of increase is a bit more uncertain, although there is no reason to believe it will not remain nearly constant for at least ten years.”

He didn’t directly mean the power of our PCs was doubling every year, but that the balancing point between increased complexity and affordability was shifting: for the same essential manufacturing costs you would be able to double the number of transistors you could cram into a chip every year.

He nudged the time frame out a touch in 1975, to every two years, and tweaked it to be a bit more specific about transistor and resistor count. And Intel have since added another six months onto that. Moore’s Law, then, is not dead, it’s just evolving, but then it’s not even a real law, otherwise people wouldn’t be able to mess around with it in the way they have.
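For a rough sense of how much those tweaks matter, here is a minimal Python sketch comparing the three cadences mentioned above over a decade. The function name `growth_factor` and the ten-year window are illustrative assumptions, and the 30-month figure simply takes Intel’s extra six months at face value on top of Moore’s two years.

```python
def growth_factor(years, months_per_doubling):
    """Multiplier on transistor count after `years`, given one doubling
    every `months_per_doubling` months (idealised, constant cadence)."""
    return 2 ** (years * 12 / months_per_doubling)

# Cadences taken from the text: 1965 (12 months), 1975 (24 months),
# and Intel's later stretch (assumed here to mean 30 months).
for months in (12, 24, 30):
    print(f"{months} months per doubling: {growth_factor(10, months):.0f}x in ten years")
```

Same "law", three very different decades: roughly a thousandfold at the original pace, 32 times at the 1975 pace, and 16 times with Intel’s extension.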

But the fact that El Gordorino himself played fast and loose with his own observations, and subsequently so have Intel, has meant that pretty much everyone just mangles it to make the observation fit their own stance in that particular moment, whether they want the Law in the box to be dead or alive when they take the lid off. Jen-Hsun’s now decided the number of transistors used in a given chip can double every two years and yet he claims Mozza’s Law can still be dead because CPU performance hasn’t doubled as well.

“Process technology continues to afford us 50% more transistors every year [it’s alive!],” he said on stage in Beijing this week, “however, CPU performance has only been increasing by about 10%… the end of Moore’s Law [shit, it’s dead again].”
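Taking the two quoted figures at face value, a quick back-of-the-envelope check shows why both claims can sit in the same sentence. This is only a sketch: the helper names are made up for illustration, and it assumes both rates compound steadily year on year, which the keynote did not spell out.

```python
import math

def growth_over(years, annual_growth):
    """Total multiplier after `years` of steady compound growth."""
    return (1 + annual_growth) ** years

def doubling_time_years(annual_growth):
    """Years needed to double at a steady compound annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth)

TRANSISTOR_GROWTH = 0.50  # "50% more transistors every year", as quoted
CPU_PERF_GROWTH = 0.10    # "about 10%", as quoted

print(round(growth_over(2, TRANSISTOR_GROWTH), 2))          # 2.25
print(round(doubling_time_years(TRANSISTOR_GROWTH), 2))     # 1.71
print(round(doubling_time_years(CPU_PERF_GROWTH), 2))       # 7.27
```

So 50% a year is a touch better than doubling every two years on transistor count, while 10% a year means waiting over seven years for CPU performance to double. Which of those counts as "Moore’s Law" depends entirely on which version you have decided to quote.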

But, guess what? It’s ok because GPU power has been growing exponentially. From a certain point of view. Nvidia have figured out that graphics cards are not actually best used for, well, graphics anymore. Nope, they’re not just really good at colouring in gibs and lobbing them across the screen, they’re also really good at thinking too.

Because of the way they’re arranged, with thousands of little programmable cores working in parallel, graphics cards are really good at doing lots of concurrent number crunching. At the moment, they’re having a real good time with the machine learning, AI, big data revolution. So, yay, GPUs are going to replace CPUs. Well, for a very specific use case anyways…

Intel’s current CEO, Brian Krzanich, did make the point earlier in the year that the G-Man’s Guesstimate was always essentially a financial one, “fundamentally a law of economics,” which is something Jen-Hsun’s kinda just side-stepped.

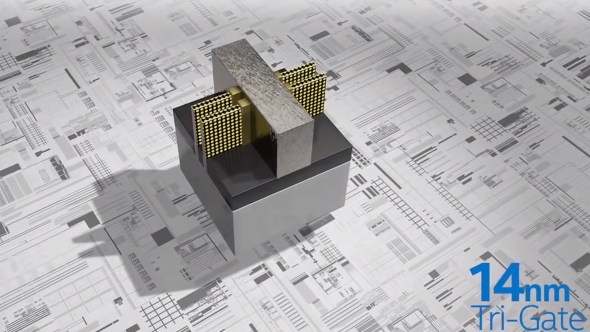

It was Intel who started banging the Moore’s Law drum again ahead of their Coffee Lake CPU release, though, with Stacey Smith wanging his wafers around on stage earlier this month. And he did so in the same city, Beijing, just before Nvidia, which is probably what woke up the leather-clad Nvidia CEO again. It was there that Intel desperately tried to convince everyone in the audience at the Technology and Manufacturing Day that their 10nm manufacturing process hasn’t been an unmitigated disaster, because look, they’ve got a wafer.

“We are pleased to share in China for the first time,” Smith said, “important milestones in our process technology roadmap that demonstrate the continued benefits of driving down the Moore’s Law curve.”

Forget the fact that Krzanich was on stage at CES in January holding up a finished laptop running with a 10nm Cannon Lake CPU inside it. Seems a little arse-about-face to me, showing off working product and then trying to get folk excited about the silicon inside it actually being manufactured some nine months later.

But then Krzanich’s machine would’ve been a prototype and Stacey Smith was talking about a production wafer. Whatever, we should still have even the pathetically low-power 10nm chips in our li’l laptops by now. But we don’t.

To be fair, AMD aren’t squeaky clean in all this Mooresian bull either; they even tried to coin the phrase Moore’s Law+ back in July. AMD’s CTO, The Papermaster, said that silicon technology alone couldn’t cope with their interpretation of the swinging ‘60s observation.

“It’s not just about the transistor anymore; we can’t just have transistors improving every cycle,” he explained. “It does take semiconductor transistor improvements, but the elements that we do in design, in architecture, and how we put solutions together, also keep in line [with] a Moore’s Law pace.

“Moore’s Law Plus means you stay in a Moore’s Law pace of computing improvement. So you can keep in with a Moore’s Law cycle but you don’t rely on just semiconductor chips, you do it with a combination of other techniques.”

It’s also cheaper, as you don’t have to try and figure out the necessarily expensive voodoo required to get down to 7nm and 5nm, with all the weird physics-defying shizzle that goes on down at that almost atomic level. Thankfully, we can ignore AMD’s version because everyone’s been happy to pretty much just let The Papermaster carry on doing his own thing and never bring up the whole Moore’s Law+ thing again.

So, is Moore’s Law dead? Is it alive? Who cares? One thing’s for certain, it’s not a law and it is inevitably doomed. We’re not going to keep using transistors forever. Soon enough we’ll have to move away from silicon, and ancient electrical switches, onto a more exciting, post-binary world. Maybe we’ll use lasers. I like lasers. Lasers are cool. That’s Dave’s Law, I’m taking that one.