With AI and real-time raytracing for games being shown off on Nvidia’s next-gen Volta graphics cards, you would be forgiven for thinking your current-gen GPU could soon be obsolete. But we’re about to see a future where multi-GPU gaming PCs are actually desirable again. Forget Nvidia’s SLI and AMD’s CrossFire, though: this is all about DirectX, machine learning, and raytracing… and using a second GPU as an AI co-processor.


All the talk coming out of the Game Developers Conference, from a tech point of view at least, has centred on the growth of artificial intelligence: how the power of GPUs can be used to accelerate machine learning for gaming, and how the same technology is now making real-time raytracing possible in games this year. Both Nvidia and AMD have been waxing lyrical about what their graphics silicon can offer the industry beyond pure graphics. And both have questionable names: Nvidia RTX and Radeon Rays. I mean, really?

But that noise is only going to get louder as Nvidia CEO Jen-Hsun Huang opens the company’s AI-focused GPU Technology Conference on Monday, bouncing around on stage in his signature leather jacket and, hopefully, at some point introducing a new GPU roadmap. Most of the raytracing demos shown at GDC this week were built and run on his Volta GPUs, a graphics architecture designed almost from the ground up to put the parallel processing power of its silicon to work on the demands of AI.

While developers have been getting excited about achieving real-time raytracing effects in their game engines, using Microsoft’s DirectX Raytracing (DXR) API and Nvidia’s RTX accelerator, they’ve only been able to do it with a $3,000 Titan V graphics card. Or, in the case of Epic’s Unreal Engine-based Star Wars demo, with $122,000 worth of DGX Station GPUs.

As Nvidia’s Tony Tamasi told us before GDC, “if you look at a typical HD res frame there’s about two million pixels in it, and a game typically wants to run at about 60 frames per second, and you typically need many, many rays per pixel, so you’re talking about needing hundreds of millions, to billions, of rays per second so you can get many dozens, maybe even hundreds, of rays per pixel.

“Film, for example, uses many hundreds, sometimes thousands of rays per pixel. The challenge with that, particularly for games, but even for offline rendering, is the more rays you shoot the more time it takes and that becomes computationally very expensive.”
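To put the figures in that quote into numbers, here’s a quick back-of-the-envelope sketch in Python. It assumes a 1920 x 1080 frame (the “about two million pixels” mentioned above) and a 60fps target; the rays-per-pixel values are illustrative picks, not figures from Nvidia.

```python
# Back-of-the-envelope ray budget for real-time raytracing.
# Assumptions: a 1920x1080 frame (~2 million pixels) at 60fps.
# The rays-per-pixel values below are illustrative only.

WIDTH, HEIGHT = 1920, 1080
FPS = 60


def rays_per_second(rays_per_pixel: int, width: int = WIDTH,
                    height: int = HEIGHT, fps: int = FPS) -> int:
    """Total rays that must be traced each second for a given per-pixel budget."""
    return width * height * fps * rays_per_pixel


if __name__ == "__main__":
    print(f"Pixels per frame: {WIDTH * HEIGHT:,}")
    for rpp in (1, 10, 100, 1000):
        print(f"{rpp:>5} rays/pixel -> {rays_per_second(rpp):,} rays/second")
```

At a single ray per pixel that’s already around 124 million rays per second; at 100 rays per pixel it’s more than 12 billion, which shows why film-style ray counts are out of reach for real-time games.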

Even on an Nvidia Volta box that’s computationally very expensive, which is why the vanguard of real-time raytracing features is going to be restricted to a few optional, high-end supplemental lighting effects rather than fully raytraced scenes.

To help, Nvidia says its Volta architecture has some features that accelerate raytracing, though it won’t yet say what they are. The Volta-exclusive Tensor cores do have an indirect impact on raytracing performance, because they can speed up the AI-driven de-noising used to clean up a scene rendered with relatively few rays per pixel.

But that means that when we come to try out any DXR features on the current crop of graphics cards in our gaming rigs, which lack those techie goodies, they’re toast.

As much as Microsoft want to assure us that DirectX Raytracing will run on current-gen DX12 graphics cards, there’s no chance those cards are going to be able to handle the rigours of DXR and the demands of traditional rasterized rendering at the same time. Couple that with the potential demands of games using the GPU for machine learning algorithms, via Microsoft’s WinML and DirectML APIs, and there’s suddenly going to be a huge number of extra computational tasks being thrown at your graphics card.

How is a graphics card that’s already working its ass off trying to render 60 frames per second at 4K the traditional way supposed to deal with everything else as well? But what if it wasn’t on its own?

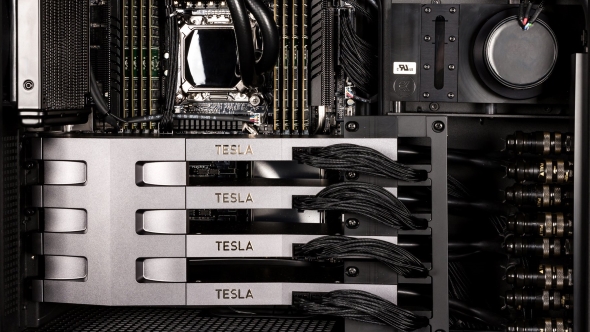

One of the less talked-about features of DirectX 12 has been its multi-GPU support. DX12’s explicit multi-adapter (mGPU) feature is brand agnostic and has been designed to let GPUs from multiple generations, and potentially from different manufacturers, work together in the same machine. It hasn’t been used particularly widely, partly because of the current cost of graphics cards and partly because of the difficulty of apportioning render tasks between GPUs that are going to complete them at different rates.

SLI and CrossFire have historically been a bit of a nightmare to use on a day-to-day basis. Sure, if you throw two or three graphics cards into the same machine then you’re generally going to get better gaming performance than with a single card. But having run both SLI and CrossFire gaming rigs in the past, I know first-hand that inconsistent gaming performance is the biggest problem. You’re not guaranteed to always get higher frame rates, and even when you do there’s the ever-present spectre of the dreaded microstutter.

Day-one releases rarely ship with the profiles needed for multi-GPU support, and that has been especially true over the last year or so. Some games never get multi-GPU support at all, which means there will definitely be times when you’ve got an expensive graphics card in your rig doing nothing. What a waste.

But if we can use DirectX’s multi-GPU feature to apportion AI and raytracing compute workloads to different graphics cards, then we don’t have to wait for game-specific driver profiles, because with DX12’s explicit approach the game engine itself decides how the work is distributed. And you won’t be wasting the potential of at least one of your expensive GPUs.

With the sorts of workloads used in DXR, and the DirectML-based neural networks Microsoft has been talking about, a multi-GPU setup means one slice of silicon doesn’t have to carry the weight of both the AI work and standard rasterized rendering at the same time.

And if the GPUs are each handling a different type of workload, rather than trying to render alternate frames or the like, they’ve surely got a better chance of balancing the work between them.

Potentially, then, if your old AMD or Nvidia DX12 graphics card is freed from the demands of rasterized rendering, it might be better able to concentrate on the compute work that accelerates AI or raytracing loads. That could extend the life of your old hardware: when you upgrade to a new graphics card, the old one could stay in your rig as a dedicated AI co-processor.
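To illustrate the balancing argument, here’s a deliberately simple toy model in Python. It is not how DirectX 12’s multi-adapter support actually schedules work: the frame-time figures are made up, and the flat cost of copying results between cards (`transfer_ms`) is an assumption. It compares one GPU doing rasterization and raytracing/AI compute back to back with two GPUs that each take one type of work and pipeline consecutive frames.

```python
from dataclasses import dataclass


@dataclass
class FrameWork:
    raster_ms: float   # hypothetical time to rasterize one frame
    compute_ms: float  # hypothetical time for raytracing / ML work (e.g. de-noising)


def fps(frame_ms: float) -> float:
    """Convert a frame interval in milliseconds to frames per second."""
    return 1000.0 / frame_ms


def single_gpu_frame_ms(work: FrameWork) -> float:
    # One GPU runs both workloads back to back for every frame.
    return work.raster_ms + work.compute_ms


def split_gpu_frame_ms(work: FrameWork, transfer_ms: float = 1.0) -> float:
    # Two GPUs pipeline the work: while one rasterizes frame N+1, the other
    # handles compute for frame N. Steady-state throughput is set by the
    # slower stage; the assumed transfer cost is pessimistically added on top.
    return max(work.raster_ms, work.compute_ms) + transfer_ms


def split_gpu_latency_ms(work: FrameWork, transfer_ms: float = 1.0) -> float:
    # Any single frame still passes through both stages plus the copy,
    # so pipelining raises throughput but not per-frame latency.
    return work.raster_ms + transfer_ms + work.compute_ms


if __name__ == "__main__":
    # New card doing everything itself (made-up timings).
    new_only = FrameWork(raster_ms=12.0, compute_ms=8.0)
    # Compute moved to an older, slower card (made-up timings).
    with_old_card = FrameWork(raster_ms=12.0, compute_ms=11.0)

    single = single_gpu_frame_ms(new_only)
    split = split_gpu_frame_ms(with_old_card)
    print(f"Single GPU: {single:.1f} ms/frame ({fps(single):.1f} fps)")
    print(f"Two GPUs:   {split:.1f} ms/frame ({fps(split):.1f} fps), "
          f"latency {split_gpu_latency_ms(with_old_card):.1f} ms")
```

With these invented numbers, handing the compute work to a slower, older card still beats one card doing everything (13ms versus 20ms per frame, roughly 77fps versus 50fps), but each individual frame takes longer to get through the whole pipeline, and the advantage evaporates once the old card’s compute time creeps up towards the single card’s combined frame time.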

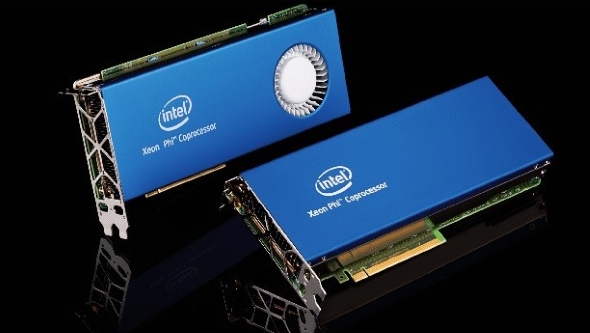

But we’ve only really spoken about AMD and Nvidia so far. Don’t forget there will soon be a third player in the graphics card market, with Intel joining the fray. Intel has announced it is aiming to produce high-end discrete graphics cards, most likely because of the huge interest in using GPUs rather than CPUs for AI computation.

The graphics card market is incredibly competitive, and gaining a foothold is going to require a Herculean effort on Intel’s part. It already has some stunningly smart people on staff, and has hired another, former AMD Radeon chief Raja Koduri, to head up this push for graphics power.

Breaking into the existing gaming GPU arena, however, one mostly built around the demands of rasterization, might prove almost impossible for Intel. But with the growth of AI compute in games, there’s a school of thought which suggests it could skip straight to optimising its hardware for those workloads and create dedicated AI co-processors with GPUs at their hearts.

Okay, so that school of thought exists purely in my head. And I haven’t had a lot of sleep, so there are probably a lot of holes in my logic, but creating a whole new line of gaming components could be very lucrative for Intel. Back when 3dfx created its Voodoo 3D accelerators to sit alongside the standard 2D graphics cards of the time, it was seen as a rather risky manoeuvre.

And look how that’s worked out for Nvidia.