Intel has confirmed its next-gen discrete graphics card will be built on the Gen 11 foundations being laid in the Ice Lake processors launching at the end of this year. The Intel Xe discrete GPU was conspicuous by its absence from the company’s CES press conference, but the following day Gregory Bryant announced at the JP Morgan Annual Tech Forum that it’s going to be based on the core IP used in Intel’s 10nm integrated graphics.

“The IP base that we have on our Gen graphics,” explains Bryant, “that core IP base is what’s being developed and built on to get to discrete graphics for both the client and the datacentre.”

There hasn’t been a lot of concrete information about the Intel Xe 2020 discrete graphics card architecture, and this is the first confirmation that it’s going to be largely based on Intel’s existing GPU designs, and not on a completely new graphics architecture. There had been speculation Raja Koduri and co. would utilise the current execution unit (EU) design of its Gen graphics to fill out a full discrete GPU, and now we know for sure.

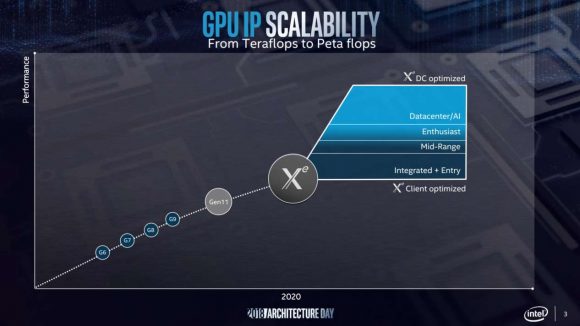

The Intel Xe branding is replacing what would have been the Gen 12 integrated graphics silicon going into any new processors launching in 2020. But with Intel moving on to create a discrete card it makes sense for the company to start afresh with a new brand name for all the GPUs going into its processors, graphics cards, and datacentre designs.

“Gen 11 is a great step forward for us,” says Bryant. “You’ll see us do it again with Gen 12 graphics for 2020 and Gen 12 graphics IP is the basis for that discrete graphics portfolio that Raja is architecting and building.”


The 10nm Gen 11 GPU being built into the Ice Lake range of processors will have more than double the execution units of the Gen 9 graphics, with 64 EUs versus the 24 EUs of the previous 14nm design. It’s being created to break the 1 TFLOPS barrier and more than double the performance-per-clock of its last-gen graphics silicon. The Gen 11 GPU will also support adaptive sync displays, something that Nvidia is finally getting on board with via its G-Sync Compatible program.

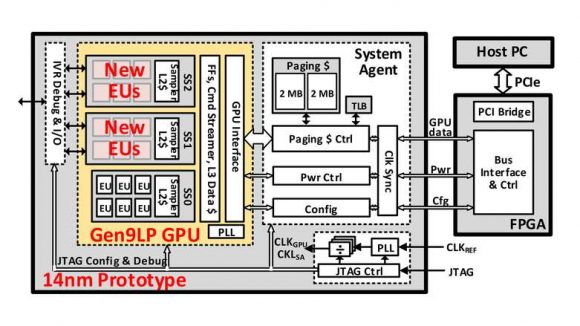

But that still doesn’t mean the exact same spec of the Gen 11 GPU will be used in the Intel Xe graphics card. Back at the start of 2018 Intel designed a prototype discrete GPU using its 14nm Gen 9 execution units, packing 18 low-power EUs across three sub-slices (roughly analogous to Nvidia’s SMs) to offer simple, parallel graphics processing in a tiny, 64mm² package. It subsequently showed the research off at the ISSCC event in February.

Scale that prototype up to something the size of an RTX 2080, at 545mm², and you could end up with some serious Intel GPU power. The early 14nm prototype only had 6 EUs per sub-slice, but with the 10nm Gen 11 chips using 16 EUs per sub-slice to make up their heady 64 EU count, each full GPU slice, with the same three sub-slices, could potentially end up with around 48 EUs.

Even just throwing some admittedly terrible, back-of-a-napkin maths at this, taking a 48 EU chunk of silicon that’s just 64mm² (as the prototype GPU was) and scaling it up to the size of an RTX 2080 chip, you could end up with more than 400 EUs in a discrete Intel Xe card. With 64 Gen 11 EUs offering at least 1 TFLOPS of processing power, if it scaled in a linear fashion, such a discrete GPU could end up with between 6 and 7 TFLOPS. That would put it around RTX 2070 levels of performance.
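For anyone who wants to check the napkin, the estimate above can be reproduced in a few lines of Python. This is only a sketch of the same rough arithmetic: it assumes EU count scales linearly with die area and that throughput scales linearly with EU count, neither of which is guaranteed in real silicon. The function and constant names are made up for illustration.

```python
# Back-of-a-napkin scaling estimate, using only figures quoted in the article.
# Assumptions (not Intel specs): EU count scales linearly with die area, and
# TFLOPS scales linearly with EU count.

PROTOTYPE_DIE_MM2 = 64.0    # die area of Intel's 14nm prototype GPU
RTX_2080_DIE_MM2 = 545.0    # die area used as the "big GPU" target
SUBSLICES_PER_SLICE = 3     # sub-slice count of the prototype
EUS_PER_SUBSLICE = 16       # per-sub-slice figure quoted for Gen 11
GEN11_EUS = 64              # EU count of the Ice Lake Gen 11 GPU
GEN11_TFLOPS = 1.0          # "at least 1 TFLOPS" for those 64 EUs


def scaled_eu_count(eus_per_block: float, block_mm2: float, target_mm2: float) -> float:
    """Scale an EU count by the ratio of target die area to block die area."""
    return eus_per_block * target_mm2 / block_mm2


def linear_tflops(eus: float, ref_eus: float, ref_tflops: float) -> float:
    """Estimate throughput assuming it grows linearly with EU count."""
    return eus / ref_eus * ref_tflops


eus_per_slice = SUBSLICES_PER_SLICE * EUS_PER_SUBSLICE
estimated_eus = scaled_eu_count(eus_per_slice, PROTOTYPE_DIE_MM2, RTX_2080_DIE_MM2)
estimated_tflops = linear_tflops(estimated_eus, GEN11_EUS, GEN11_TFLOPS)

print(f"EUs per slice: {eus_per_slice}")
print(f"Estimated EUs at {RTX_2080_DIE_MM2:.0f}mm2: {estimated_eus:.0f}")
print(f"Estimated throughput: {estimated_tflops:.1f} TFLOPS")
```

That works out at roughly 409 EUs and about 6.4 TFLOPS, which is where the "more than 400 EUs" and "between 6 and 7 TFLOPS" figures come from.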

As Intel itself said, however, that prototype wasn’t the basis for a future product, more a proof of concept for fine-grained power management and low-power performance. But power management is going to be key to creating an efficient, high-performance, and large-scale discrete GPU product, which still makes this research fascinating.

My poor excuse for maths notwithstanding, we are at least starting to get a clearer picture of what Raja Koduri is building towards for his grand 2020 Intel Xe opus. And it’s actually kind of exciting to be able to say with increasing confidence that Intel is going to release a full discrete graphics card, for gamers, next year.

What a world…