The Intel Xe GPU represents the company’s first foray into discrete graphics since the ill-fated Larrabee project many years ago. But let’s not dwell on the past: this is a bright new future for Intel graphics cards, and we’ve now seen the first Xe DG1 graphics card in the flesh. It’s potentially a bright new future for PC gamers too, as it means we’re going to have a genuine third player in the GPU market, and that can only be a good thing.

Intel’s Xe GPU was effectively announced last August via a teaser tweet promising to “set our graphics free” with a discrete graphics card in 2020. But it wasn’t until December 2018, at the company’s Architecture Day, that we found out the actual name Intel had chosen for its new GPU venture: Xe.

It didn’t really reveal a whole lot about the underlying graphics tech at the event, besides the fact that it’s pronounced X-E, and not Zee as I can’t help but do. That’s the problem with having both Xe and Xeon in your product portfolio and not following the same phonetics…

But that doesn’t mean we don’t know a fair bit about the new entry into the PC graphics card market. Well, we at least have a few scurrilous rumours, some genuine leaks, some information direct from the big ‘zilla itself, and a few educated guesstimates to whet our appetites for what this year has to offer.

Yes, Intel is going to release a discrete graphics card for the gaming market this year, and it looks like the early engineering samples are already on their way out to developers too. The first Intel Xe card’s even appeared in an online graphics benchmarking database to prove it can actually power up. For those of us who waited through the Larrabee debacle it’s rather exciting to be able to say that.

Vital Stats

Intel Xe release date

We’re thinking that, between a tweet from Raja Koduri and some ‘insider sources’, we’re looking at a summer 2020 release for the new discrete Intel Xe graphics cards.

Intel Xe specs

Intel has announced three distinct microarchitectures for the Xe family of GPUs. There will be Xe-LP, Xe-HP, and Xe-HPC covering Intel graphics silicon from integrated and entry-level chips, all the way up to exascale high-performance computing. It looks likely that the initial Xe GPUs will largely be based on the building blocks put in place by the Ice Lake Gen11 graphics architecture. And we’re expecting a 96 EU Xe-LP DG1 chip first, with 128 EU, 256 EU, and 512 EU Xe-HP parts for the enthusiast and datacentre markets to follow.

Intel Xe performance

Not a lot is known about the potential performance of the new Intel graphics cards, but Gen11 GPUs with just 64 EUs are capable of decent 720p gaming. So with many times that figure, the Intel Xe cards could be genuinely competitive. If they clock high enough…

Intel Xe price

Spanning entry-level, mid-range, and high-end, you’re looking at a spread of graphics card pricing potentially from $200 all the way up to $1,000… depending on actually how powerful that 512 EU card turns out to be.

What is the Intel Xe release date?

Our best guess for the Intel Xe release date is June 2020. That’s based on a couple of things: Raja Koduri, the popular public face of Intel’s renewed push into the discrete graphics card market, tweeted out a picture that’s widely believed to be some sort of launch window tease, and another source has suggested a mid-2020 release.

Intel CEO, Bob Swan, has announced the first discrete Xe card, DG1, has completed its power-on testing, and early dev kits have been listed on the EEC database. So things are definitely moving, and hopefully that also means they’re on track.

Raja Koduri was once AMD’s GPU engineering maestro, before switching allegiance around the end of 2017. Following a leave of absence after the launch of Vega, he announced his decision to leave AMD “to pursue my passion beyond hardware,” a pursuit which took him straight into the arms of Intel and its hardware…

Now he heads up the company’s Core and Visual Computing group, and is driving forward the cause of discrete GPUs. The pun is fully intended, with Raja recently tweeting out a picture of his Tesla with a new license plate reading “THINKXE”.

@IntelGraphics pic.twitter.com/T2symDHxJ7

— Raja Koduri (@Rajaontheedge) October 4, 2019

The little nugget which has caused people to cite this as a release date teaser is that the expiry date reads June 2020. It could very well be a coincidence, and Koduri could just be showing off both his green credentials and love of a customised license plate, or it could be a little nod to the future of Intel graphics.

Then a subsequent DigiTimes report cited “industry sources” which claimed a mid-2020 Intel Xe release date, which seems to tally quite nicely with the Raja tease too.

Fresh wafer pic.twitter.com/M2zaaYLSDD

— Raja Koduri (@Rajaontheedge) February 6, 2020

In case you were wondering just how shiny these discrete GPUs will be, Raja Koduri has you covered. Here he is posing with what looks like an Intel Xe GPU wafer.

We also know Intel plans to ramp up into the data centre market with Xe in 2021 following the launch of its Ponte Vecchio GPU within the US Department of Energy’s Aurora supercomputer.

What are the Intel Xe specs?

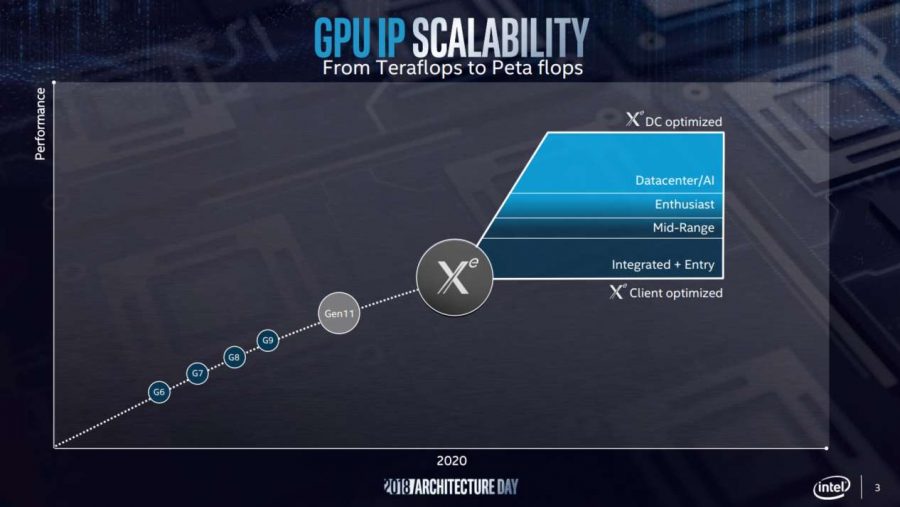

Intel has announced that there are going to be three distinct GPU microarchitectures for Xe, covering the full breadth of chips from the lowest of the integrated pool, all the way up to super high-performance supercomputers.

“These three micro architectures,” says the chip maker, “allow Intel to scale the Xe architecture from low-power mobile (Xe-LP) up to exascale HPC applications (Xe-HPC).”

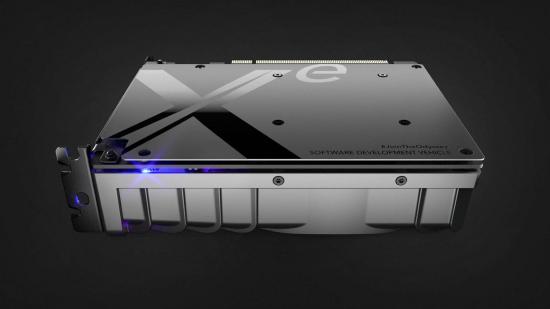

Despite Intel showing off the Software Development Vehicle card at CES 2020, it hasn’t divulged any further information about specs. But the DG1 is surely the 96 EU part that was recently confirmed in EEC registration documents for new developer kits going out.

Although there has been talk of the Intel Xe GPU being built from the ground up, Intel’s Gregory Bryant has explained that its first discrete cards will be built from the building blocks of its existing graphics IP. And that means we’re expecting the Xe tech to essentially be the Gen11 GPU architecture of the new Ice Lake processors writ large.

Though at DevCon recently there has been more talk about the new GPU architectures, with mention of a variable vector width for the Xe design. That sounds a little like the way AMD’s new RDNA architecture has been designed to offer both high throughput as well as a huge amount of parallelism.

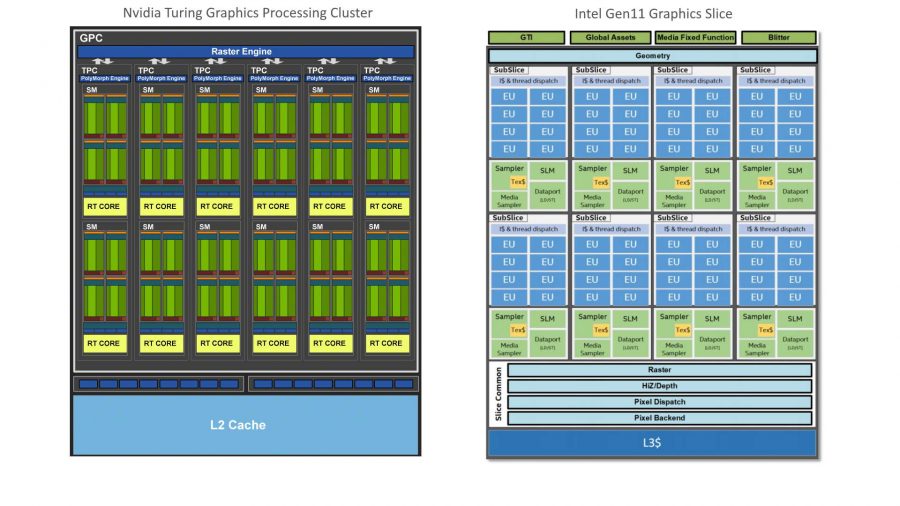

The way the current Intel graphics silicon is designed means it’s comprised of a number of execution units (EUs) arrayed in groups of eight with some shared resources. That’s called a subslice, and there are eight of these subslices grouped together to form a full slice, which shares L3 cache and the raster backend. This 8×8 makeup is what gives Ice Lake’s Gen11 GPU its full 64 execution unit count.

If you look at the design of a slice, on the surface at least, the block diagrams make it look a lot like a graphics processing cluster (GPC) from one of Nvidia’s recent GPUs. For subslice read SM, and for EU read CUDA core. Each of Intel’s execution units contains a pair of arithmetic logic units (ALU), which support both floating point and integer operations, while Nvidia CUDA cores contain one fixed FP and one INT unit. In that sense Intel’s low level GPU design is closer to AMD’s Graphics Core Next, or Navi RDNA execution units.

They are different beasts – and comparing Intel execution unit count to CUDA cores, or RDNA cores, is a bit of an apples vs. oranges vs. pomegranate comparison – but you can see how Intel could bring multiple slices together to create larger, more powerful graphics chips with its existing design.

Back in July Intel published a developer version of its graphics drivers which featured a bunch of different codenames. It was quickly taken down, but such is the way of the interwebs, a user on the Anandtech forums posted them all. The key entries from the perspective of Intel Xe are the ones under the DG1 and DG2 headers.

- iDG1LPDEV = “Intel(R) UHD Graphics, Gen12 LP DG1” “gfx-driver-ci-master-2624”

- iDG2HP512 = “Intel(R) UHD Graphics, Gen12 HP DG2” “gfx-driver-ci-master-2624”

- iDG2HP256 = “Intel(R) UHD Graphics, Gen12 HP DG2” “gfx-driver-ci-master-2624”

- iDG2HP128 = “Intel(R) UHD Graphics, Gen12 HP DG2” “gfx-driver-ci-master-2624”

This now seemingly relates to the Xe-LP and Xe-HP GPUs, spanning the integrated and entry level DG1 to the enthusiast and professional-level DG2 cards.

From this it looks like the initial discrete Xe GPU (DG1) will be a low-power version with the same essential makeup as the integrated chip inside the upcoming Tiger Lake processors. That would put it at 96 EUs, as shown in an early CompuBench database listing for the chips.

Intel has promised that its Tiger Lake graphics would double the performance of its current Ice Lake GPUs, and that would indicate an entry-level discrete card with 1080p/60fps gaming performance. Or at least that is what Intel Japan has been saying.

The DG2 listings are more promising, however, though whether this represents a second-gen version of Xe to release later than 2020 we’re not sure. I’m hoping they’re just the cards filling out the rest of the Xe graphics card stack.

The general interpretation of the code is that it’s the high-power (HP) version of Intel’s Gen12 graphics, and the discrete graphics (DG) version. That would mean they reference three different Intel Xe graphics cards. The final number on the end of the codename can be seen as the total EU count of that particular GPU, and that’s based on standard Intel syntax for existing codenames.

That would mean we’ll have a 128 EU low-end card, offering twice the silicon of the current top Ice Lake integrated GPU hardware. That would be an Intel Xe GPU with a pair of full graphics slices in its makeup, and then the mid-range 256 EU part would come with four slices to create its GPU.

At the top of the Intel Xe stack would then be a 512 EU chip, sporting eight individual slices of graphics goodness, and that could make it a very powerful GPU indeed. So long as Intel can nail high clock speeds for its graphics silicon…
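That reading of the codenames can be sketched in a few lines of Python. This is purely an illustration: the regular expression, the `parse_dg_codename` helper, and the assumption of 64 EUs per slice (carried over from the Ice Lake Gen11 layout described earlier) are ours, not anything Intel has confirmed about Xe.

```python
import re

# Assumption: a slice holds 64 EUs (8 subslices x 8 EUs), as in Ice Lake's Gen11 GPU.
EUS_PER_SLICE = 64

# Hypothetical pattern for the leaked DG2-style codenames, e.g. "iDG2HP512".
CODENAME_RE = re.compile(r"^iDG(?P<gen>\d)(?P<power>HP|LP)(?P<eus>\d+)$")


def parse_dg_codename(codename):
    """Split a leaked codename into DG generation, power class, EU count and slices."""
    match = CODENAME_RE.match(codename)
    if match is None:
        return None  # e.g. "iDG1LPDEV" carries no trailing EU count
    eus = int(match.group("eus"))
    return {
        "dg": int(match.group("gen")),
        "power": match.group("power"),
        "eus": eus,
        "slices": eus // EUS_PER_SLICE,
    }


for name in ("iDG2HP128", "iDG2HP256", "iDG2HP512", "iDG1LPDEV"):
    print(name, parse_dg_codename(name))
```

Under those assumptions the three DG2 entries map to two, four, and eight slices, while the DG1 entry returns nothing because its name ends in DEV rather than a number.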

Therein lies the rub. If Intel’s discrete Xe graphics cards are still running at the same 1.1GHz clock speeds as their integrated CPU siblings then they’re going to struggle to be competitive with the graphics silicon coming out from either AMD or Nvidia’s GPU skunkworks. The hope is that because the Xe GPU is not having to cope with the thermal restrictions of an added bunch of CPU cores sat cheek by jowl with it, it will be able to run quicker than the integrated chips.

Now, one of the problems with Intel’s 10nm process, and part of the reason why we still haven’t seen any desktop 10nm CPUs, is that they struggle to hit the sort of frequencies that Intel’s 14nm processors can. GPUs don’t necessarily have to clock as high as a 5GHz CPU, for example, so it wouldn’t be beyond the realms of possibility for Xe chips to knock around the 1.7GHz or 1.8GHz marks, or potentially even 2GHz.

In terms of the cache on offer each Ice Lake slice has 3MB of L3 cache and 512KB of shared local memory. Spread that out to a potential eight-slice GPU and you’re looking at 24MB L3 and 4MB of shared. We don’t yet know what video memory solution Intel is likely to use for its discrete cards, but Koduri has said in a redacted interview that it wouldn’t necessarily be limited to GDDR6, but that Intel could also match its new GPUs with the more expensive high bandwidth memory (HBM).

And we all know how well that went down with your Vega designs, eh Raja?

But Intel issued some clarification on the now removed interview suggesting that Koduri had been referencing a potential high-end datacentre part for a potential HBM design. That makes more sense and means we’re probably going to see the standard GDDR6 setup for both the entry and enthusiast class Xe graphics cards.

For Intel’s part it has said that its 7nm generation will kick off with a Xe datacentre GPGPU built using the Foveros stacking technique. That’s likely where Raja’s HBM dreams have gone. The company has since announced that this GPU will be called Ponte Vecchio – after that super old bridge in Italy.

Ponte Vecchio is just one “microarchitecture” within the Intel Xe architecture umbrella. Other uses for Xe include gaming, mobile, and workstation cards – all utilising different loadouts of the same underlying tech.

“We are not disclosing today all the details on the construction of Ponte Vecchio,” Ari Rauch, VP of architecture, graphics, and software, says during a pre-briefing call on Intel Xe. “But you can assume that this device takes advantage of all the latest and greatest technology from Intel: 2D/3D memory technology all in place. I cannot confirm, but there’s a lot of technology packed into this amazing device.”

How the individual slices will be connected is still something that’s up for debate. There is the possibility that Intel might take the chiplet approach and create each slice as an individual piece of silicon. This would reduce the complexity, and therefore cost, while also potentially increasing yields… something the 10nm tech has struggled with in the past.

But connecting the slices together, whether in chiplet or monolithic form, is going to be vital in the final picture of overall gaming performance.

How will Intel Xe GPUs perform?

The current 64 EU Ice Lake GPUs are capable of some decent levels of 720p gaming performance. They can even deliver a modicum of 1080p fun, albeit at rather more staccato frame rates. Double, quadruple, or even octuple the hardware in those integrated GPUs and you should be looking at significantly more gaming performance down the line.

We’ve seen the early DG1 prototype running Destiny 2 pretty well, though we don’t know what the resolution or settings were. But we’ve also checked out the 96 EU part running Warframe at 1080p Low and there we’re looking at around the 30fps mark.

Where they eventually line up against the green and red competition will come down to how fast the chips can run, how well Intel can optimise the hardware and the software in terms of scaling up the number of execution units, and how exactly the different slices are connected together on the die.

And also whether the mythical multi-GPU malarkey ends up being more than just an Intel feature that gets shouted about around launch, but is too costly and complicated for any developer to actually implement themselves.

There’s some interesting back-of-a-napkin maths over at TechSpot that suggests the Xe 128 card will sit at just about the performance of the GTX 1650, with the Xe 256 GPU sitting in between the RTX 2060 and 2060 Super. The top-end Xe 512 card in its guesstimate actually sits just above the Nvidia RTX 2080 Ti, which would be quite an achievement. That’s all predicated on the GPU running at 1.7GHz and TechSpot’s TFLOPS calculations actually holding water…
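For a sense of where numbers like these come from, here is a minimal sketch of the usual peak-throughput arithmetic. It is not TechSpot’s actual method: the figure of 16 FP32 operations per EU per clock (two four-wide ALUs, each doing a fused multiply-add) is an assumption carried over from Gen11, and the function name is ours.

```python
# Assumption: each EU has two 4-wide FP32 ALUs, and a fused multiply-add
# counts as two operations, giving 2 * 4 * 2 = 16 FLOPs per EU per clock.
FLOPS_PER_EU_PER_CLOCK = 2 * 4 * 2


def peak_fp32_tflops(eu_count, clock_ghz):
    """Theoretical peak FP32 throughput in TFLOPS; real games will see less."""
    return eu_count * FLOPS_PER_EU_PER_CLOCK * clock_ghz / 1000.0


# Rumoured EU counts from the leaked driver, at the 1.7GHz clock TechSpot assumes.
for eus in (128, 256, 512):
    print(f"{eus} EUs @ 1.7GHz: {peak_fp32_tflops(eus, 1.7):.1f} TFLOPS")
```

With those inputs the 128, 256, and 512 EU parts come out at roughly 3.5, 7.0, and 13.9 TFLOPS respectively. Peak TFLOPS is only a rough proxy for gaming performance, especially when comparing different architectures, which is why the whole exercise stays firmly in guesstimate territory.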

Intel has introduced support for variable rate shading (VRS) into its Gen11 graphics library, meaning that games built with that in mind should perform better with the feature enabled. In the synthetic 3DMark VRS test Intel has promised a 40% uplift in its Ice Lake chips with variable rate shading enabled.

The Intel Xe GPUs will also support Adaptive Sync, so your FreeSync-supporting monitors ought to provide smooth gaming when they’re plumbed into a Xe graphics card too.

There was a suggestion recently that an Intel exec at a Japan event had confirmed that Intel was baking ray tracing support into its Xe GPUs. Intel has since clarified that as an error in translation… but it hasn’t actually detailed whether or not it’s true.

Whatever happens with the ray tracing support in its new Xe graphics cards, at least Intel CPUs now have access to ray tracing. You only have to check out World of Tanks now to see ray traced shadows firing out of your CPU. It’s maybe just a shame ray tracing works better on AMD chips…

Intel is also working on Xe’s multi-GPU performance for its first discrete graphics cards, with the potential for them to work with the integrated GPU silicon of its CPUs to boost gaming performance. So far it’s only evidenced in Linux driver patches, so there’s no guarantee the multi-GPU work will bear fruit on the gaming side, as it might be restricted to simpler compute workloads alone.

But the latest development kits have been shipped with the 96 EU DG1 as well as either an unnamed eight-core or six-core CPU. If Intel is looking to encourage devs to code with multi-GPU in mind then maybe it’s shipping out early Tiger Lake CPUs with Gen12 processor graphics inside them to help the whole process…

How much will Intel Xe GPUs cost?

Intel stated at the Architecture Day last December that it was aiming to provide Xe GPUs across a wide spectrum of the graphics industry. That means going right from the CPU integrated chips, through mainstream, enthusiast, and on to datacentre and AI professional cards.

We had thought Intel was going to lead with its discrete GPUs for the gaming market, but the DigiTimes release date report suggests the company is going to be more aggressive than that.

“Instead of purely targeting the gaming market,” the report says, “Intel is set to combine the new GPUs with its CPUs to create a competitive platform in a bid to pursuit business opportunities from datacenter, AI and machine learning applications and such a move is expected to directly affect Nvidia, which has been pushing its AI GPU platform in the datacenter market, the sources noted.”

In an earlier interview Raja was seemingly misquoted as promising the entry-level Xe GPU would cost $200, because not all users would buy a $500 – $600 graphics card. But Intel had to clarify it as a mistranslation, instead saying that was just an example of entry-level discrete GPU pricing.

Until we’re a lot closer to release, and maybe even have an idea of what products Intel is aiming to go up against, we still won’t have a real clue as to how much Intel will charge for its first Xe graphics cards. But there is expected to be some news at CES 2020, and potentially some sort of Architecture Day Redux early in 2020 too.